Let's explore one of the best services for events on AWS

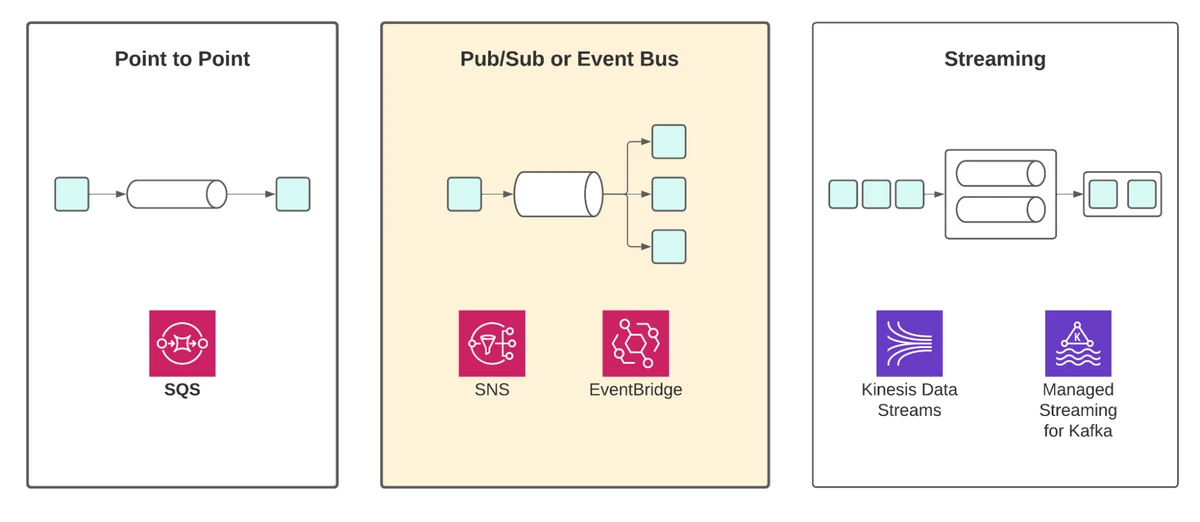

EventBridge has generated a lot of buzz since it was announced in mid-2019. Compared to prior services, such as SQS, SNS, Kinesis and Kafka, EventBridge is a product that follows the spirit of serverless, serving a much broader set of use cases with very little up-front configuration required.

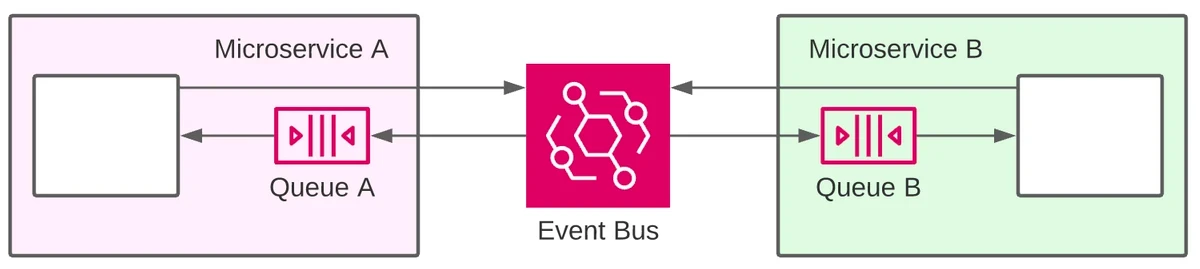

Within our categorisation of event services, EventBridge fits more closely with the Pub/Sub messaging category. However, Pub/Sub typically deals with messages relating to a topic, whereas EventBridge is an event bus, allowing messages to be sent and consumed without having to create individual topics.

EventBridge has a broad number of applications and use cases:

- When a message is to be sent to multiple consumers with minimal coupling, and when the number of consumers can grow over time.

- To build an event backbone: a general-purpose bus where lifecycle events can be published without having to consider the needs of all consumers up front. An event backbone provides support for event-driven applications for many message types where the event producer and consumers are not necessarily aware of each other. We will discuss a more concrete example for this use case in the next section.

- To create integrations between multiple components, AWS applications, different AWS accounts and between applications running on AWS and third-party vendors. It’s useful to think of EventBridge as a go-to solution for any type of integration where loose coupling is required.

- To handle events by sending them directly to other AWS services. EventBridge is well integrated with many other AWS products, reducing the amount of custom code required for data transformation and forwarding.

On the other hand, EventBridge is not well suited to some cases, in particular:

- Where near real-time latency is required consistently.

- When strict ordering of messages is required.

Building an event backbone with EventBridge

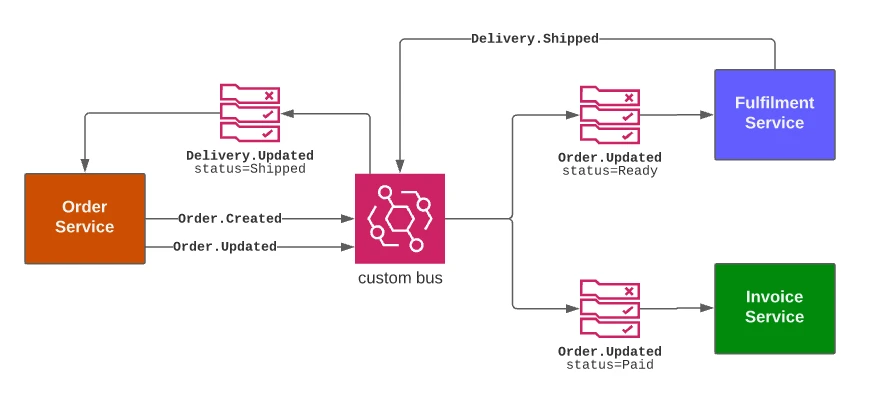

The figure above shows a simple version of an eCommerce application, with three separate components: the Order service, the Fulfillment service, and the Invoice service.

When an order is created or updated, the Order service sends Created/Updated events to the EventBridge bus.

Similarly, the services responsible for fulfillment and invoicing both handle Order status events, but based on different Order statuses. Finally, the Order service itself reacts to Delivery status events and updates the Order accordingly.

With this architecture, we have built a system where the services don’t need to be aware of the origin of the events they receive or the destination of any events they send, which removes any direct coupling between them.

If you are really embracing the event backbone concept for all applications and services, it’s likely that these will be running in different AWS accounts. EventBridge supports cross-account propagation by using a cross-account bus as a rule target. To achieve the cross-account event backbone, you would create an EventBridge bus in each account. To propagate events across accounts, you have some options:

- Create rules in every bus to propagate to every other cross-account bus. This is onerous and introduces a lot of cross-application awareness, increasing coupling.

- Create a global “hub” event bus. Every application then publishes to this bus rather than their “local” account bus. The global bus has rules to forward events to every account except the account that sent the event. Each application then creates rules on their local bus only.

Note that once you have a second bus as a rule target you cannot use EventBridge rules in that second bus to forward events to a third bus!
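As a rough sketch of the hub option, the forwarding rules on the global bus could use one pattern per member account that matches everything except events originating from that account. This assumes the event's `account` field identifies the sender and that `anything-but` matching is acceptable for your setup; the helper names and account IDs are hypothetical.

```python
import json


def forwarding_pattern(target_account_id: str) -> str:
    """Build a rule pattern for the hub bus that matches every event
    except those that originated in the target account (assumption:
    the event's "account" field identifies the sender)."""
    pattern = {"account": [{"anything-but": [target_account_id]}]}
    return json.dumps(pattern)


def hub_rules(account_ids: list[str]) -> dict[str, str]:
    """Return one forwarding pattern per member account, keyed by account ID."""
    return {account_id: forwarding_pattern(account_id) for account_id in account_ids}


if __name__ == "__main__":
    # Placeholder account IDs, for illustration only.
    for account_id, pattern in hub_rules(["111111111111", "222222222222"]).items():
        print(account_id, pattern)
```

Each pattern would be attached to a hub rule whose target is the local bus in the corresponding account.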

EventBridge Features

Let’s run through the main features and characteristics of EventBridge.

EventBridge is a managed service

EventBridge is a fully managed service, with AWS taking care of distributing the infrastructure across availability zones (AZs).

EventBridge supports at-least-once delivery. As with most distributed event systems, there are cases where the service cannot know whether an event has been delivered successfully, so it sends the same event again. Event processors should therefore be able to handle duplicate delivery, usually by implementing idempotency. Idempotent processing means that a message delivered more than once has the same effect as if it had been delivered only once.
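To make the idea of idempotent processing concrete, here is a minimal sketch that skips events it has already handled. It assumes the event `id` stays the same across redeliveries and uses an in-memory set purely for illustration; a real consumer would need a durable store, and you might prefer a business key from the payload. The class name is hypothetical.

```python
from typing import Callable


class IdempotentProcessor:
    """Wraps a handler so that each event ID is processed at most once."""

    def __init__(self, handler: Callable[[dict], None]) -> None:
        self._handler = handler
        # Assumption: in-memory only. Use a durable store in a real system.
        self._seen_ids: set[str] = set()

    def process(self, event: dict) -> bool:
        """Return True if the event was handled, False if it was a duplicate."""
        event_id = event["id"]
        if event_id in self._seen_ids:
            return False
        self._handler(event)
        # Record the ID only after the handler succeeds, so failures can be retried.
        self._seen_ids.add(event_id)
        return True
```

Recording the ID only after the handler succeeds means a failed attempt can still be retried, at the cost of requiring the handler itself to tolerate partial work.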

EventBridge Rules allow you to define patterns to capture events and deliver them to specific targets. This feature is one of the most important differentiators for EventBridge. Instead of subscribing to events in a given topic, you define patterns along with a number of targets.

EventBridge Integrations

Compared to SNS or any alternative, EventBridge targets provide the largest number of AWS service integrations by far. This includes API Gateway, EC2, Firehose, Glue, Kinesis, Lambda, Step Functions, SQS and SNS (the full list is available here).

Additionally, EventBridge can integrate with any HTTP API, with support for authorization and retries. The target configuration can include transformations, so the message payload can be made to fit the structure required by the destination. Delivery to an event target is retried for up to one day in the event of failure.

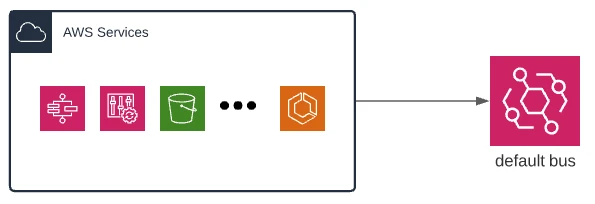

AWS native events are sent to the default bus out of the box, without any prior configuration. You can immediately create rules that match the structure of events sent by many AWS services. Examples include:

* An EC2 Spot Instance has been interrupted

* A Step Function changed state

* An object was created in an S3 bucket

* Any CloudTrail event (see the full list)

There is a “gotcha” here! The delivery guarantees for events differ between AWS services, so you should always check whether a service provides guaranteed delivery or best-effort delivery.

Partner events give you a simple integration with many of the supported SaaS partners, including Salesforce, Auth0 and PagerDuty. This can take away a lot of the heavy lifting involved with providing webhooks and outbound HTTP integrations with the associated complexity of failure handling, scaling and authentication.

EventBridge schema registry

The Schema Registry provides validation schemas for events. As you may imagine, when you have hundreds of message types flowing through your event bus, it becomes important to know what structure to expect. Here, you have the option to create the schemas directly or have them discovered by EventBridge based on the events already seen on the bus. The schema registry can then provide generated code bindings in Python, TypeScript or Java.

Other features

By default, events that don’t match any rule are not delivered or stored anywhere. By enabling the EventBridge Archive, events can be stored for a configurable number of days or indefinitely. The second part of this feature allows you to replay the archive for a specific time period.

Schedules allow you to send messages to a target based on a schedule. Instead of defining pattern-based rules, you create a schedule expression using cron syntax or a rate, such as every 1 hour.
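As an illustration of the two expression styles, the helpers below build `rate(...)` and `cron(...)` strings. The function names are hypothetical, and the cron helper only covers the simple "once a day at a fixed UTC time" case using the six-field format (minutes, hours, day of month, month, day of week, year).

```python
def rate_expression(value: int, unit: str) -> str:
    """Build a rate expression such as 'rate(1 hour)' or 'rate(5 minutes)'.

    `unit` is given in the singular: 'minute', 'hour' or 'day'.
    """
    if unit not in ("minute", "hour", "day"):
        raise ValueError(f"unsupported unit: {unit}")
    if value < 1:
        raise ValueError("value must be a positive integer")
    # The unit is singular for a value of 1 and plural otherwise.
    suffix = "" if value == 1 else "s"
    return f"rate({value} {unit}{suffix})"


def daily_cron_expression(hour_utc: int, minute: int = 0) -> str:
    """Build a cron expression that fires once a day at the given UTC time."""
    if not (0 <= hour_utc <= 23 and 0 <= minute <= 59):
        raise ValueError("hour or minute out of range")
    return f"cron({minute} {hour_utc} * * ? *)"
```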

Fun fact! If you have ever used scheduled events to trigger a Lambda, you might have used CloudWatch Events to do this. EventBridge is a rebranded and expanded version of CloudWatch Events, which originally supported schedules and AWS service events only.

Dead-letter queues give you a reliable SQS destination for events that could not be delivered to a target.

How to use EventBridge

The fundamental resource involved is an EventBridge Bus. Each account comes with a ‘default’ bus. This is where events from AWS services will arrive. You can use it for custom events too, but you might prefer to create a separate bus, since it makes it easier to secure with IAM policies.

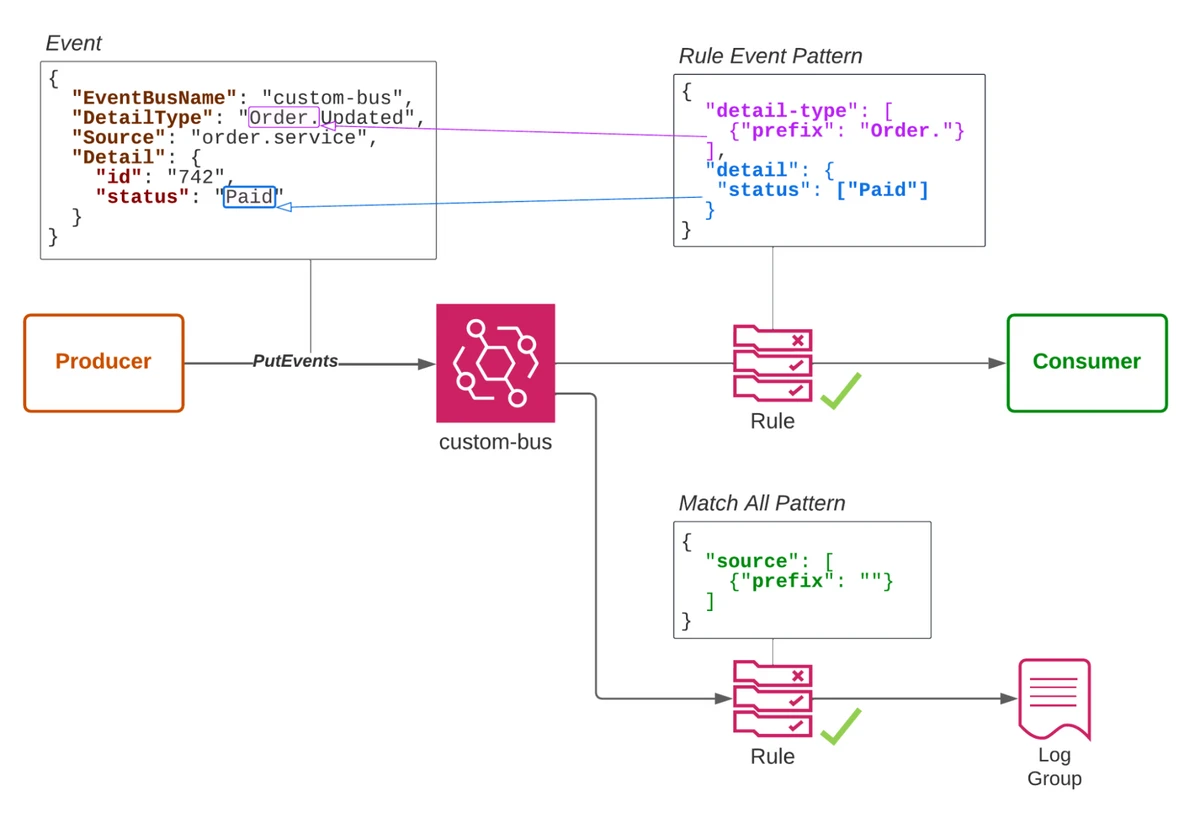

Once you have chosen a bus, you can send events immediately; you don’t need to pre-create any topic or channel. (If you only want to process AWS or Partner events, you can skip this part and jump to the section on consuming messages.) Using the PutEvents API, you can send up to 10 messages in one call. Each event can contain the following properties:

- Source: This defines the message origin. For AWS services, this might be something like ‘aws.config’ but for your custom events you could specify the application or service name, like ‘order.service’.

- DetailType: This usually denotes the event type, for example, “Order.Created”.

- EventBusName: The name of your custom bus or default.

- Detail: This is the JSON-encoded payload of the message and it can contain relevant details about your event (e.g. order ID, customer name, product name, etc.)
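The properties above map directly onto the entries passed to PutEvents. The sketch below builds entries and sends them in batches of 10. The client is passed in as a parameter (in practice it would be something like `boto3.client("events")`), and the bus name `orders` and helper names are hypothetical.

```python
import json
from typing import Any, Iterator

MAX_ENTRIES_PER_CALL = 10  # PutEvents accepts up to 10 entries per call


def build_entry(source: str, detail_type: str, detail: dict,
                bus_name: str = "default") -> dict:
    """Build a single PutEvents entry. Detail must be a JSON-encoded string."""
    return {
        "Source": source,
        "DetailType": detail_type,
        "EventBusName": bus_name,
        "Detail": json.dumps(detail),
    }


def batches(entries: list[dict], size: int = MAX_ENTRIES_PER_CALL) -> Iterator[list[dict]]:
    """Yield consecutive slices of at most `size` entries."""
    for start in range(0, len(entries), size):
        yield entries[start:start + size]


def publish(client: Any, entries: list[dict]) -> int:
    """Send all entries and return the total number reported as failed."""
    failed = 0
    for batch in batches(entries):
        response = client.put_events(Entries=batch)
        # PutEvents can partially fail, so always check FailedEntryCount.
        failed += response.get("FailedEntryCount", 0)
    return failed
```

A non-zero return value means some entries were rejected and should be inspected or retried by the producer.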

The event structure can represent a contract between the producer and the consumer. Once a consumer strictly relies on fields being available in the message, you have semantic coupling between producer and consumer. There is a balance to be struck between including as much detail as possible in the message and reducing this semantic coupling. Too little data means that consumers will likely have to go and fetch extra data from the originating system.

Too much data for your events means consumers come to rely on all that data in a certain structure, semantically coupling it to the producer and making it hard to change the structure later. A reasonable approach here is to start with less data and add properties incrementally as the need arises.

To receive an event using a pattern-based approach, you can create a rule using the AWS CLI, SDK, API, or CloudFormation. The figure above shows a custom bus with two rules. The first rule captures any orders with a paid status. It uses content filtering to match events with a detail type starting with “Order.” and with a status field that exactly matches the string “Paid”. The second rule uses an empty string prefix match on the source property to match all events in the bus. There is no other simple way to match all events, because you have to specify a condition on at least one property.
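The two rules described here could be written as the patterns below. The small `matches` function is only a simplified local illustration that supports exact values and `prefix` matching; it is not how EventBridge itself evaluates patterns, and the sample event fields are assumptions.

```python
# Rule 1: detail type starting with "Order." and a status of exactly "Paid".
PAID_ORDERS_PATTERN = {
    "detail-type": [{"prefix": "Order."}],
    "detail": {"status": ["Paid"]},
}

# Rule 2: an empty-string prefix on source matches every event on the bus.
ALL_EVENTS_PATTERN = {"source": [{"prefix": ""}]}


def _matches_value(candidate: object, actual: object) -> bool:
    """Compare one pattern candidate (exact value or prefix matcher) to a field."""
    if isinstance(candidate, dict) and "prefix" in candidate:
        return isinstance(actual, str) and actual.startswith(candidate["prefix"])
    return candidate == actual


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: every pattern key must match the event."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern, e.g. conditions on fields inside "detail".
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif not any(_matches_value(candidate, actual) for candidate in expected):
            return False
    return True
```

The patterns themselves would be JSON-encoded and supplied as the rule's event pattern when the rule is created.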

When creating these rules, you will also specify up to five targets. For example, you might send the event to a CloudWatch log group, to Firehose and to Lambda. The example shown above has one target in each rule. The paid order events are sent to a consumer, which could be an SQS queue or a Lambda function. All events are sent to a CloudWatch log group. Logging all events like this is very useful for auditing and troubleshooting.

Some destination types allow you to provide detailed configuration, for example:

- You can provide the partition key if the target is a Kinesis Data Stream

- If the target is an SQS FIFO queue, you can select the message group

- For ECS task targets, you can provide parameters for the container
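For the first two cases, the settings are expressed as extra fields on the target definition passed to the PutTargets API. The sketch below builds such definitions as plain dictionaries. The helper names, the `$.detail.orderId` path and all ARNs are placeholders, and ECS parameters are left out for brevity.

```python
def kinesis_target(target_id: str, stream_arn: str, role_arn: str,
                   partition_key_path: str = "$.detail.orderId") -> dict:
    """Target definition for a Kinesis Data Stream with a partition key
    taken from a JSON path in the event (the default path is an assumption)."""
    return {
        "Id": target_id,
        "Arn": stream_arn,
        "RoleArn": role_arn,  # role EventBridge assumes to write to the stream
        "KinesisParameters": {"PartitionKeyPath": partition_key_path},
    }


def sqs_fifo_target(target_id: str, queue_arn: str, message_group_id: str) -> dict:
    """Target definition for an SQS FIFO queue with a fixed message group."""
    return {
        "Id": target_id,
        "Arn": queue_arn,
        "SqsParameters": {"MessageGroupId": message_group_id},
    }
```

A list of these dictionaries would be passed as `Targets` in a call such as `client.put_targets(Rule=..., EventBusName=..., Targets=[...])`.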

Quotas, Limitations and Pricing

There are not many hard constraints with EventBridge. The message throughput soft limit varies per region, but you can request a quota increase of up to 100,000 transactions per second (TPS). Beyond that, you will need to talk to your AWS account manager so they can accommodate your scale.

The main consideration to look at is latency. The documentation says that latency for EventBridge delivery is “typically around half a second”. We have observed latencies that are much shorter but also some outliers that exceed this, so keep this in mind if you have strict latency requirements. You can compare this latency to SNS, which is “typically <30ms”.

As already mentioned, you have up to five targets per rule and you can create 300 rules per bus, but that is a soft limit that can be increased.

When thinking about pricing, you need to think about the number of events. At the time of writing, you are looking at paying $1 USD per million events. There are additional charges for HTTP destinations, Archive, Replay and Schema Discovery.

Integration with SQS

EventBridge has retries for up to 24 hours. Beyond that, you can configure an SQS dead-letter queue (DLQ) and put in place a system to monitor and process those messages.
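As a sketch of how this looks in configuration, a target definition can carry a retry policy and a dead-letter queue. The helper name and ARNs are hypothetical, and the bounds used here (up to 185 attempts, event age between 60 seconds and 24 hours) reflect our understanding of the limits at the time of writing, so check the current documentation.

```python
MAX_EVENT_AGE_SECONDS = 24 * 60 * 60  # 24 hours
MAX_RETRY_ATTEMPTS = 185


def target_with_dlq(target_id: str, target_arn: str, dlq_arn: str,
                    max_retry_attempts: int = MAX_RETRY_ATTEMPTS,
                    max_event_age_seconds: int = MAX_EVENT_AGE_SECONDS) -> dict:
    """Target definition with an explicit retry policy and an SQS DLQ."""
    if not 0 <= max_retry_attempts <= MAX_RETRY_ATTEMPTS:
        raise ValueError("max_retry_attempts out of range")
    if not 60 <= max_event_age_seconds <= MAX_EVENT_AGE_SECONDS:
        raise ValueError("max_event_age_seconds out of range")
    return {
        "Id": target_id,
        "Arn": target_arn,
        "RetryPolicy": {
            "MaximumRetryAttempts": max_retry_attempts,
            "MaximumEventAgeInSeconds": max_event_age_seconds,
        },
        # Events that exhaust their retries are sent to this SQS queue.
        "DeadLetterConfig": {"Arn": dlq_arn},
    }
```

The DLQ's queue policy also needs to allow EventBridge to send messages to it, otherwise failed events are dropped rather than captured.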

If you need high durability in a system with EventBridge, the consumer might use an SQS queue as a rule target. Events can then be processed from that queue, acting as a kind of load balancer so the consumers can scale as well as surviving cases where the consumer is unavailable or faulty. This is a topic we covered extensively in the SQS article from this series.
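A consumer reading from such a queue might look like the Lambda handler sketched below. It assumes the SQS message body is the unmodified EventBridge event (no input transformation) and that partial batch responses (`ReportBatchItemFailures`) are enabled on the event source mapping. `process_order_event` is a hypothetical stand-in for real business logic.

```python
import json


def process_order_event(event: dict) -> None:
    """Hypothetical business logic: reject events without an order ID."""
    if "orderId" not in event.get("detail", {}):
        raise ValueError("event is missing detail.orderId")


def handler(sqs_event: dict, context: object = None) -> dict:
    """Process each SQS record and report only the failed ones.

    Successfully processed messages are deleted from the queue, while
    failed ones become visible again and are retried later.
    """
    failures = []
    for record in sqs_event.get("Records", []):
        try:
            # The SQS body holds the JSON-encoded EventBridge event.
            process_order_event(json.loads(record["body"]))
        except Exception:
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```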

Observability and Testing

When using a fully-managed bus like EventBridge, you have to adapt to the fact that you cannot see what’s going on under the hood! If a pattern is not being matched or an event doesn’t make it to the target as you would expect, you can’t just look at an internal debug log to troubleshoot. Instead, you have to look at the metrics and tooling available to you. There are a number of important metrics to observe.

- TriggeredRules and MatchedEvents can be used to see if the rules are configured correctly and the rule patterns match as you expect.

- Invocations and, in particular, FailedInvocations show you what targets are being triggered and if any targets are not working correctly. The InvocationsSentToDlq metric will increase if EventBridge has given up retrying.

- ThrottledRules is another metric that should be watched, indicating that you may need to request a quota increase.
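These metrics live in the `AWS/Events` CloudWatch namespace. As a rough sketch, the helper below builds the parameters for a CloudWatch `get_metric_statistics` query on `FailedInvocations` for one rule. The function name and rule name are hypothetical, and depending on your setup you may need additional dimensions (for example, the bus name) to select the right series.

```python
from datetime import datetime, timedelta, timezone


def failed_invocations_query(rule_name: str, end_time: datetime,
                             minutes: int = 60) -> dict:
    """Build keyword arguments for a CloudWatch get_metric_statistics call
    that sums FailedInvocations for a rule in 5-minute buckets."""
    return {
        "Namespace": "AWS/Events",
        "MetricName": "FailedInvocations",
        "Dimensions": [{"Name": "RuleName", "Value": rule_name}],
        "StartTime": end_time - timedelta(minutes=minutes),
        "EndTime": end_time,
        "Period": 300,
        "Statistics": ["Sum"],
    }


if __name__ == "__main__":
    query = failed_invocations_query("paid-orders", datetime.now(timezone.utc))
    # With boto3 installed and credentials configured, this could be used as:
    #   boto3.client("cloudwatch").get_metric_statistics(**query)
    print(query)
```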

EventBridge provides a CLI, API and a sandbox interface for you to test patterns against a sample event.

If you have trouble finding out why an event doesn’t seem to be sent or delivered, you could create a rule with a broad pattern that sends all events to a CloudWatch Log Group, as shown in the example above. The events in this log can be used to test against the pattern.

If the pattern matches as expected, the cause is likely an IAM permissions issue. Each rule and target can have an IAM role, and the destination resource (an SQS queue or SNS topic, for example) might also have a resource-based policy that needs to allow EventBridge to deliver events.

There are some great third-party tools for interacting with EventBridge that can make the process of troubleshooting and testing events easier:

Paul Swail has compiled a very useful and detailed article on EventBridge testing which covers usage of SQS and CloudWatch logs to verify event delivery.

SNS vs EventBridge

Since EventBridge and SNS are both in the Pub-Sub/Message bus category, let’s compare their merits.

- In general, EventBridge is simpler, since you don’t have to create topics and subscriptions.

- EventBridge rules are more flexible than SNS message filters, since you can pattern match on the message payload as well as metadata attributes.

- SNS provides lower latency: typically under 30ms, compared with around 500ms for EventBridge.

- SNS supports FIFO ordering when FIFO topics are combined with SQS FIFO queues. With EventBridge, ordering is not guaranteed.

- SNS supports a larger number of consumers (12.5 million!) by default whereas EventBridge gives you a soft limit of 300 rules and a hard limit of 5 targets per rule.

- EventBridge pricing is approximately double that of SNS, depending on the features and subscriptions used.

- EventBridge supports many more target types than SNS; however, if you want to deliver email or SMS messages, SNS supports both out of the box.

In general, it’s a good idea to adopt an EventBridge-first approach. Its simplicity and flexibility will work for the majority of use cases. You can fall back to SNS if you need low latency, FIFO support or email/SMS messages for specific cases.

Summary

EventBridge is a great choice as a general-purpose event bus for any application on AWS. As long as latency and message ordering are not critical considerations, you will benefit from a lot of features, flexibility and easy integrations, without all the complexity and configuration that come with traditional message bus products and streaming services. If you want to see more about how EventBridge is used in real-world deployments, check out Sheen Brisals’ series of articles on EventBridge and his talk from AWS Community Day on YouTube.

In our AWS Bites podcast, we covered all of the main AWS event services in depth as well as a special episode where we cover the pros and cons of each. Check out the Event Services Playlist and let us know what you think in the comments section.

If you are starting to use AWS or EventBridge and you are looking for professional help, get in touch with the team at fourTheorem. We’d be happy to assist!