This is the first in a series of four posts exploring the history and development of AI. I personally find this a fascinating topic and one that I needed to research in more detail whilst co-writing my latest book: AI as a Service, available through Manning Publications here: https://www.manning.com/books/ai-as-a-service.

My aim is to explore the subject at a high level rather than to get into the technical details per se. Whilst I can’t promise to make you an AI expert through these posts, I can at least arm you with some interesting nuggets to impress at your next cocktail party!

While it is true that we have yet to achieve artificial general intelligence (machines that can reason about the world in a manner similar to humans), enormous progress has been made in the field in recent years. AI is certainly one of the hottest topics in our industry at the moment and is likely to remain so for the foreseeable future.

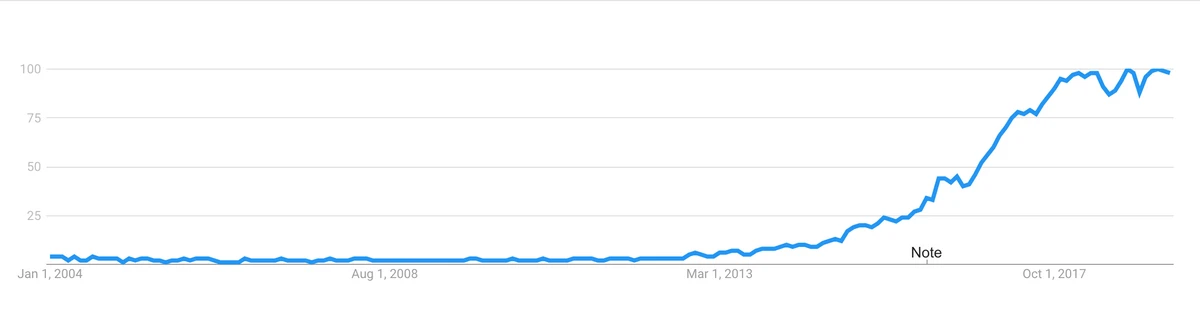

Figure 1 shows how interest in the topic, as measured by Google searches for ‘Deep Learning’, has grown over the last few years.

Figure 1 Google search trends for ‘Deep Learning’. Source.

So why the sudden interest in AI? Why are AI and machine learning increasingly in demand? To understand this, we need a basic grasp of the history of AI and of how we got to where we are today.

In this first post we examine the very early development of AI, from ancient times through the 1950s and on to the first AI winter of the 1970s.

What is AI?

Before we get started, we should attempt to define what AI is. Artificial Intelligence is a term that has grown to encompass a range of techniques and algorithmic approaches in computer science.

To many people, the term conjures up images of out-of-control killer robots, mainly due to the dystopian futures portrayed in The Matrix and Terminator movies. A more sober and sensible definition might be:

Artificial Intelligence refers to the capacity of a computer to exhibit learning and decision-making capabilities as seen in humans and other animal species.

Given this as our working definition, let’s take a look at the historical development of AI.

The Ancients

Humans have long considered the notion of creating artificial replicas of themselves, from the golden robots of Hephaestus, the Greek god of blacksmiths, to the clockwork automata created by many civilizations through the centuries. However, it was not until the 17th century that philosophers such as Leibniz and Descartes began to explore the notion that human thought could be described in a systematic, mathematical manner and could therefore perhaps be replicated by non-human machines.

You may have come across these two intellectual giants before. René Descartes is best known for the statement ‘Cogito ergo sum’ or ‘I think therefore I am’. Leibniz is perhaps best known for developing calculus independently of, and at around the same time as, Isaac Newton. However, both philosophers also explored ideas that foreshadowed AI. Descartes, in his ‘Discourse on the Method for Conducting One’s Reason Well and for Seeking the Truth in the Sciences’, considered whether machines might be able to exhibit the same faculty of reason as humans (he concluded that they could not). Leibniz, for his part, postulated a universal language of reasoning, the ‘characteristica universalis’, that might reduce all forms of argumentation to calculation.

Whilst these early ruminations trace an interesting line of thought, it took until the 19th and early 20th centuries for thinkers such as Boole and Russell to put forward more formal treatments of these ideas. These developments, coupled with the brilliant work of mathematician Kurt Gödel, led to the foundational work of Alan Turing. Turing’s key insight was that any mathematical procedure that can be expressed as a precise, step-by-step algorithm could, in principle, be carried out by a simple computing device, the so-called Turing Machine. Building on Gödel’s incompleteness results, he also showed that some well-defined problems cannot be solved by any such machine.

The Turing Machine, first described in 1936, is a hypothetical computer that reads and writes symbols on an unbounded tape according to a fixed table of rules. Despite being very simple, the machine can simulate any computer algorithm. The Turing machine ‘anticipated’ the development of microprocessors and stored-program computers. For a full description of the Turing machine see https://en.wikipedia.org/wiki/Turing_machine.
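To give a feel for just how simple the machine is, here is a minimal Python sketch of a one-tape Turing machine: a tape of symbols, a read/write head, a current state and a table of rules. The rule-table format, the blank symbol `_`, the function name `run_turing_machine` and the binary-increment rules `INCREMENT` are hypothetical choices made for illustration, not taken from Turing’s 1936 paper.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical example rule table: (state, symbol read) -> (symbol to write, head move, next state).
# Starting on the leftmost digit, these rules add 1 to a binary number written on the tape.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),      # scan right over the digits
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),  # past the last digit: step back and start adding
    ("carry", "1"): ("0", -1, "carry"),      # 1 + 1 = 0, carry the 1 leftwards
    ("carry", "0"): ("1", -1, "halt"),       # 0 + 1 = 1, no further carry
    ("carry", BLANK): ("1", -1, "halt"),     # ran off the left end: write a new leading 1
}


def run_turing_machine(rules, tape, state="start", halt="halt", max_steps=10_000):
    """Simulate a one-tape Turing machine and return the non-blank part of the tape."""
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # unbounded tape, blank by default
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        # Look up the rule for the current state and the symbol under the head.
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    used = [i for i, symbol in cells.items() if symbol != BLANK]
    if not used:
        return ""
    return "".join(cells[i] for i in range(min(used), max(used) + 1))


print(run_turing_machine(INCREMENT, "1011"))  # 11 + 1: prints 1100 (12 in binary)
```

Everything the machine ‘knows’ lives in the rule table; the loop that applies the rules never changes, only the table does.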

First Steps

From these early theoretical underpinnings, the first more formal steps towards thinking machines started in the 1940s. Early investigations in neuroscience had revealed that the brain is composed of an electro-chemical network of many individual nerve cells. Not unreasonably, early AI researchers set out to duplicate the behaviour observed in human brain cells by constructing a series of analog electronic devices. These early steps saw the construction of the world’s first artificial neural network, snappily named ‘Stochastic Neural Analog Reinforcement Calculator’ or SNARC for short.

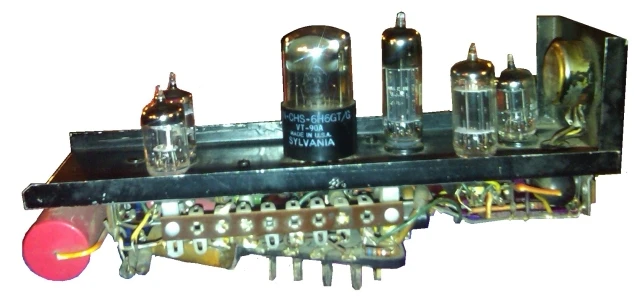

SNARC was developed by Marvin Minsky and Dean Edmonds in 1951. The system comprised a network of 40 analog neurons and simulated a rat trying to find its way out of a maze. Figure 2 shows a single neuron from the machine, which was sadly cannibalized for parts and no longer exists.

Figure 2 A single SNARC neuron. Source.

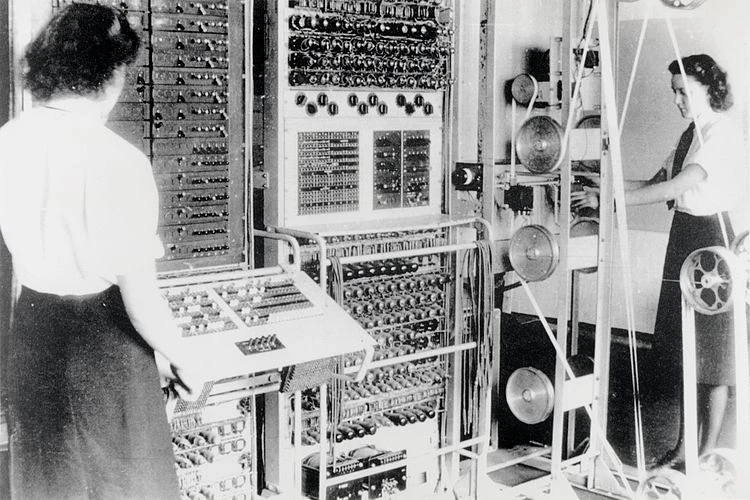

During the same period there had also been significant progress in the development of computing machines, driven by the imperative of breaking wartime German ciphers. The Bombe (used against Enigma) and Colossus (used against the Lorenz cipher) were developed for Bletchley Park in the UK by Alan Turing and Tommy Flowers respectively.

Figure 3 A Colossus Mark 2 computer circa 1944.

The Birth Of AI

During the 1950s, hopes were high that it would indeed be possible to build an artificially intelligent thinking machine, and the field of AI was formally founded at a conference held at Dartmouth College in the summer of 1956. This formal founding led to a rush of new innovation and discovery in the field, and several specialities within the discipline emerged, such as Natural Language Processing and search-based reasoning. Progress was also made on neural networks, with early perceptron-like systems such as ADALINE and MADALINE developed at Stanford in the early 1960s.

ADALINE (Adaptive Linear Element) was an early single-layer neural network developed by Bernard Widrow and Ted Hoff. MADALINE (Many ADALINE) extended the original concept into a three-layer neural network. Whilst these systems differ somewhat in how they operate from modern perceptron-based neural networks, they were nonetheless groundbreaking at the time.

This early flush of success led some researchers in the field to become overly optimistic, and many were claiming that there would be generally intelligent thinking machines within a decade. Quotes from researchers at the time include:

- H.A. Simon and A. Newell 1958: ‘within ten years a digital computer will be the world’s chess champion’

- H.A. Simon 1965: ‘machines will be capable, within twenty years, of doing any work a man can do’

- M. Minsky 1967: ‘Within a generation … the problem of creating artificial intelligence will substantially be solved’

- M. Minsky 1970: ‘In from three to eight years we will have a machine with the general intelligence of an average human being’

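Clearly these predictions were wildly optimistic! It is interesting to note that even now, in 2019, only one of these predictions has been fulfilled, and that one relates to the limited problem domain of chess: IBM’s Deep Blue defeated world champion Garry Kasparov in 1997, nearly four decades later than predicted.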

The First AI Winter

Valuable though these initial discoveries were, it became increasingly apparent as the 1970s wore on that the promise of intelligent thinking machines was not going to be realised any time soon. In retrospect, it is clear that the computing power needed to realise this vision simply was not available. Consequently, throughout the 1970s interest in AI fell away and major research funding was cut. This period became known as the first AI winter.

In the next post in this series, we will examine the false dawn of expert systems and the second AI winter.

About The Author

Peter Elger is the CEO of fourTheorem and co-author of AI as a Service – available through Manning Publications: https://www.manning.com/books/ai-as-a-service

Get in touch to share your thoughts.