Judging by the packed rooms, Machine Learning and Serverless are the two topics grabbing most of the attention at this year’s AWS Summit London. Julien Simon (Principal Evangelist, AI & ML at AWS) gave a comprehensive rundown of the machine learning capabilities of AWS.

While the adoption of machine learning technology has really taken off in the last five years, Julien reminded us that Amazon have been working in this space since their early days in online book retail.

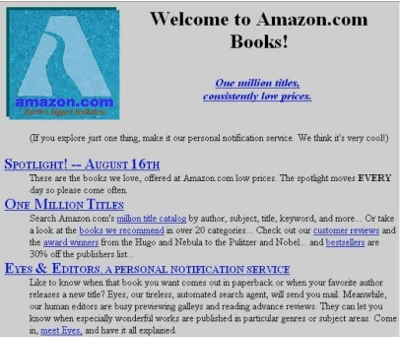

Even in 1995, personalised content was front and centre on Amazon.com. Amazon recognised early that personalised content and targeted recommendations were key to successful e-commerce. The article Two Decades of Recommender Systems at Amazon.com notes that Amazon filed a patent on its machine learning recommendation system in 1998.

Over the years, they have consistently applied ML to different areas, such as the 50,000-strong army of autonomous robots working in Amazon fulfillment centers all over the world. Other recent examples include the Amazon Echo, Prime Air (Amazon’s drone delivery service) and Amazon Go, the AI-powered cashierless store in Seattle.

The stated goal of Julien and his team is to “put machine learning in the hands of every developer and data scientist”. As he walks through each service in the AWS machine learning stack, it is easy to see why, as he reports, more AI/ML is built on AWS than anywhere else.

This stack is described in three layers – Application services, platform services and frameworks/infrastructure.

Application Services: high-level API services such as Polly and Rekognition, for applications like machine vision, language and chatbots. No ML skills are needed.

Platform Services: lower-level services for custom machine learning, for applications like cancer detection in medical images. SageMaker is an example of this.

Frameworks and Infrastructure: low-level support through GPU instances and AMIs supporting MXNet or TensorFlow.

Application Services

Rekognition

Rekognition focuses on analyzing images and videos. It supports:

- Object and scene detection for understanding what is in an image.

- Facial analysis, which locates the faces in an image. For each face detected, a bounding box is returned along with attributes such as age range, gender, whether the person is wearing glasses, and the person’s emotion. Celebrity recognition is an interesting application of this feature, which Sky TV is to use to identify celebrities attending the upcoming royal wedding.

- Face comparison, used for crowd detection or facial search: finding matching faces in a large set of captured images.

- Image moderation, which classifies whether an image has suggestive content that may make it inappropriate for a certain audience.

- Text in image detection, used for finding text such as license plates or documents.
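Here is a rough sketch of the facial analysis feature in Python. It calls Rekognition’s `detect_faces` through boto3 with `Attributes=["ALL"]` and condenses each returned face into the attributes listed above. The response field names (`FaceDetails`, `AgeRange`, `Eyeglasses`, `Emotions`) follow the DetectFaces API. The bucket and key are placeholders, and the summary format is our own invention.

```python
from typing import Any, Dict, List


def summarize_faces(response: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Condense a Rekognition DetectFaces response into one small dict per face."""
    summaries = []
    for face in response.get("FaceDetails", []):
        emotions = face.get("Emotions", [])
        # Keep only the emotion Rekognition is most confident about.
        top = max(emotions, key=lambda e: e["Confidence"])["Type"] if emotions else None
        summaries.append({
            "box": face["BoundingBox"],
            "age_range": (face["AgeRange"]["Low"], face["AgeRange"]["High"]),
            "gender": face["Gender"]["Value"],
            "glasses": face["Eyeglasses"]["Value"],
            "emotion": top,
        })
    return summaries


def detect_faces_in_s3(bucket: str, key: str) -> List[Dict[str, Any]]:
    """Run facial analysis on an image stored in S3 (requires AWS credentials)."""
    import boto3  # imported lazily so summarize_faces works without boto3 installed

    client = boto3.client("rekognition")
    response = client.detect_faces(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        Attributes=["ALL"],  # the default response omits age, glasses, emotions, etc.
    )
    return summarize_faces(response)
```

Every attribute comes back with its own confidence score. A production system would therefore usually apply a threshold on `Confidence` rather than trust each `Value` blindly.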

Rekognition for Video

Rekognition for Video, launched at re:Invent last year, brings the same recognition capabilities to live or archived video. With Rekognition for Video, you can:

- Process archived video in batch mode, or live streams using Kinesis Video Streams

- Perform activity detection, such as detecting whether a subject is running or falling

- Track objects, following and highlighting the position of a subject detected in a video
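Video analysis of archived footage is asynchronous. You start a job, wait for it to finish, then page through the results. The sketch below assumes label detection (`start_label_detection` / `get_label_detection`). It polls for completion and builds a timeline showing when each label, such as “Sunset”, appears. The client is passed in so the logic can be exercised without AWS. Polling keeps the example short; the start call also accepts a `NotificationChannel` so completion can be signalled through Amazon SNS instead.

```python
import time
from collections import defaultdict
from typing import Any, Dict, List


def wait_for_labels(client: Any, job_id: str, poll_seconds: float = 5.0) -> List[Dict[str, Any]]:
    """Poll an asynchronous Rekognition label-detection job and gather all result pages."""
    while True:
        page = client.get_label_detection(JobId=job_id, SortBy="TIMESTAMP")
        if page["JobStatus"] != "IN_PROGRESS":
            break
        time.sleep(poll_seconds)
    if page["JobStatus"] != "SUCCEEDED":
        raise RuntimeError(f"job {job_id} finished with status {page['JobStatus']}")
    labels = list(page.get("Labels", []))
    # Long videos produce paginated results; follow NextToken until it runs out.
    while page.get("NextToken"):
        page = client.get_label_detection(
            JobId=job_id, SortBy="TIMESTAMP", NextToken=page["NextToken"]
        )
        labels.extend(page.get("Labels", []))
    return labels


def label_timeline(labels: List[Dict[str, Any]], min_confidence: float = 80.0) -> Dict[str, List[int]]:
    """Map each confidently detected label name to the timestamps (ms) where it appears."""
    timeline: Dict[str, List[int]] = defaultdict(list)
    for item in labels:
        label = item["Label"]
        if label["Confidence"] >= min_confidence:
            timeline[label["Name"]].append(item["Timestamp"])
    return dict(timeline)


def analyse_video(bucket: str, key: str) -> Dict[str, List[int]]:
    """Start label detection on an S3 video and wait for the result (requires AWS credentials)."""
    import boto3

    client = boto3.client("rekognition")
    job = client.start_label_detection(Video={"S3Object": {"Bucket": bucket, "Name": key}})
    return label_timeline(wait_for_labels(client, job["JobId"]))
```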

Julien gave a nice demonstration of Rekognition for Video in action. It included detecting a sunset in a video, and detecting and tracking a person in a sample where the footage of the person was quite fuzzy. The use cases here include public safety: the City of Orlando is using Rekognition to look for missing people and persons of interest. It can also be used to detect accidents, fires or floods, all using existing video capture infrastructure.

Polly

Amazon Polly is the text-to-speech service in AWS. With it, you can:

- Pass in text in any of 25 supported languages

- Produce audio files for archiving, or stream the audio live

- Alter speech pitch and rate

- Customize the pronunciation of specific text, such as dates and phone numbers. For example, for a phone number containing 555, you might want Polly to say “triple five”. This is all achieved using SSML (Speech Synthesis Markup Language).
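Here is a minimal sketch of the “triple five” case. It assumes dash-separated numbers and that every other digit group should be read digit by digit. It builds an SSML document with Polly’s `<sub>` tag (speak an alias instead of the written text) and `<say-as interpret-as="digits">`, wrapped in `<prosody>` to set the speaking rate. The function names and the “Amy” voice choice are our own; `synthesize_speech` with `TextType="ssml"` is the standard boto3 call.

```python
from xml.sax.saxutils import escape


def phone_number_ssml(intro: str, number: str, rate: str = "medium") -> str:
    """Build SSML that reads a dash-separated phone number, saying "555" as "triple five"."""
    parts = []
    for group in number.split("-"):
        if group == "555":
            # <sub> tells Polly to speak the alias instead of the written text.
            parts.append('<sub alias="triple five">555</sub>')
        else:
            # Read any other group digit by digit.
            parts.append(f'<say-as interpret-as="digits">{escape(group)}</say-as>')
    # <prosody> controls the speaking rate (it also accepts a pitch attribute).
    return f'<speak><prosody rate="{rate}">{escape(intro)} {", ".join(parts)}</prosody></speak>'


def synthesize(ssml: str, path: str = "speech.mp3", voice: str = "Amy") -> None:
    """Render SSML to an MP3 file with Polly (requires AWS credentials)."""
    import boto3

    polly = boto3.client("polly")
    result = polly.synthesize_speech(Text=ssml, TextType="ssml", OutputFormat="mp3", VoiceId=voice)
    with open(path, "wb") as audio_file:
        audio_file.write(result["AudioStream"].read())
```

Escaping the free text matters. A stray `&` or `<` in user input would otherwise make the SSML invalid and the request would be rejected.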

It was interesting to learn that Duolingo uses Polly after A/B testing learning rates against other TTS providers: students consistently learned better when they heard the Polly-generated audio.

Translate

Amazon Translate is the real-time, deep learning-powered machine translation engine. It currently supports translation between English and six other languages, in both directions, with six further languages to be added soon.

As an example of how powerful this can be, Hotels.com uses it for 90 localised websites in 41 languages, including translating 25 million customer reviews.
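Here is a minimal sketch of review translation in the spirit of the Hotels.com example. It assumes English source text and calls boto3’s `translate_text` once per review and target language. Repeated texts are cached so they are only paid for once. The function name and caching strategy are illustrative; in real use you would pass `boto3.client("translate")` as the client.

```python
from typing import Any, Dict, List, Tuple


def translate_reviews(client: Any, reviews: List[str], targets: List[str],
                      source: str = "en") -> Dict[str, List[str]]:
    """Translate each review into every target language, preserving review order."""
    results: Dict[str, List[str]] = {}
    cache: Dict[Tuple[str, str], str] = {}
    for target in targets:
        translated = []
        for review in reviews:
            key = (review, target)
            # Skip repeat API calls for duplicate texts (e.g. short reviews like "Great stay").
            if key not in cache:
                response = client.translate_text(
                    Text=review, SourceLanguageCode=source, TargetLanguageCode=target
                )
                cache[key] = response["TranslatedText"]
            translated.append(cache[key])
        results[target] = translated
    return results
```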

Transcribe

Transcribe is the speech-to-text companion to Polly. It accepts MP3, WAV and FLAC audio files of spoken English or Spanish.

Compared with other cloud-based machine learning transcription services we have seen in the past, it supports punctuation and formatting as well as timecodes. This advanced feature set makes video captioning possible without having to jump through additional hoops. Another major benefit is its support for custom vocabulary: almost every transcription project suffers from poor accuracy on domain-specific terminology.

Transcribe works with both high-quality and low-quality audio, such as telephone recordings.
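Timecodes and punctuation are what make captioning straightforward. The sketch below is a rough illustration of that. It builds the arguments for `start_transcription_job`, optionally naming a custom vocabulary. It then turns the `results.items` list from the finished transcript JSON into SubRip (.srt) captions. It assumes the transcript layout in which word items carry string `start_time`/`end_time` values and punctuation items carry no times. The job name, media URI and vocabulary name are placeholders, and the seven-word grouping is an arbitrary choice.

```python
from typing import Any, Dict, List, Optional, Tuple

Caption = Tuple[float, float, str]


def start_job_params(job_name: str, media_uri: str, vocabulary: Optional[str] = None) -> Dict[str, Any]:
    """Arguments for transcribe.start_transcription_job, optionally with a custom vocabulary."""
    params: Dict[str, Any] = {
        "TranscriptionJobName": job_name,
        "Media": {"MediaFileUri": media_uri},
        "MediaFormat": "mp3",
        "LanguageCode": "en-US",
    }
    if vocabulary:
        params["Settings"] = {"VocabularyName": vocabulary}
    return params


def items_to_captions(items: List[Dict[str, Any]], max_words: int = 7) -> List[Caption]:
    """Group transcript items into (start, end, text) captions of up to max_words words."""
    captions: List[Caption] = []
    words: List[str] = []
    start = end = 0.0
    for item in items:
        content = item["alternatives"][0]["content"]
        if item["type"] == "punctuation":
            # Punctuation has no timestamps; glue it to the preceding word.
            if words:
                words[-1] += content
            elif captions:
                s, e, text = captions[-1]
                captions[-1] = (s, e, text + content)
            continue
        if not words:
            start = float(item["start_time"])
        end = float(item["end_time"])
        words.append(content)
        if len(words) == max_words:
            captions.append((start, end, " ".join(words)))
            words = []
    if words:
        captions.append((start, end, " ".join(words)))
    return captions


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, e.g. 01:01:01,500."""
    ms = int(round(seconds * 1000))
    hours, ms = divmod(ms, 3_600_000)
    minutes, ms = divmod(ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(captions: List[Caption]) -> str:
    """Render captions as a SubRip (.srt) subtitle file."""
    blocks = [
        f"{i}\n{srt_timestamp(s)} --> {srt_timestamp(e)}\n{text}\n"
        for i, (s, e, text) in enumerate(captions, start=1)
    ]
    return "\n".join(blocks)
```

In practice you would call `boto3.client("transcribe").start_transcription_job(**start_job_params(...))`. Once the job completes, load the transcript JSON and pass `transcript["results"]["items"]` to `items_to_captions`.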

Comprehend

Amazon Comprehend is the application service used for Natural Language Processing (NLP). Given text, it extracts information about the meaning of the text as well as specific entities mentioned within it. It can process emails, documents, tweets and Facebook posts. With one API call, it can extract entities such as the people or organizations mentioned in documents. Comprehend can also perform sentiment analysis, a useful feature for monitoring the perception of brands and topics on social media or in customer service communications.
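Brand monitoring boils down to running sentiment analysis over many short texts and tallying the results. Here is a minimal sketch built on boto3’s `batch_detect_sentiment` and `detect_entities`. It assumes a batch size of 25 documents, the per-call limit documented at the time of writing. The function names and the 0.8 entity score threshold are illustrative. In real use you would pass `boto3.client("comprehend")` as the client.

```python
from collections import Counter
from typing import Any, Dict, List


def sentiment_breakdown(client: Any, texts: List[str], language: str = "en",
                        batch_size: int = 25) -> Counter:
    """Tally Comprehend sentiment labels (POSITIVE, NEGATIVE, NEUTRAL, MIXED) over many texts."""
    counts: Counter = Counter()
    for offset in range(0, len(texts), batch_size):
        response = client.batch_detect_sentiment(
            TextList=texts[offset:offset + batch_size], LanguageCode=language
        )
        for result in response["ResultList"]:
            counts[result["Sentiment"]] += 1
        # Documents Comprehend could not process come back in ErrorList instead.
        errors = response.get("ErrorList", [])
        if errors:
            counts["ERROR"] += len(errors)
    return counts


def entities_by_type(client: Any, text: str, language: str = "en",
                     min_score: float = 0.8) -> Dict[str, List[str]]:
    """Group the entities Comprehend finds in one text by type (PERSON, ORGANIZATION, ...)."""
    response = client.detect_entities(Text=text, LanguageCode=language)
    grouped: Dict[str, List[str]] = {}
    for entity in response["Entities"]:
        if entity["Score"] >= min_score:
            grouped.setdefault(entity["Type"], []).append(entity["Text"])
    return grouped
```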

Another nifty use of Comprehend is topic modeling.

Comprehend can take a large number of documents, derive a specified number of topics and score each document against those topics. It is currently used by The Washington Post to categorize news articles. This data can then power searches that return highly relevant results.
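A topic modeling job is started with `start_topics_detection_job`, where `NumberOfTopics` sets how many topics to derive. Its results arrive as CSV files in an output archive. Here is a minimal sketch of the search side, assuming a `doc-topics.csv` with `docname`, `topic` and `proportion` columns. It finds each document’s dominant topic and lists the documents where a given topic is strong. The 0.3 threshold is an arbitrary illustration.

```python
import csv
import io
from typing import Dict, List, Tuple


def dominant_topics(doc_topics_csv: str) -> Dict[str, Tuple[int, float]]:
    """Return each document's highest-proportion topic from doc-topics.csv content."""
    best: Dict[str, Tuple[int, float]] = {}
    for row in csv.DictReader(io.StringIO(doc_topics_csv)):
        doc, topic, share = row["docname"], int(row["topic"]), float(row["proportion"])
        if doc not in best or share > best[doc][1]:
            best[doc] = (topic, share)
    return best


def documents_for_topic(doc_topics_csv: str, topic: int, min_share: float = 0.3) -> List[str]:
    """Search: documents where the given topic makes up at least min_share, strongest first."""
    hits = []
    for row in csv.DictReader(io.StringIO(doc_topics_csv)):
        if int(row["topic"]) == topic and float(row["proportion"]) >= min_share:
            hits.append((float(row["proportion"]), row["docname"]))
    return [doc for _, doc in sorted(hits, reverse=True)]
```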

Lex

Amazon Lex is the chatbot service in AWS. You define a conversation flow and the pieces of information you want to extract from the user. For example, to support hotel room bookings, you need to know where to book the room and for how many nights. These pieces of information are called “slots”. Lex can also be used to design conversational voice interfaces. The result of the conversation can then trigger a Lambda function that fulfils the request, such as performing the room booking.
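The fulfilment step can be sketched as a Lambda handler. It assumes the Lex (V1) event format, where slot values arrive as strings under `currentIntent.slots`, and the `dialogAction` “Close” response that ends the conversation. The slot names `Location` and `Nights` and the `book_room` backend are hypothetical.

```python
def close(message: str, fulfilled: bool = True) -> dict:
    """Build a Lex (V1) 'Close' response that ends the conversation."""
    return {
        "dialogAction": {
            "type": "Close",
            "fulfillmentState": "Fulfilled" if fulfilled else "Failed",
            "message": {"contentType": "PlainText", "content": message},
        }
    }


def book_room(location: str, nights: int) -> str:
    """Hypothetical booking backend; returns a fake confirmation reference."""
    return f"{location[:3].upper()}-{nights}N"


def lambda_handler(event, context):
    """Fulfil a hotel booking once Lex has collected the (hypothetical) slots."""
    slots = event["currentIntent"]["slots"]
    location = slots.get("Location")
    nights = int(slots.get("Nights") or 0)  # Lex passes slot values as strings
    if not location or nights < 1:
        return close("Sorry, I need a city and at least one night to book a room.", fulfilled=False)
    reference = book_room(location, nights)
    return close(f"Booked {nights} night(s) in {location}. Your reference is {reference}.")
```

A fuller bot would typically validate slots in a dialog code hook and ask the user again for a missing value, rather than failing at fulfilment time.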

Julien described the case study of Liberty Mutual, a US insurance giant that has used Lex to build a bot to generate insurance quotes.

Platform Services

AWS naturally offers fewer platform services than application services. These services are, however, extremely powerful and customisable for bespoke machine learning jobs.

Elastic Map-Reduce (EMR)

EMR, Amazon’s Spark and Hadoop service, is still widely used for data processing by many customers. Julien described the example of FINRA, a primary regulator for stock brokers in the US. FINRA ingests large volumes of trades and runs hundreds of surveillance algorithms over them. The processing happens at night, when the market is closed, and they use the elasticity of EMR to fire up tens of thousands of nodes.
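The nightly-burst pattern maps onto a transient EMR cluster. The cluster is created with the night’s work as a step and shuts itself down when the step finishes. Here is a minimal sketch of the `run_job_flow` arguments for such a cluster. FINRA’s actual configuration was not described, so the cluster name, instance types, release label and script location are placeholders. The default EMR roles are assumed to exist in the account.

```python
from typing import Any, Dict


def nightly_cluster_request(core_nodes: int, script_uri: str, log_uri: str) -> Dict[str, Any]:
    """Arguments for emr.run_job_flow: a transient Spark cluster that terminates after its step."""
    if core_nodes < 1:
        raise ValueError("need at least one core node")
    return {
        "Name": "nightly-trade-surveillance",  # hypothetical cluster name
        "ReleaseLabel": "emr-6.10.0",          # placeholder release label
        "LogUri": log_uri,
        "Applications": [{"Name": "Spark"}],
        "Instances": {
            "InstanceGroups": [
                {"Name": "primary", "InstanceRole": "MASTER",
                 "InstanceType": "m5.xlarge", "InstanceCount": 1},
                {"Name": "core", "InstanceRole": "CORE",
                 "InstanceType": "m5.xlarge", "InstanceCount": core_nodes},
            ],
            # Shut the cluster down once the step finishes, so you only pay for the night's work.
            "KeepJobFlowAliveWhenNoSteps": False,
        },
        "Steps": [{
            "Name": "surveillance",
            "ActionOnFailure": "TERMINATE_CLUSTER",
            "HadoopJarStep": {"Jar": "command-runner.jar", "Args": ["spark-submit", script_uri]},
        }],
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }


def launch(core_nodes: int, script_uri: str, log_uri: str) -> str:
    """Start the cluster and return its ID (requires AWS credentials and the default EMR roles)."""
    import boto3

    emr = boto3.client("emr")
    return emr.run_job_flow(**nightly_cluster_request(core_nodes, script_uri, log_uri))["JobFlowId"]
```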

SageMaker

The exciting recent arrival in this family is SageMaker, an end-to-end machine learning environment with zero setup. Its pay-by-the-second model is hugely beneficial, as you never have to overpay for training. A single API call can bring up more than 50 instances if you need them. It is fully managed, scales automatically and allows you to focus on the machine learning job.
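To illustrate the single-API-call point, here is a sketch of the arguments to boto3’s `create_training_job`. `InstanceCount` is all it takes to ask for 50 training instances. The job name, role ARN, S3 paths and image URI are placeholders; for a built-in algorithm the image URI is region-specific. The default instance type and limits are arbitrary choices.

```python
from typing import Any, Dict, Optional


def training_job_request(job_name: str, image_uri: str, role_arn: str, train_s3: str,
                         output_s3: str, instance_count: int = 1,
                         instance_type: str = "ml.c5.xlarge",
                         hyperparameters: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Arguments for sagemaker.create_training_job (all names and paths are placeholders)."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3},
        # One request, many machines: SageMaker provisions and tears down the whole fleet.
        "ResourceConfig": {"InstanceType": instance_type, "InstanceCount": instance_count,
                           "VolumeSizeInGB": 30},
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
        # SageMaker expects every hyperparameter value as a string.
        "HyperParameters": {k: str(v) for k, v in (hyperparameters or {}).items()},
    }
```

Submitting it is then `boto3.client("sagemaker").create_training_job(**training_job_request(...))`. Billing stops when the job ends.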

A neat feature of SageMaker is that it ships with built-in algorithms for a multitude of machine learning jobs.

It supports the popular Python machine learning libraries, and has full support for TensorFlow and MXNet.

SageMaker provides Jupyter notebook instances for experimentation and development with MXNet and TensorFlow built-in. As Julien said, it’s clearly the easiest way to quickly provide notebooks to a team of 50 people in your company.

An exciting development is that SageMaker now has hyperparameter optimization in preview mode. This feature uses machine learning to recommend the best set of hyperparameters for your machine learning algorithm.
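A tuning job is described by a configuration object. It names the metric to optimise, the search strategy, how many training jobs to run, and the ranges to search. Here is a minimal sketch of that `HyperParameterTuningJobConfig` for `create_hyper_parameter_tuning_job`, using the Bayesian strategy. The `eta`/`max_depth` ranges and the `validation:rmse` metric assume the built-in XGBoost algorithm and are purely illustrative.

```python
from typing import Any, Dict, Tuple, Union

Number = Union[int, float]


def tuning_config(metric_name: str, ranges: Dict[str, Tuple[Number, Number]],
                  max_jobs: int = 20, max_parallel: int = 2,
                  minimize: bool = True) -> Dict[str, Any]:
    """Build HyperParameterTuningJobConfig for sagemaker.create_hyper_parameter_tuning_job."""
    if max_parallel > max_jobs:
        raise ValueError("max_parallel cannot exceed max_jobs")
    continuous, integer = [], []
    for name, (low, high) in ranges.items():
        entry = {"Name": name, "MinValue": str(low), "MaxValue": str(high)}
        # Integer bounds become an integer range; anything else is searched continuously.
        if isinstance(low, int) and isinstance(high, int):
            integer.append(entry)
        else:
            continuous.append(entry)
    return {
        "Strategy": "Bayesian",  # uses results of earlier trials to choose the next ones
        "HyperParameterTuningJobObjective": {
            "Type": "Minimize" if minimize else "Maximize",
            "MetricName": metric_name,
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        "ParameterRanges": {
            "ContinuousParameterRanges": continuous,
            "IntegerParameterRanges": integer,
            "CategoricalParameterRanges": [],
        },
    }
```

The config is passed alongside a `TrainingJobDefinition` that describes each trial’s image, data and instances. It takes much the same shape as a single training job, minus the hyperparameters being tuned.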

When it comes to deployment, SageMaker allows you to import existing models from other sources, export a model, or deploy it directly to a production-ready, scalable HTTP endpoint.
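Deploying to an HTTP endpoint takes three calls. `create_model` pairs a container image with a model artifact in S3, which can come from outside SageMaker. `create_endpoint_config` chooses the instances. `create_endpoint` brings the endpoint up. Predictions then go through the separate `sagemaker-runtime` client. Here is a minimal sketch, assuming a model that accepts CSV input; the names, instance type and role are placeholders. Clients are injectable so the flow can be tested offline.

```python
from typing import Any, List, Optional


def deploy(sm: Any, name: str, image_uri: str, model_data_url: str, role_arn: str,
           instance_type: str = "ml.m5.large", instance_count: int = 1) -> str:
    """Register a model artifact and expose it behind an HTTPS endpoint."""
    # 1. Model = container image + model artifact in S3.
    sm.create_model(
        ModelName=name,
        PrimaryContainer={"Image": image_uri, "ModelDataUrl": model_data_url},
        ExecutionRoleArn=role_arn,
    )
    # 2. Endpoint configuration = which model runs on which (and how many) instances.
    sm.create_endpoint_config(
        EndpointConfigName=name,
        ProductionVariants=[{
            "VariantName": "primary",
            "ModelName": name,
            "InitialInstanceCount": instance_count,
            "InstanceType": instance_type,
        }],
    )
    # 3. The endpoint itself; creation is asynchronous.
    sm.create_endpoint(EndpointName=name, EndpointConfigName=name)
    return name


def predict(endpoint_name: str, rows: List[List[float]], runtime: Optional[Any] = None) -> str:
    """Send CSV rows to a deployed endpoint and return the raw response text."""
    if runtime is None:
        import boto3
        runtime = boto3.client("sagemaker-runtime")
    body = "\n".join(",".join(str(v) for v in row) for row in rows)
    response = runtime.invoke_endpoint(EndpointName=endpoint_name, ContentType="text/csv", Body=body)
    return response["Body"].read().decode("utf-8")
```

Because endpoint creation is asynchronous, wait for the endpoint to reach `InService` before calling `predict`. For example, use `sm.get_waiter("endpoint_in_service").wait(EndpointName=name)`.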

The clear message from Julien on SageMaker is that it allows you to focus on your machine learning application, not on the plumbing.

EC2 Instances

The latest generation of compute-optimised instances is C5, based on the Intel Skylake architecture. Skylake includes AVX-512, a 512-bit vector instruction set that makes a significant difference to the linear algebra operations required for machine learning.
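A back-of-the-envelope calculation shows why wider vectors matter. It assumes 32-bit single-precision floats and fused multiply-add (FMA) instructions, which count as two floating-point operations per lane. On that basis, one AVX-512 instruction does twice the work of a 256-bit AVX2 instruction:

```latex
\begin{aligned}
\text{AVX-512: } 512 / 32 &= 16 \text{ lanes}, & 16 \times 2 &= 32 \text{ FLOPs per FMA instruction} \\
\text{AVX2: } 256 / 32 &= 8 \text{ lanes}, & 8 \times 2 &= 16 \text{ FLOPs per FMA instruction}
\end{aligned}
```

Real-world speedups are smaller than this ratio suggests. They also depend on clock speed, memory bandwidth and how many FMA units each core has.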

The P3 family of GPU instances uses NVIDIA Tesla V100 GPUs. The largest P3 instance packs eight GPUs into one server and delivers up to 1 PetaFLOPS of mixed-precision computation using 5,120 Tensor Cores.
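The headline figures can be checked with simple arithmetic. This assumes NVIDIA’s published peak numbers for the V100: roughly 125 TFLOPS of mixed-precision Tensor Core throughput and 640 Tensor Cores per GPU.

```latex
\begin{aligned}
8 \text{ GPUs} \times 125 \text{ TFLOPS} &= 1000 \text{ TFLOPS} = 1 \text{ PFLOPS} \\
8 \text{ GPUs} \times 640 \text{ Tensor Cores} &= 5120 \text{ Tensor Cores}
\end{aligned}
```

These are theoretical peaks. Each V100 also has 5,120 CUDA cores, so quoted “core” counts vary depending on which kind of core is meant.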

Frameworks and Infrastructure

The lowest level in the AWS ML stack covers EC2 instances and Amazon Machine Images (AMIs).

Deep Learning AMIs

Amazon’s Deep Learning AMIs come preinstalled with everything you need for machine learning and data science, including NVIDIA drivers, Anaconda and deep learning frameworks.

If you are interested in how fourTheorem can help you with Machine Learning on AWS, DevOps, cloud migration and architecture, please contact us and we’ll be happy to help.