Python is one of the two most popular languages for developing AWS Lambda Functions, according to the 2023 Datadog State of Serverless Report. When it comes to data science, statistics and machine learning workloads, Python is the undisputed leader. Yet a frustrating limitation has made it difficult, and sometimes impossible, to deploy certain data science workloads in AWS Lambda: the 250 MB package size limit is at odds with the heavy nature of Python data science modules like NumPy and Pandas.

This post dives deep into the problem, gives multiple options for addressing it, and presents performance benchmarks for these workloads. By the end of this article, you will see that, while there are some trade-offs to consider, container image packaging can solve this problem and even provide superior performance! You can also check out the complete source code for our analysis on GitHub. The topics discussed are primarily aimed at Python developers but should be of interest to anyone thinking about AWS Lambda performance. Let’s dive in!

Why run Python Data Science on Lambda?

There are plenty of options for running data science on AWS, including AWS Glue and EMR as well as Notebook options in SageMaker, Glue and EMR. In addition, you can use any compute service like EC2 or ECS. Lambda is best suited to two classes of workloads: those that have an infrequent or bursty execution pattern, and those that need a lot of concurrency very quickly. Lambda executions can start faster than any alternative (less than one second) and you can run thousands and even tens of thousands of concurrent tasks in seconds. Unlike distributed data science frameworks like Spark, Ray and Dask, Lambda is not suitable for highly-coupled executions, where concurrent processes need to communicate with each other or through a central node for orchestration or to shuffle data around. Instead, Lambda function executions are isolated and highly parallelisable. This can be a great fit for many use cases:

- Data analytics for real-time data feeds from IoT devices, database CDC (change data capture), or Webhooks

- Performing event-driven ETL, data cleansing and other transformation on data as it arrives in S3

- Machine learning inference and statistical analysis of tabular or image data

- Automated reporting to aggregate data and drive reports and dashboards

It’s also important to note that Lambda Functions are well suited to workloads that are integrated with other components on AWS, like queues, event buses, data producers, Step Functions and APIs. If your workload is an ad-hoc workload that is run by analysts in isolation, you’re probably better off using a Notebook/JupyterLab environment or using an orchestrator like Airflow or Dagster. Lambda Functions can run for up to 15 minutes and use up to 10GB of RAM. If you have long-running or memory-hungry analytics, you’ll need to do a bit more setup and leverage something like Fargate or App Runner.

If Lambda does suit your workload, you can avoid a lot of infrastructure setup, avoid paying when you are not using it, and get the kind of scalability that is otherwise rare in commodity cloud computing.

Facing Size Limits

Let’s assume a typical Python data science workload imports the following packages. The sizes below were measured by pip-installing the latest version of each package on Linux.

| Package | Size |
|---|---|
| boto3 | 1.5M |
| aws_lambda_powertools | 4.6M |
| botocore | 24M |
| numpy | 70M |
| pandas | 62M |
| pyarrow | 125M |
| Total | 287M |

The total storage requirement (287M) is already in excess of Lambda’s limit of 250M for all unzipped packages. While your use case may not require these exact packages, it’s likely you will have additional packages, either from internal company modules or other third-party modules not included here, like scikit-learn (46M), scipy (86M) and matplotlib (29M).
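
To repeat this kind of measurement for your own dependencies, a minimal sketch along the following lines may help. The helper names `dir_size_mb` and `fits_in_zip_package` are hypothetical, and the sketch assumes that sizes are counted in units of 1024 × 1024 bytes, which is how `du`-style "M" figures are usually reported.

```python
import os

# Unzipped limit for ZIP packages (including layers), as discussed above.
LAMBDA_ZIP_LIMIT_MB = 250
BYTES_PER_MB = 1024 * 1024  # assumption: "M" means mebibytes, as in du -h


def dir_size_mb(path: str) -> float:
    """Return the total size of all regular files under `path`, in MB."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = os.path.join(root, name)
            if not os.path.islink(file_path):  # skip symlinks to avoid double counting
                total += os.path.getsize(file_path)
    return total / BYTES_PER_MB


def fits_in_zip_package(sizes_mb: dict, limit_mb: float = LAMBDA_ZIP_LIMIT_MB) -> bool:
    """Check whether the summed package sizes stay within the ZIP limit."""
    return sum(sizes_mb.values()) <= limit_mb


# Package sizes taken from the table above.
sizes = {
    "boto3": 1.5,
    "aws_lambda_powertools": 4.6,
    "botocore": 24,
    "numpy": 70,
    "pandas": 62,
    "pyarrow": 125,
}
print(f"total: {sum(sizes.values()):.1f} MB, fits: {fits_in_zip_package(sizes)}")
```

Pointing `dir_size_mb` at the target directory of a `pip install --target` run gives a quick pre-deployment check before a build fails on the size limit.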

The challenge of fitting heavy dependencies into Lambda ZIP packages is frequently encountered. Luckily, there are a few solutions.

- Lambda supports two methods to package and deploy code and dependencies – ZIP and Container Images. Using container image packaging allows you to take advantage of a 10GB package size, 40 times greater than allowed for ZIP packaged functions.

- Strip non-essential parts of packages before deployment. This includes stripping debug symbols from shared libraries (`.so`), removing unit tests and documentation, and removing the `.pyc` precompiled bytecode. Implementation varies depending on the tooling you use to deploy Lambda Functions:

  - Serverless Framework users can use the serverless-python-requirements or the sls-py plugin to do this for you.

  - AWS SAM has no built-in support for stripping, but you can either remove and strip files in your deployment script after the `sam build` and before `sam package`, or you can use a Makefile builder as described here.

  - CDK allows you to use custom build commands to bundle the assets, where you can perform any stripping of dependencies. We provide an example here.

- Use pre-built, stripped Lambda Layers for dependencies. Lambda Layers are a method for you to package common libraries together for reuse across multiple functions. They don’t solve the problem per se, since they don’t give you any increase in the storage limit: the 250MB limit applies to the unzipped total of all ZIP packages including layers. However, layer providers often go to the trouble of stripping and minimising packages so you don’t have to. The AWS SDK for Pandas project provides a single layer that includes Pandas, NumPy, PyArrow and boto3. Taking a look at the build script for this layer shows how PyArrow is built from source with non-essential options turned off and all packages are stripped of debug symbols and precompiled bytecode.
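
The file-removal part of the stripping option above can be sketched in a few lines of Python. This is an illustration and not the approach taken by any of the tools mentioned: the function name `strip_site_packages` and the set of directory names are assumptions, and some packages do import from their own test directories, so check the result against your workload. Stripping debug symbols from `.so` files needs an external tool and is not covered here.

```python
import shutil
from pathlib import Path

# Assumed set of directory names that are safe to drop; verify for your dependencies.
REMOVABLE_DIRS = {"__pycache__", "tests", "test", "docs"}


def strip_site_packages(root: str) -> int:
    """Delete bytecode, test and doc directories under `root`; return bytes freed."""
    freed = 0
    root_path = Path(root)
    # Collect candidates first so the tree is not mutated while it is being walked.
    candidates = [
        p for p in root_path.rglob("*") if p.is_dir() and p.name in REMOVABLE_DIRS
    ]
    for directory in candidates:
        if not directory.exists():  # already removed along with a parent directory
            continue
        freed += sum(f.stat().st_size for f in directory.rglob("*") if f.is_file())
        shutil.rmtree(directory)
    # Remove any stray compiled bytecode left outside __pycache__ directories.
    for pyc in list(root_path.rglob("*.pyc")):
        freed += pyc.stat().st_size
        pyc.unlink()
    return freed
```

Running it against the build output directory before zipping reports how many bytes were reclaimed, which makes it easy to see whether stripping alone gets you under the limit.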

Pros and Cons of Lambda Packaging Methods

Each deployment method has tradeoffs, so let’s clarify the pros and cons so you can make the best decision for your context.

ZIP Packaging Benefits and Limits

If possible, ZIP packaging is the recommended way to deploy Lambda Functions. The main reason for this is that it is the only method to ensure that AWS remains responsible for the maintenance and security of the runtime. You are responsible for maintenance and security of the code and dependencies you deploy, but AWS remains in charge of the operating system and the Python runtime.

On the other hand, ZIP-based deployment has a few drawbacks.

- The 250MB unzipped package size limit we already mentioned.

- Tooling that targets the ZIP format usually does not have an easy way to rebuild partial changes when you are deploying frequently in a development environment, so this approach can be slower than image-based deployment for developer feedback. Tools like sls-py and the serverless-python-requirements plugin for the Serverless Framework address this by putting dependencies into layers.

Another trick to squeeze more into the 250MB is to double zip your packages and let Python load them using the zip imports feature. This double-compression method may increase the packaging and cold start time of your functions, but it is worth measuring for your use case. We did not evaluate this method in our benchmarks.
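
As a rough illustration of the zip imports feature, the sketch below builds a small archive and imports a module directly from it. The module name `zipped_demo_module` and the helper functions are hypothetical. One caveat we are sure of: Python's zipimport cannot load compiled extension modules (`.so` files) from an archive, so packages such as NumPy and Pandas have to be extracted to disk (for example under `/tmp`) before they can be imported, which is part of the cold start cost mentioned above.

```python
import importlib
import sys
import tempfile
import zipfile
from pathlib import Path


def build_zipped_package(zip_path: Path, module_name: str, source: str) -> None:
    """Write a single-module archive, standing in for a zipped dependency bundle."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr(f"{module_name}.py", source)


def import_from_zip(zip_path: Path, module_name: str):
    """Put the archive on sys.path so the zipimport machinery can load from it."""
    if str(zip_path) not in sys.path:
        sys.path.insert(0, str(zip_path))
    importlib.invalidate_caches()
    return importlib.import_module(module_name)


workdir = Path(tempfile.mkdtemp())
archive = workdir / "deps.zip"
build_zipped_package(archive, "zipped_demo_module", "ANSWER = 42\n")
demo = import_from_zip(archive, "zipped_demo_module")
print(demo.ANSWER, demo.__file__)
```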

Using Lambda Layers for Deployment Optimisation

Using Lambda Layers along with ZIP-packaged deployment can speed up deployment when you are only changing the function’s code and not its dependencies. If underlying module dependencies haven’t changed, the layer does not have to be redeployed. This can result in a much faster developer feedback cycle. Deployment time optimisation is the primary advantage of Lambda Layers. For Python data science projects, Layers have the additional benefit of allowing you to use minimised modules provided by a third party. We have already mentioned the optimised build of AWS SDK for Pandas, where AWS and open source contributors have already gone to the trouble of bundling stripped-down versions of Pandas, NumPy and PyArrow. Be cautious of the tradeoff here, however. Third-party layers pin specific versions of these dependencies, and they may not be the versions that you would choose. You may also have to wait for the layer provider to upgrade to the latest version. It is also unfortunate that Lambda Layers have no semantic versioning support, a feature that is widely adopted for managing dependency versions in almost every popular package manager.

Container Images to the Rescue?

Container image packaging gives you more control over the full set of deployed artefacts, but leaves you with more maintenance and security responsibility. Even though you are using container images, you are still running the code in AWS Lambda. Lambda is not a fully-fledged OCI-compatible container environment like ECS, Fargate or Kubernetes. Container standards are only used by AWS Lambda for deployment, not for execution. A key advantage of image deployments is the use of mature container image build and deployment tools, such as Docker and its successors like Podman and Amazon Finch. Container images are composed of Layers, and the build and deployment process prevents layers from being rebuilt if their contents have not changed. This benefit can be realised further by using shared base images across teams. These optimisations make the development and operations experience better for the many users already familiar with this ecosystem. A shared base image also allows you to have clearly pinned versions of dependencies. Dependency version pinning can be critical in the data science world to ensure consistency and accuracy when you are producing numerical results.

The fact that you are taking on a bigger chunk of the shared responsibility is less of a factor if you are already making significant use of containers elsewhere and need to invest in container image security anyway. The 10GB deployment size of containers is a big advantage. It easily allows us to ship our heavy data science modules with room to spare for model data and more if required. Be aware that you are charged for all cold start time in image packaged functions. This is not the case for cold start time for ZIP packaged functions unless you are using a custom runtime, a case not covered here.

We frequently hear from customers who resist container image deployment because of a perceived performance disadvantage. The story goes that Lambda Cold Starts are significantly worse with container images than with ZIP packages. The hypothesis makes intuitive sense if you imagine the work required to deploy 10GB of code instead of 250MB. However, we decided to put this to the test and benchmark cold start performance of a sample data science Lambda Function in Python using three packaging methods: ZIP, ZIP with Layers and Container Images.

Measuring Cold Starts

When a Lambda Function executes, it runs in a sandbox, a single execution of the function that will serve a single request at a time. If a request arrives and there is no existing, operational sandbox ready to fulfil the request, the Lambda service must start a new sandbox and load your code, including anything declared outside the handler function block itself. This all occurs in what is known as either the INIT phase or the Cold Start phase. If this phase takes significantly longer than the execution time of your handler, optimising its performance can be important. This is certainly not the case for every workload. Many asynchronous, background processing jobs will not be impacted in any meaningful way by cold starts. Even for latency-sensitive, synchronous requests, cold starts can be a small proportion of executions. Should you care about cold starts? As always, it depends. It’s always a good idea to deploy your workload, test it under meaningful load, and measure. Any time spent optimising cold starts before you do this falls under the category of Premature Optimisation, famously and hyperbolically classified by Donald Knuth as the “root of all evil”. Knuth also warned against complacency in the area of the “critical 3%”, so let’s measure a sufficiently illustrative example so you can focus on the 97%!
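
To make the split between the INIT phase and the handler concrete, here is a minimal sketch of a handler module. It is a hypothetical example, not the benchmark function: the names `_is_cold_start` and `_INIT_SECONDS` are our own, and the placeholder comment marks where heavy imports would normally sit.

```python
import time

# Module-level code runs once per sandbox, during the INIT (cold start) phase.
_INIT_STARTED = time.perf_counter()
_is_cold_start = True
# ... heavy imports and client creation would normally go here ...
_INIT_SECONDS = time.perf_counter() - _INIT_STARTED


def handler(event, context):
    """Report whether this invocation is the first one served by the sandbox."""
    global _is_cold_start
    cold = _is_cold_start
    _is_cold_start = False  # every later invocation in this sandbox is warm
    return {"cold_start": cold, "init_seconds": _INIT_SECONDS if cold else None}
```

Only the first invocation in a sandbox sees `cold_start` set to true; anything placed at module level is paid for once and then reused by warm invocations.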

The methodology used to benchmark cold starts for different packaging methods was as follows.

- Create a Lambda Function for each packaging method using AWS CDK.

- Add module load time instrumentation to the function’s code. This allows us to measure the load time for each Python dependency and understand its impact on overall cold start performance.

- Create four different copies of each function with varying memory configurations.

- Deploy the function to an AWS region where the workload has never been deployed before, our “cleanroom environment”, mitigating against any caching side-effects from previous runs.

- Execute each function 2,000 times in an asynchronous fashion, resulting in a mix of cold and warm starts for each.

- After a period of hours, run the same workload again.

- Plot the cold start times recorded in the `REPORT` lines of every Lambda Function’s logs, where the `InitDuration` is recorded with millisecond granularity. `InitDuration` is only present in the case of cold starts, so warm start invocations are not picked up. In keeping with the theme, this analysis is performed using Pandas and Matplotlib in a Jupyter Lab environment.

All code used in this exercise, along with instructions for deployment and running is available on GitHub. The repository includes a Jupyter Notebook for generating these visualisations as well as a complementary CloudWatch dashboard with many other useful visualisations.
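
The log extraction step can be approximated with the standard library alone. The sketch below is not the repository's code. It assumes the raw `REPORT` line labels the field as `Init Duration: <value> ms`, and the sample lines use made-up values purely for illustration.

```python
import re
from typing import Iterable, List, Optional

# Assumed label in raw REPORT lines; adjust if your log format differs.
INIT_DURATION_RE = re.compile(r"Init Duration: (?P<ms>\d+(?:\.\d+)?) ms")


def init_duration_ms(report_line: str) -> Optional[float]:
    """Return the cold start duration in ms, or None for warm invocations."""
    match = INIT_DURATION_RE.search(report_line)
    return float(match.group("ms")) if match else None


def cold_start_durations(lines: Iterable[str]) -> List[float]:
    """Keep only REPORT lines that carry an init duration."""
    durations = []
    for line in lines:
        if line.startswith("REPORT"):
            value = init_duration_ms(line)
            if value is not None:
                durations.append(value)
    return durations


# Illustrative log lines with made-up values, not measurements from this article.
sample = [
    "REPORT RequestId: 1111\tDuration: 101.20 ms\tBilled Duration: 102 ms\t"
    "Memory Size: 1024 MB\tMax Memory Used: 210 MB\tInit Duration: 2950.40 ms",
    "REPORT RequestId: 2222\tDuration: 98.70 ms\tBilled Duration: 99 ms\t"
    "Memory Size: 1024 MB\tMax Memory Used: 210 MB",
]
print(cold_start_durations(sample))
```

The resulting list of durations can then be loaded into a Pandas DataFrame for plotting.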

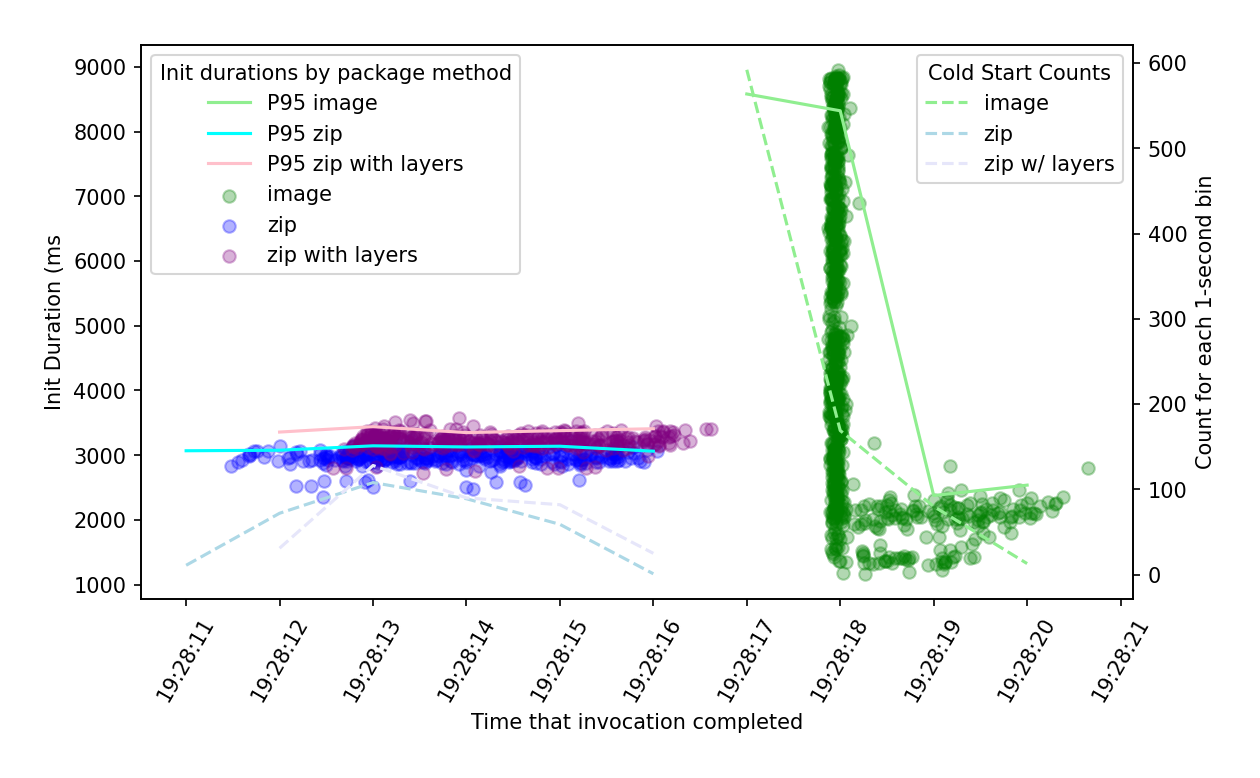

Initial Invocations

In the first 2,000 executions of each function, we see a remarkable difference between image-packaged and ZIP-packaged cold starts. In fact, all ZIP-packaged function executions were complete before the first image-packaged function was warm. 95% of image-based functions took up to 9 seconds to warm up. Ouch! It’s no wonder that there’s a common perception that cold starts are worse with image deployments. It’s interesting to see that, while ZIP-based functions using layers were much closer in performance to those not using layers than to image-based functions, the cold start is about 10% worse with the usage of layers in this case.

Measuring performance with a second batch

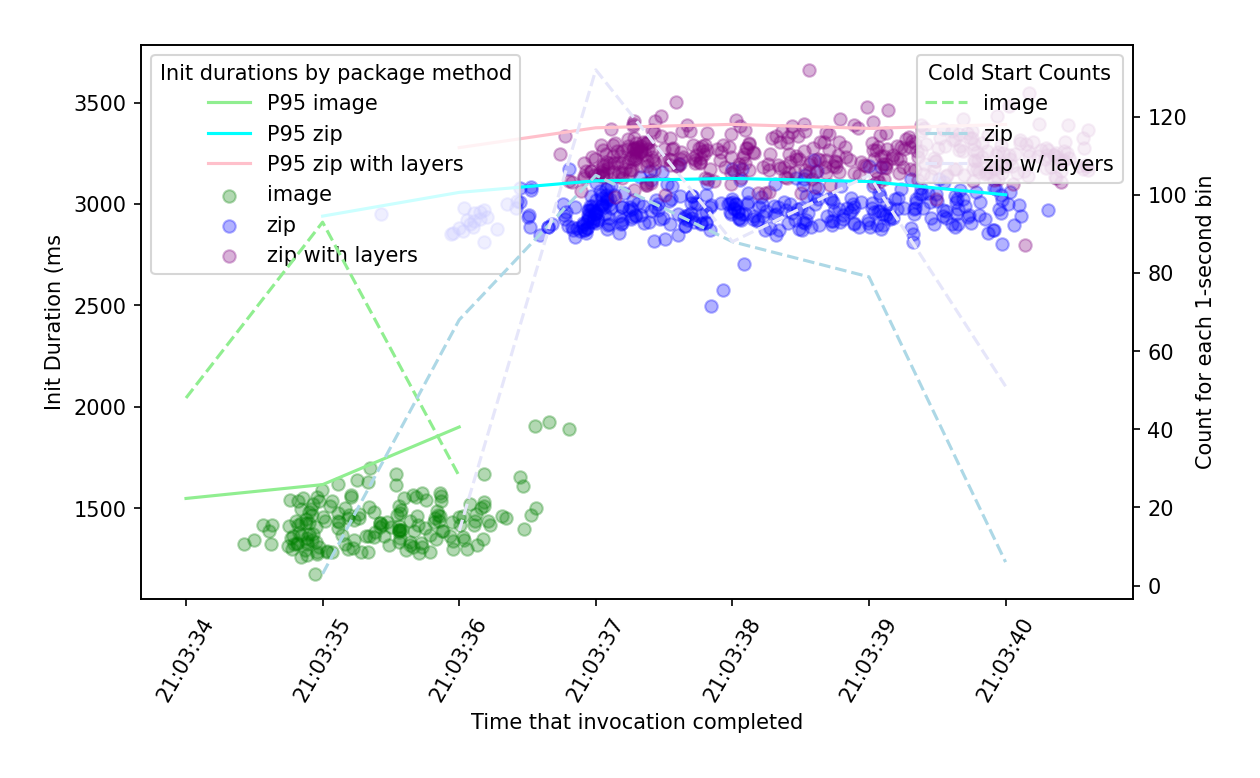

90 minutes later, we try again.

Wait, what? The picture has completely flipped! ZIP-based functions exhibit a cold start similar to the previous plot. Image-based functions, however, have jumped way down from the 9 second range to sub-2 seconds!

Let’s sleep on it and try again in the morning. A fresh start and a well-rested mind will clear this up. 😴

Sanity checking our results with a third batch

Good morning ☀️ It’s now the day after, and we have had our morning coffee. Ah yes, that’s the stuff.

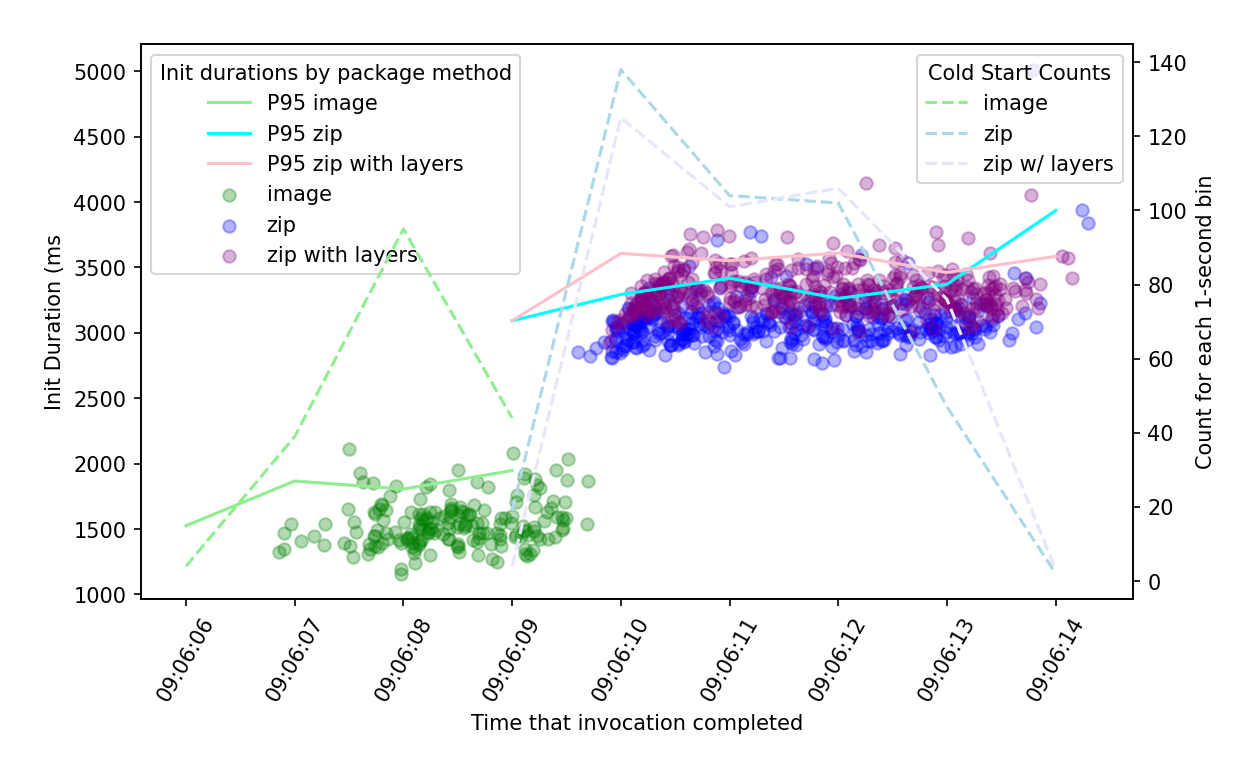

Let’s trigger another 2,000 fresh executions – our third run.

A day after we started benchmarking, we are still witnessing sub-2-second cold starts for image-packaged functions while ZIP-packaged functions remain in the 3-4 second range. We have seen this across multiple regions during our tests. What could be the reason for the performance difference?

Why are cold starts faster for Container Image deployments?

What can explain container image cold starts being better than those of ZIP-packaged functions (at least after the first execution batch)? The answer is outlined in the paper On-demand Container Loading in AWS Lambda (Marc Brooker, Mike Danilov, Chris Greenwood, Phil Piwonka), which describes the extensive optimisations that we avail of when using Lambda’s container image deployment method.

What’s the gist of this paper?

- When you deploy a container image function, the filesystem is flattened and each file is chunked into 512KiB blocks.

- Container image deployments use a number of tiered caches that identify reused files across images, including files used in multiple customers’ images. Blocks are encrypted in such a way that your code remains secure but cached chunks that are common among multiple AWS customers can still be reused. This can eliminate the need to load most of a container image’s bytes from S3.

- Caches exist in each Lambda worker, then in availability zones. If chunks are not found in either of these caches, they are retrieved from S3. In production testing, this paper reports cache hit rates of 65% in the worker cache and more than 99% in the AZ cache.

- Even though a container image may be up to 10GB, many files are never loaded. The block-level virtual filesystem used takes advantage of this, only loading files based on I/O requests.

This design seems to match with our benchmarks. Cold start times for images are slower after initial deployment as caches are primed but significantly faster thereafter. As long as container images do not change dramatically on subsequent deployments, cache hit rates should still remain high and cold starts should be low. As long as you can bear the near-10-second overhead for the initial period following first deployment, there should be no reason to reject container image deployments based on cold start performance.
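
The chunk reuse idea can be illustrated with a toy model. The sketch below is a deliberate simplification and not how Lambda is implemented: it uses a plain SHA-256 digest of each chunk as a stand-in for the paper's encryption-aware deduplication, and the two byte strings standing in for images are invented for the example.

```python
import hashlib
from typing import List, Set

CHUNK_SIZE = 512 * 1024  # 512 KiB, the block size mentioned above


def chunk_digests(data: bytes, chunk_size: int = CHUNK_SIZE) -> List[str]:
    """Split `data` into fixed-size chunks and return a SHA-256 digest per chunk."""
    return [
        hashlib.sha256(data[offset:offset + chunk_size]).hexdigest()
        for offset in range(0, len(data), chunk_size)
    ]


def cache_hit_rate(image_digests: List[str], cache: Set[str]) -> float:
    """Fraction of an image's chunks already present in a (hypothetical) cache."""
    if not image_digests:
        return 0.0
    hits = sum(1 for digest in image_digests if digest in cache)
    return hits / len(image_digests)


# Two toy "images" sharing a common base but differing in application code.
base = b"B" * (3 * CHUNK_SIZE)
image_a = base + b"a" * CHUNK_SIZE
image_b = base + b"b" * CHUNK_SIZE

cache = set(chunk_digests(image_a))  # image A was loaded earlier and primed the cache
rate = cache_hit_rate(chunk_digests(image_b), cache)
print(f"cache hit rate for image B: {rate:.0%}")
```

Because the two toy images share their base content, most of image B's chunks are already cached after image A has been loaded, which mirrors why images built on common base layers benefit from warm caches.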

Does memory size help?

With AWS Lambda, you can configure your function’s memory availability with values ranging from 128MB to 10GB. The amount of CPU allocated is directly proportional to the memory configured. The same proportional allocation applies to network and disk I/O. 1,769MB of memory equates to 1 vCPU. It follows that an increased memory allocation may improve module and container load performance. Of course, the cost of AWS Lambda executions is also directly proportional to the memory allocated, so we want to keep the allocation as low as is required for handler performance. Let’s first test our assumption: does increasing the memory improve cold start performance? Let’s look at the average cold start duration for each memory configuration across all invocations in our test.

| PackageMethod | MemCfg (MB) | InitDuration (ms) |
|---|---|---|
| image | 1024.0 | 3559.0 |
| image | 1769.0 | 3602.0 |
| image | 3538.0 | 3591.0 |
| image | 10240.0 | 3536.0 |
| zip | 1024.0 | 3010.0 |
| zip | 1769.0 | 3008.0 |
| zip | 3538.0 | 2986.0 |
| zip | 10240.0 | 3007.0 |
| zip_layers | 1024.0 | 3264.0 |
| zip_layers | 1769.0 | 3249.0 |
| zip_layers | 3538.0 | 3252.0 |
| zip_layers | 10240.0 | 3270.0 |

Memory size does not impact cold start performance in any obvious way. While this might be surprising, it’s less so when you consider that every function gets 2 vCPUs during the cold start (INIT) phase, regardless of the memory configuration. The workload under test in our example had an average runtime duration of approximately 100ms, and this was also not significantly impacted by memory allocation. Cold start time was far greater.
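
For readers who want to reproduce this kind of summary without a notebook, a grouped average can be computed with the standard library. This sketch is an assumption about the shape of the data (one tuple per cold start), and the sample records are made-up values, not our measurements.

```python
from collections import defaultdict
from statistics import mean


def mean_init_by_config(records):
    """Average InitDuration per (packaging method, memory size) pair.

    `records` is an iterable of (package_method, mem_cfg_mb, init_duration_ms) tuples.
    """
    grouped = defaultdict(list)
    for method, mem_cfg, init_ms in records:
        grouped[(method, mem_cfg)].append(init_ms)
    # Sort keys so the output order matches a table grouped by method, then memory.
    return {key: round(mean(values)) for key, values in sorted(grouped.items())}


# Made-up sample values for illustration only.
records = [
    ("zip", 1024, 3000.0),
    ("zip", 1024, 3020.0),
    ("zip", 10240, 3005.0),
    ("image", 1024, 1500.0),
    ("image", 1024, 5500.0),
]
summary = mean_init_by_config(records)
for (method, mem_cfg), avg_ms in summary.items():
    print(f"{method:<10} {mem_cfg:>6} MB  {avg_ms} ms")
```

Note how a plain average hides the bimodal behaviour of image cold starts in the sample data, which is one reason to look at the per-batch plots as well as the averages.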

Module Load Time

While performing this exercise, we were interested in understanding how the load time for Python modules was impacting cold start, separating these timings from the load time for the runtime and the rest of the code. To understand this, we modified the Python imports to use __import__, logging the load time for each as a metric. Here, we present the P95 load times for each module (in milliseconds) with the total time in seconds. As well as measuring the module load time, we included separate metrics for the initialisation time of the Powertools for AWS Lambda module and the boto3 S3 client (powertools_init_ms and boto3_init_ms respectively).

Note: Loading pyarrow.parquet inherently loads pyarrow. That’s the reason we see near-zero load times for pyarrow.
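
A minimal sketch of this kind of import instrumentation is shown below. It is not the code from the repository: it swaps in `importlib.import_module` for bare `__import__` so that dotted names such as `pyarrow.parquet` return the submodule itself, the helper name `timed_import` is hypothetical, and standard-library modules stand in for the data science packages.

```python
import importlib
import time


def timed_import(module_name: str, timings: dict):
    """Import `module_name` and record the elapsed wall-clock time in milliseconds."""
    started = time.perf_counter()
    module = importlib.import_module(module_name)
    timings[f"{module_name}_ms"] = (time.perf_counter() - started) * 1000
    return module


load_times: dict = {}
# Standard-library modules stand in for pandas, numpy and pyarrow here.
json = timed_import("json", load_times)
etree = timed_import("xml.etree.ElementTree", load_times)

for name, elapsed_ms in load_times.items():
    print(f"{name}: {elapsed_ms:.2f}")
```

A module that has already been imported, directly or as a side effect of another import, returns almost immediately, which is the effect described in the note above.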

| pkg_method | mem_cfg | hour | pandas_ms | numpy_ms | pyarrow_ms | parquet_ms | boto3_ms | powertools_ms | powertools_init_ms | boto3_init_ms | total_s |
|---|---|---|---|---|---|---|---|---|---|---|---|
| image | 1024.0 | 2023-11-27 19:00:00 | 2523.0 | 338.0 | 1.0 | 422.0 | 252.0 | 282.0 | 188.0 | 171.0 | 4.1732 |
| image | 1024.0 | 2023-11-27 21:00:00 | 903.0 | 267.0 | 1.0 | 82.0 | 249.0 | 47.0 | 54.0 | 156.0 | 1.7545 |
| image | 1024.0 | 2023-11-28 7:00:00 | 518.0 | 185.0 | 1.0 | 53.0 | 206.0 | 28.0 | 47.0 | 141.0 | 1.1751 |
| image | 1024.0 | 2023-11-28 8:00:00 | 649.0 | 217.0 | 1.0 | 60.0 | 265.0 | 32.0 | 47.0 | 151.0 | 1.4164 |
| image | 1024.0 | 2023-11-28 9:00:00 | 588.0 | 217.0 | 1.0 | 59.0 | 236.0 | 37.0 | 55.0 | 158.0 | 1.3472 |
| zip | 1024.0 | 2023-11-27 19:00:00 | 1834.0 | 545.0 | 1.0 | 120.0 | 227.0 | 61.0 | 76.0 | 174.0 | 3.0349 |
| zip | 1024.0 | 2023-11-27 21:00:00 | 1777.0 | 522.0 | 1.0 | 119.0 | 223.0 | 60.0 | 72.0 | 160.0 | 2.9316 |
| zip | 1024.0 | 2023-11-28 7:00:00 | 1733.0 | 522.0 | 1.0 | 114.0 | 216.0 | 58.0 | 75.0 | 154.0 | 2.8696 |
| zip | 1024.0 | 2023-11-28 8:00:00 | 1804.0 | 540.0 | 1.0 | 122.0 | 247.0 | 63.0 | 73.0 | 162.0 | 3.0074 |
| zip | 1024.0 | 2023-11-28 9:00:00 | 2139.0 | 642.0 | 1.0 | 143.0 | 342.0 | 69.0 | 97.0 | 224.0 | 3.6529 |
| zip_layers | 1024.0 | 2023-11-27 19:00:00 | 1759.0 | 561.0 | 1.0 | 42.0 | 253.0 | 69.0 | 337.0 | 246.0 | 3.2626 |
| zip_layers | 1024.0 | 2023-11-27 21:00:00 | 1745.0 | 584.0 | 1.0 | 39.0 | 261.0 | 65.0 | 320.0 | 226.0 | 3.2377 |
| zip_layers | 1024.0 | 2023-11-28 7:00:00 | 1748.0 | 547.0 | 1.0 | 39.0 | 220.0 | 67.0 | 345.0 | 230.0 | 3.192 |
| zip_layers | 1024.0 | 2023-11-28 8:00:00 | 1748.0 | 547.0 | 1.0 | 42.0 | 234.0 | 67.0 | 338.0 | 233.0 | 3.2061 |
| zip_layers | 1024.0 | 2023-11-28 9:00:00 | 1892.0 | 592.0 | 1.0 | 41.0 | 270.0 | 66.0 | 377.0 | 284.0 | 3.5185 |

This shows us that the module load time in Python is significant. Again, initial load times for container image deployments are high: over 4 seconds in total at P95 in the table above, with about 2.5 seconds of that accounted for by Pandas alone. Subsequent invocations show a big improvement in module load time (below two seconds), while ZIP-packaged functions exhibit a stable module load time of around 3-4 seconds in total.

We checked separately whether varying memory configurations for each function between 1,024 MB and 10,240 MB had any impact on module load time and again, it did not. It is better to choose your memory configuration based on cost vs. runtime performance rather than considering cold start performance at all.

Module load times are a significant contributor to overall cold start times. What does this tell us? For one, it can tell us to be careful of importing unnecessary dependencies. Python does not allow us to benefit from very fine-grained code importing as is the case with the Node.js runtime, where we can tree-shake and bundle reachable code only. Another conclusion we can draw is that SnapStart, a Lambda feature that was only available for Java at the time of writing, could dramatically improve Python data science workloads. Python support for SnapStart could allow us to load modules only at deployment time, and cold starts would only have to load the checkpointed state of the pre-loaded Python environment from memory. Read more about SnapStart in the documentation.
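
One way to act on the advice about unnecessary imports is to defer a heavy import until the code path that needs it actually runs. The sketch below is a hypothetical handler, with the standard-library `statistics` module standing in for a heavy dependency such as Pandas. Keep in mind that this moves the load time from the INIT phase to the first invocation that takes that path, so it helps most when many invocations never need the dependency.

```python
import importlib
from functools import lru_cache


@lru_cache(maxsize=None)
def lazy_module(name: str):
    """Import a module on first use and cache it for later calls."""
    return importlib.import_module(name)


def handler(event, context):
    """Only the reporting path pays for the (stand-in) heavy import."""
    if event.get("action") == "report":
        # "statistics" stands in for a heavy dependency such as pandas.
        stats = lazy_module("statistics")
        return {"mean": stats.mean(event["values"])}
    return {"status": "skipped"}
```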

Which packaging method should I use?

Image-based Lambda Functions are not the cold start menace we might have predicted. In fact, performance is significantly better over the long term. That said, if your code easily fits in a ZIP-packaged function, it’s probably still the best default starting option. You will have less maintenance and security surface area to consider in the long term. However, once you find issues with fitting all modules in a ZIP package, moving to container images will give you great performance and scalability. Just be mindful that you need to keep your runtime and dependencies up to date to stay secure.

Lastly, once you have your workload up and running, measure and adapt if necessary. There are so many factors at play that it is generally difficult to make correct assumptions about the tradeoffs between developer experience, performance and cost. The code example provided shows that you can quite easily use CloudWatch metrics and Logs to gain quick insights into quantitative drivers for your ultimate decision.

If you have any questions or comments, don’t hesitate to reach out on LinkedIn or Twitter ✉️

A huge thanks to David Lynam, Mark Hodnett, Diren Akkoc and Marin Bivol for their contributions to this article!

References

- Companion source code for this article: https://github.com/fourTheorem/lambda-datasci-perf

- The State of Serverless, Datadog: https://www.datadoghq.com/state-of-serverless/

- 10 Best Data Science Programming Languages to Know In 2023, United States Data Science Institute: https://www.usdsi.org/data-science-insights/10-best-data-science-programming-languages-to-know-in-2023#:~:text=1,packages%20to%20execute%20data

- Lambda Cold Starts and Bootstrap Code by Luc van Donkersgoed: https://lucvandonkersgoed.com/2022/04/08/lambda-cold-starts-and-bootstrap-code/#bootstrap-code-gets-more-cpu-power

- When is the Lambda Init Phase Free, and when is it Billed? by Luc van Donkersgoed: https://lucvandonkersgoed.com/2022/04/09/when-is-the-lambda-init-phase-free-and-when-is-it-billed/

- On-demand Container Loading in AWS Lambda, Marc Brooker, Mike Danilov, Chris Greenwood, Phil Piwonka: https://arxiv.org/abs/2305.13162