VPC Lattice simplifies API communication between services or microservices in one or more AWS accounts. It dispenses with the complexity and organisational problems often associated with routing tables, VPC Peering and Transit Gateway. Here, we’ll give a complete overview of VPC Lattice based on our experience since it was first launched in March 2023. We will present a fictional eCommerce scenario with a full code example so you can see how it really works in practice. This post is aimed at AWS networking administration teams and development teams alike. By the end, you should have all the knowledge and reference material required to go all-in on VPC Lattice!

Before we dive deep into the sample application architecture and code (available on GitHub!), let’s first cover off all of the concepts, rationale and benefits of Lattice.

What is VPC Lattice?

Lattice is a service designed to make inbound and outbound east-west connectivity between services and applications possible with a zero trust approach to authorisation. East-west connectivity refers to communication between servers or services. It differs from north-south connectivity, which is concerned with communication between servers and clients, or between servers and an external entity. VPC Lattice is concerned with this “horizontal” connectivity, not with public-facing APIs. Zero trust relies less on where you are in the network and more on strong, fine-grained authorisation, where every request must be authenticated and have permission policies applied.

Lattice works in single or multiple AWS accounts, and primarily aims to minimise the amount of network configuration. In doing so, it intends to solve the common friction that arises when the responsibility for application networking is split between development and networking teams. Approaches like routing tables, VPC Peering, PrivateLink and Transit Gateway are the existing alternatives to Lattice. With those options, development and admin teams usually have a significant back-and-forth every time they need to set up or alter this inter-application networking. Lattice provides separate resources geared towards the two team types, and also provides a number of distinct advantages:

- You never have any issues with overlapping IP ranges and CIDR blocks

- Zero-trust authorisation with IAM is available to both teams, alongside typical Security Group support

- Lattice doesn’t use any IP addresses or ENIs in your network

- No proxy, service mesh, or container sidecars are required

- It provides integration with instances, containers and Lambda Functions

- Lattice incorporates load balancing, including rule-based HTTP request routing and traffic shifting

- Custom DNS is supported

- Centralised logging and auditing of traffic is very simple and effective

What is it good for?

VPC Lattice can be used for HTTP and gRPC APIs between microservices, services or applications. It also gives you a simple way to provide many private APIs within an AWS organisation, with custom DNS, scalability and security.

One of the features of Lattice is the ability to move from one compute backend to another as part of a technology migration. It could serve as part of a migration from on-premises, instances or containers to ECS, EKS or Lambda.

Lattice supports integration with:

- Kubernetes, including EKS

- ECS on EC2 or Fargate

- Lambda

- Anything else with an IP address

Lattice is an HTTP-only service: it works at the application layer (HTTP) as well as the network layer (IP). It does support gRPC, but only because gRPC itself runs over HTTP/2.

Fundamental Lattice Concepts

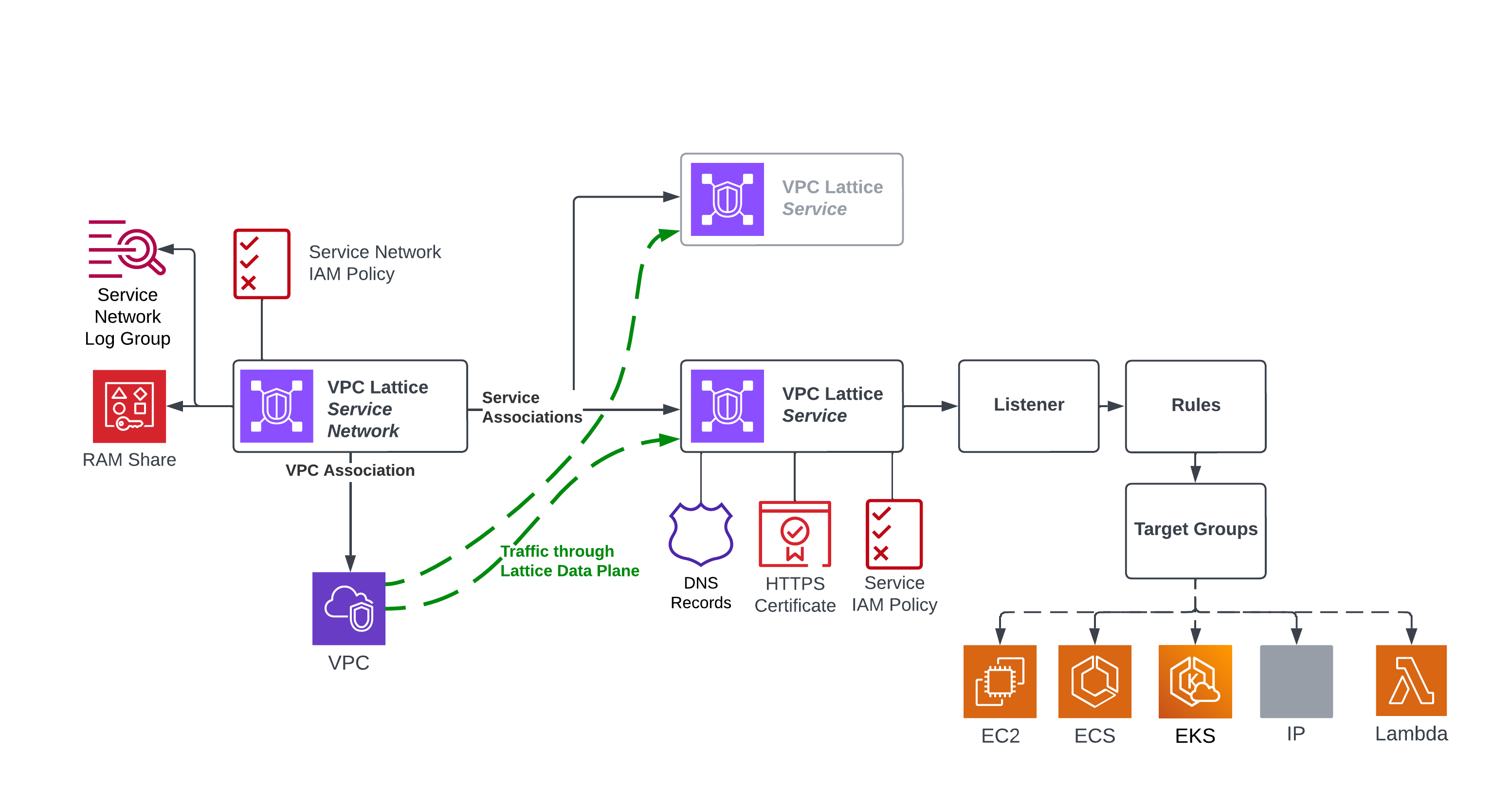

The two main concepts with VPC Lattice are the Service Network and the Service. Within the Service, you have a further set of resources which are very familiar to anyone who has used Application Load Balancers (ALBs).

Service Network

A Service Network is a logical grouping for related Lattice services and VPCs. This part is typically governed by the admin team, where they can enforce coarse-grained authorisation and network security. A Service Network can be shared with other teams’ accounts using Resource Access Manager (RAM) so they can make services available on it or consume services from it.

Service

The VPC Lattice Service is the fundamental building block and is backed by one of the supported target types: IP, Lambda, ECS or Kubernetes. A Service is typically owned by the development team. Teams can create and manage services without any of the usual IP network setup. The service’s IP range only needs to be large enough for the service itself to scale, and it doesn’t matter if it overlaps with other teams’ ranges. With a service, the team can also put finer-grained security policies in place with IAM. The service itself can also be shared with RAM, which gives you a choice over who controls associating the service with the service network.

Listener

A listener is a load-balancer concept within a Service. You set the port and protocol (HTTP or HTTPS). Then, you can create prioritised rules with path, header or method-based routing to direct traffic to targets.
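To illustrate how prioritised rules behave, here is a small Python model of rule evaluation: rules are checked in ascending priority order, the first rule whose conditions all match wins, and anything unmatched falls through to the listener’s default action. This is a simplified sketch for intuition only, not the VPC Lattice API; the Rule class, the prefix-only path matching and the target group names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Rule:
    """Simplified listener rule; an illustrative model, not the VPC Lattice API."""
    priority: int                      # lower numbers are evaluated first
    target_group: str                  # hypothetical target group name
    path_prefix: Optional[str] = None  # matches if the request path starts with this
    method: Optional[str] = None       # matches a specific HTTP method
    headers: Dict[str, str] = field(default_factory=dict)  # exact header matches


def rule_matches(rule: Rule, method: str, path: str, headers: Dict[str, str]) -> bool:
    """A rule matches only if every condition it defines is satisfied."""
    if rule.path_prefix is not None and not path.startswith(rule.path_prefix):
        return False
    if rule.method is not None and rule.method.upper() != method.upper():
        return False
    # HTTP header names are case-insensitive, so compare them lower-cased.
    lowered = {name.lower(): value for name, value in headers.items()}
    return all(lowered.get(name.lower()) == value for name, value in rule.headers.items())


def route(rules: List[Rule], default_target: str, method: str, path: str,
          headers: Dict[str, str]) -> str:
    """Return the target of the first matching rule in priority order, else the default."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule_matches(rule, method, path, headers):
            return rule.target_group
    return default_target


rules = [
    Rule(20, "items-lambda", path_prefix="/items"),
    Rule(10, "items-write-fargate", path_prefix="/items", method="POST"),
    Rule(5, "beta-tg", headers={"x-beta": "true"}),
]
print(route(rules, "default-tg", "POST", "/items", {}))  # items-write-fargate
```

Note how the POST rule at priority 10 shadows the broader /items rule at priority 20 for writes, while reads still fall through to it.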

Target Groups

Within a listener, Target Groups represent the compute that actually serves the requests, including Lambda Functions, servers with an IP address, EC2 instances, or Application Load Balancers. Kubernetes deployments using VPC Lattice can use the Gateway Controller to automatically register the Service Network, Service and IP-based targets. ECS deployments, on the other hand, use Application Load Balancer targets. This represents a significant difference between EKS and ECS. While Application Load Balancers are already well integrated with ECS, having both the VPC Lattice Listeners and Target Groups and the ALB Listeners and Target Groups seems like extra cost and maintenance overhead. I hope we can see a more direct integration between ECS and Lattice in the future!

Authorisation Policies

Authorisation Policies are IAM resource-based policies that can be associated with either a Service Network, a Service, or both. The Service Network policy is usually coarser grained. For example, you might allow traffic from principals that are in the AWS Organisation. Finer-grained control can then be left to individual services, where you might use IAM Conditions to restrict access to principals with a specific project tag.
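As a sketch of that split, the following Python builds the two policy documents as dictionaries and prints one as JSON. It uses the vpc-lattice-svcs:Invoke action with the standard aws:PrincipalOrgID and aws:PrincipalTag condition keys; the organisation ID, the project tag key and its value are hypothetical placeholders, and Resource is left as "*" where you might prefer to narrow it to specific service ARNs.

```python
import json

ORG_ID = "o-exampleorgid"  # hypothetical AWS Organization ID
PROJECT_TAG = "nile"       # hypothetical value of a "project" principal tag


def invoke_policy(condition: dict, resource: str = "*") -> dict:
    """Build an auth policy allowing vpc-lattice-svcs:Invoke when `condition` holds."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                "Action": "vpc-lattice-svcs:Invoke",
                "Resource": resource,
                "Condition": condition,
            }
        ],
    }


# Coarse-grained policy for the Service Network: any principal in the organisation.
service_network_policy = invoke_policy(
    {"StringEquals": {"aws:PrincipalOrgID": ORG_ID}}
)

# Finer-grained policy for a single Service: only principals tagged project=nile.
service_policy = invoke_policy(
    {"StringEquals": {"aws:PrincipalTag/project": PROJECT_TAG}}
)

print(json.dumps(service_policy, indent=2))
```

When both policies are in place, a request has to be allowed by both of them, which is what gives you the layered, defence-in-depth model.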

Discovery, Resolution and Routing

Since we don’t have any IP routing setup with Lattice, you might ask how traffic gets from the API consumer to the provider and back! This is where the magic of Lattice is revealed.

Every service gets a generated DNS name, which is publicly resolvable. If you want to use custom DNS, you can specify the domain and HTTPS certificate in the Lattice Service and then create a CNAME record that resolves to the domain generated by Lattice. When these domains are resolved, the value is a link-local IP address starting with 169.254. This is a special IP range that is not routable and is only reachable from hosts within the VPC.
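If you want to see this for yourself from a consumer host, a small Python sketch like the one below can check whether a name resolves to an IPv4 link-local address (169.254.0.0/16). The helper names are our own, only IPv4 is checked, and resolution goes through the host’s normal resolver.

```python
import ipaddress
import socket
from typing import List

LINK_LOCAL_V4 = ipaddress.ip_network("169.254.0.0/16")


def is_link_local_v4(address: str) -> bool:
    """True if the address falls in the IPv4 link-local range 169.254.0.0/16."""
    ip = ipaddress.ip_address(address)
    return ip.version == 4 and ip in LINK_LOCAL_V4


def resolve_ipv4(hostname: str) -> List[str]:
    """Resolve a hostname to its distinct IPv4 addresses using the system resolver."""
    infos = socket.getaddrinfo(hostname, 443, family=socket.AF_INET,
                               type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})
```

From an instance in an associated VPC, something like all(is_link_local_v4(a) for a in resolve_ipv4("service.cart.awsbites.click")) (the custom domain from our demo application) should hold if the behaviour described above applies.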

This IP is like a secret door through the AWS network that uses a special VPC Lattice Data Plane to manage traffic from the consumer to the provider.

In order to consume a Lattice service, your consumer must have access to a VPC that is associated with the Lattice Service Network. This is something that you can set up when the service is first deployed. Once that is done, your consumer can use the DNS name to ‘discover’ the service and all traffic is transparently routed through those special link-local IPs.

While the generated DNS name is public, the resolved value is useless outside of the VPCs that are associated with the Service Network. This approach taken by Lattice eliminates the need for service meshes and sidecars, nicely simplifying the setup and configuration overhead.

While consumers must be in a VPC, you don’t actually have to be in a VPC to provide a service to other consumers. We’ll cover this a bit more in the section on Lambda and Lattice.

Request Signing

While IAM authorisation policies are optional in both the Service Network and in Services, most teams are likely to take advantage of them to provide defence in depth. This means that, like all IAM scenarios, requests need to be signed. The IAM principal involved needs to have the right VPC Lattice permissions (like vpc-lattice-svcs:Invoke) and the signature should be valid for the service scope (vpc-lattice-svcs) and the target region.

Of course, this feels like a bit of a leak in the abstraction, since consumers need to be aware that the API they are using is provided by Lattice and ensure that:

- Their VPC is associated with the Lattice Service Network

- The IAM principal, e.g., an IAM Role, has permissions for the vpc-lattice-svcs:Invoke action

- The request is signed using AWS SigV4 in a way that works with Lattice.

Normally, requests sent to AWS services include a hash of the payload as part of the SigV4 signature. Computing that hash can incur a performance penalty when the payload is large. Since VPC Lattice services can have large payloads, Lattice does not support payload signing, so the x-amz-content-sha256: UNSIGNED-PAYLOAD request header must be present when sending your signed request.
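To make these signing requirements concrete, here is a minimal, standard-library-only Python sketch of SigV4 signing for a Lattice request. It signs the host, x-amz-date and x-amz-content-sha256 headers (plus x-amz-security-token for temporary credentials), puts the literal UNSIGNED-PAYLOAD value where the payload hash would normally go, and assumes a simple path that needs no special URI encoding. The function name and parameters are our own; in practice you would more likely use botocore’s SigV4Auth with the vpc-lattice-svcs service name and the same header.

```python
import datetime
import hashlib
import hmac
from typing import Dict, Optional
from urllib.parse import parse_qsl, quote, urlsplit

UNSIGNED_PAYLOAD = "UNSIGNED-PAYLOAD"


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sign_lattice_request(
    method: str,
    url: str,
    region: str,
    access_key: str,
    secret_key: str,
    session_token: Optional[str] = None,
    now: Optional[datetime.datetime] = None,
    service: str = "vpc-lattice-svcs",
) -> Dict[str, str]:
    """Return SigV4 headers for a Lattice request, leaving the payload unsigned."""
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")

    parts = urlsplit(url)
    canonical_uri = quote(parts.path or "/", safe="/")
    query = sorted(parse_qsl(parts.query, keep_blank_values=True))
    canonical_query = "&".join(f"{quote(k, safe='')}={quote(v, safe='')}" for k, v in query)

    # Headers covered by the signature; the content hash header carries the marker.
    headers = {
        "host": parts.netloc,
        "x-amz-content-sha256": UNSIGNED_PAYLOAD,
        "x-amz-date": amz_date,
    }
    if session_token:
        headers["x-amz-security-token"] = session_token
    names = sorted(headers)
    canonical_headers = "".join(f"{n}:{headers[n].strip()}\n" for n in names)
    signed_headers = ";".join(names)

    # UNSIGNED-PAYLOAD replaces the usual SHA-256 hash of the request body.
    canonical_request = "\n".join(
        [method.upper(), canonical_uri, canonical_query,
         canonical_headers, signed_headers, UNSIGNED_PAYLOAD]
    )
    scope = f"{date_stamp}/{region}/{service}/aws4_request"
    string_to_sign = "\n".join(
        ["AWS4-HMAC-SHA256", amz_date, scope,
         hashlib.sha256(canonical_request.encode("utf-8")).hexdigest()]
    )

    # Derive the signing key: secret -> date -> region -> service -> "aws4_request".
    key = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, service, "aws4_request"):
        key = _hmac_sha256(key, part)
    signature = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()

    headers["authorization"] = (
        f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
        f"SignedHeaders={signed_headers}, Signature={signature}"
    )
    return headers
```

The returned headers (plus a content-type, which is not signed here) can be passed to urllib.request.Request together with the request body. Because the payload is unsigned, the same signature holds whatever body you send.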

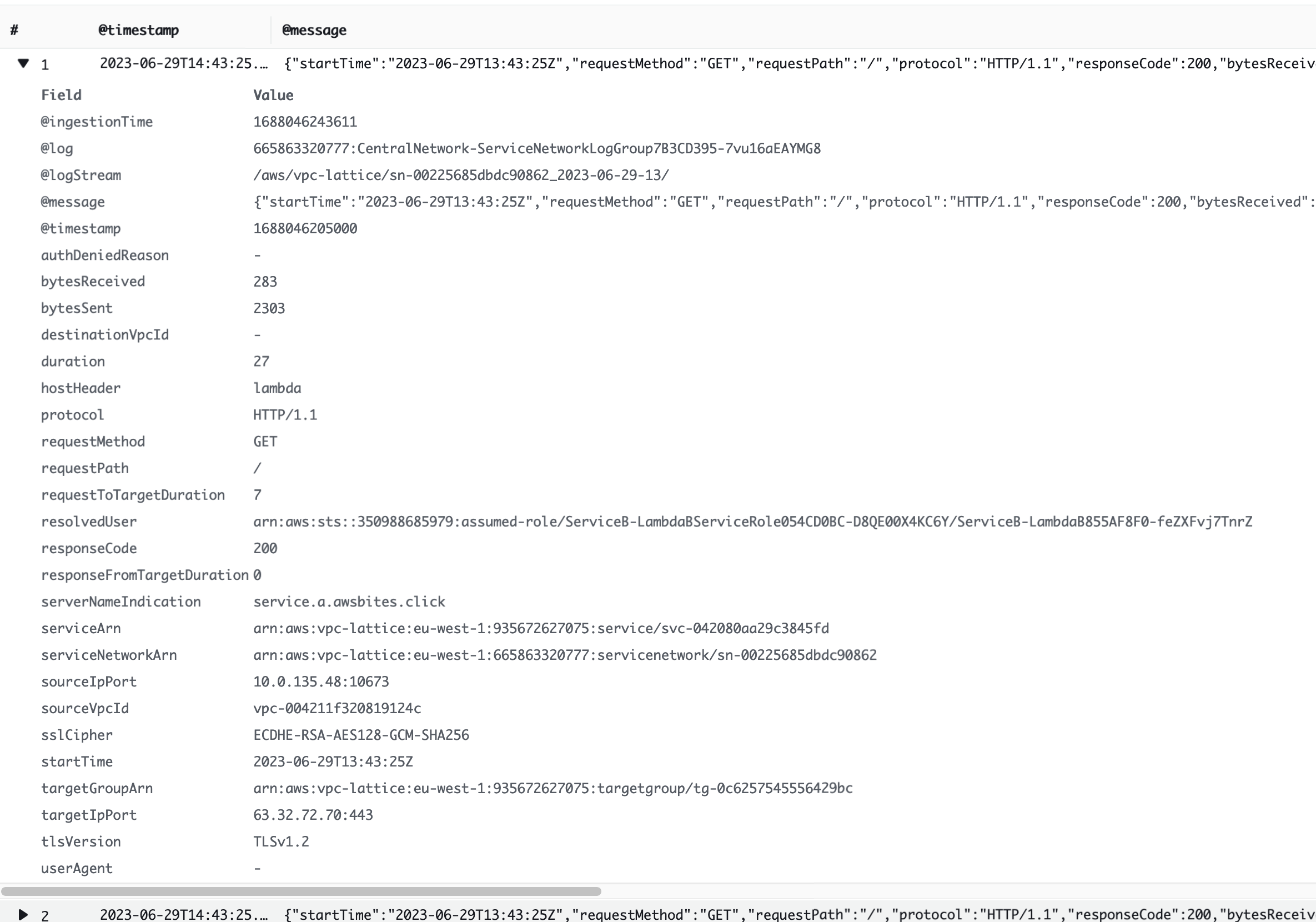

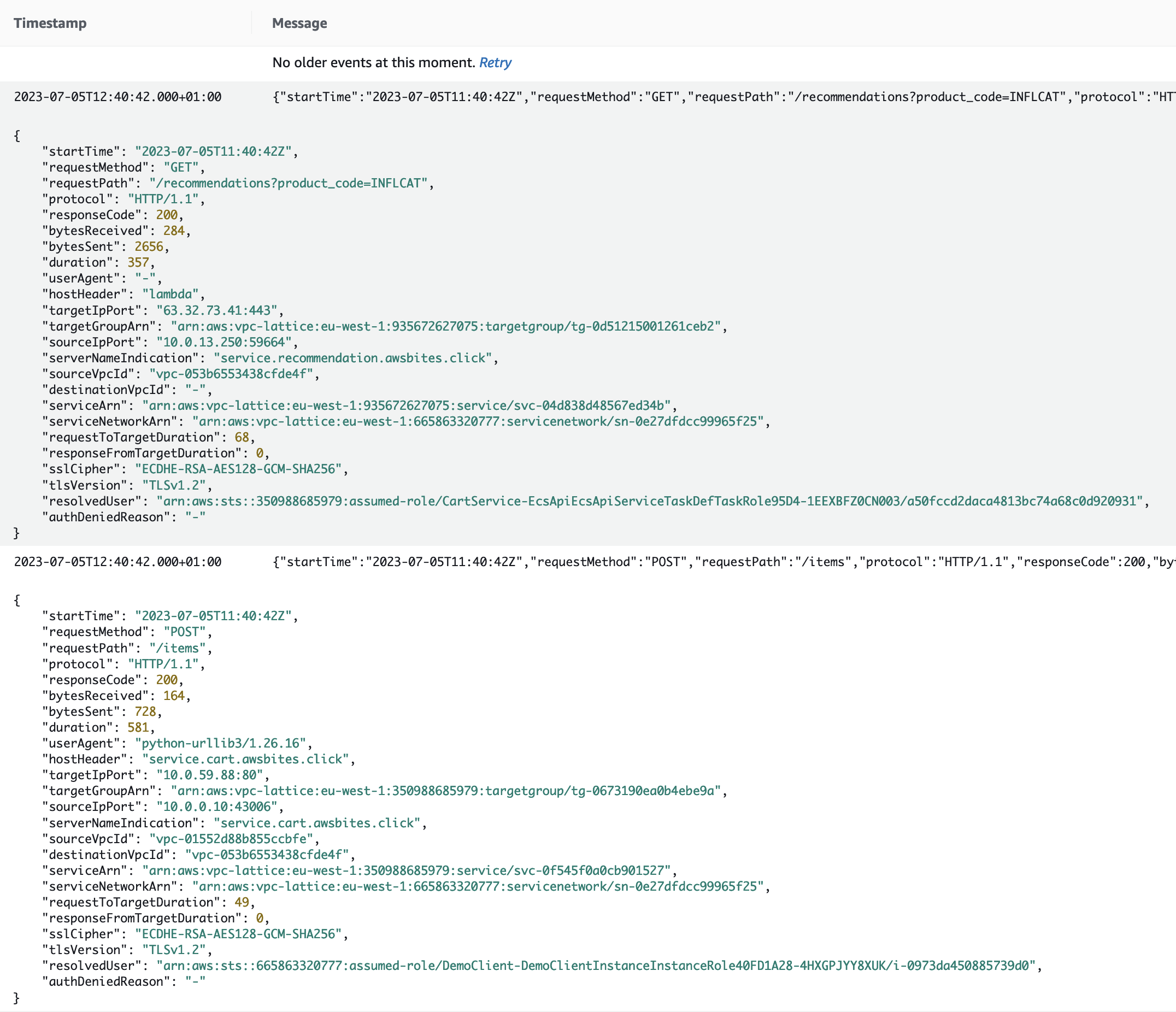

Monitoring - Lattice’s Killer Feature!

Networking teams are likely to be most excited by the simple centralised logs available for Lattice Service Networks. All traffic in the service network can be logged in a single CloudWatch Log Group. There, you can see the request, the IAM principal, IP address, service, service network and target group.

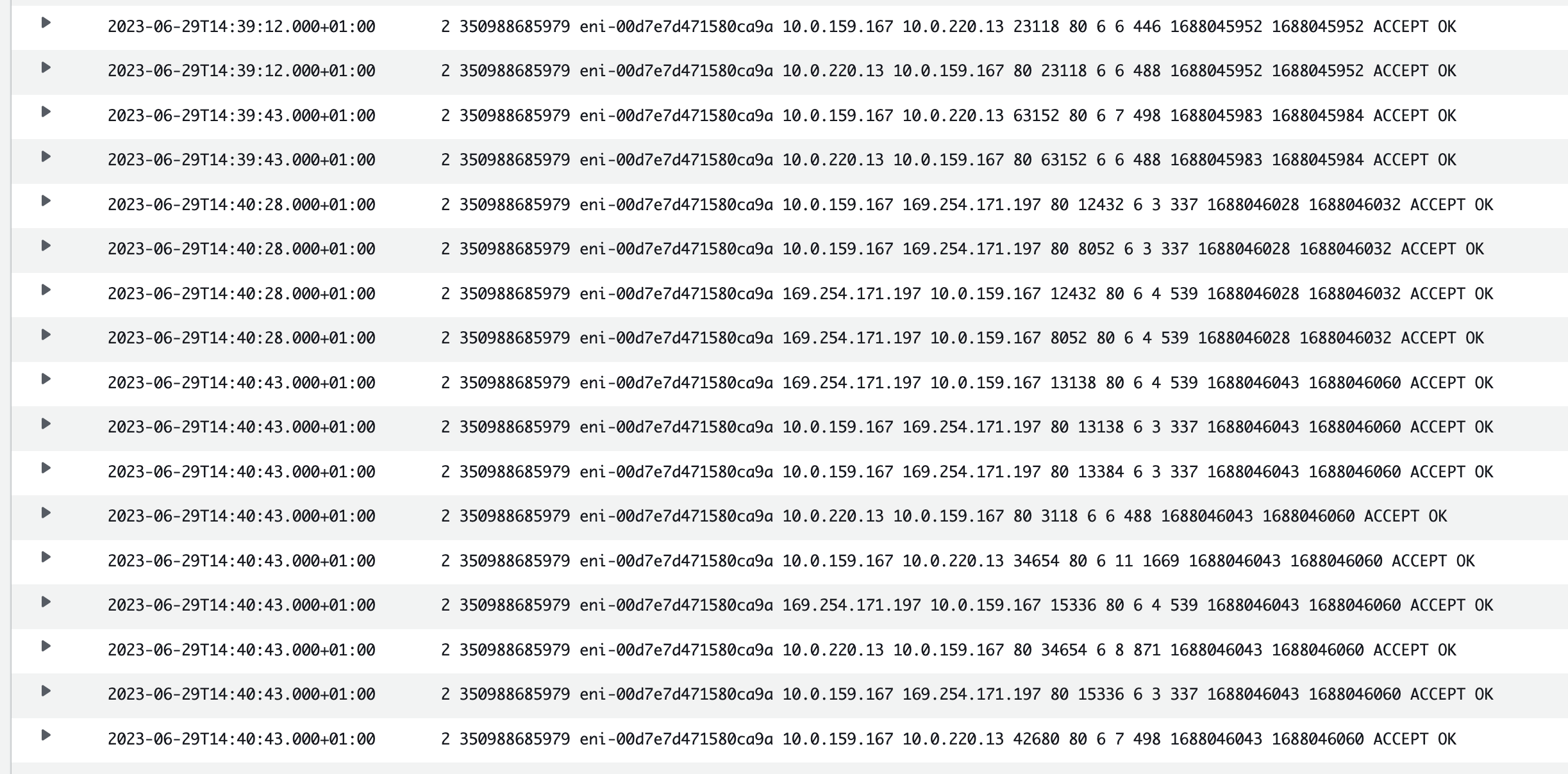

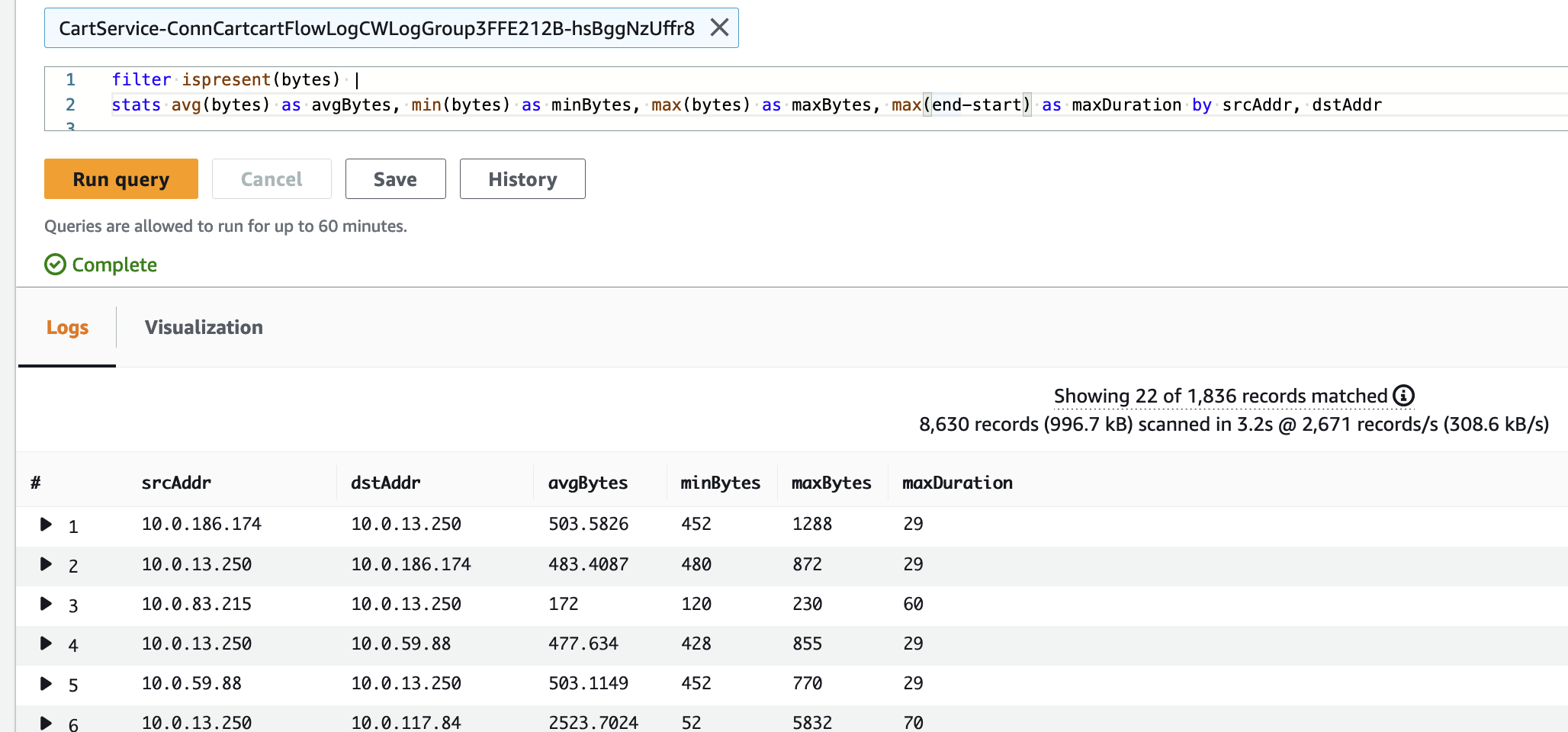

VPC Flow Logs can still be used within each VPC and will show you the flows between IPs in your VPCs and those link-local Lattice IPs.

Lattice does not provide any in-built X-Ray tracing support yet. Its endpoint won’t add any trace IDs and you have to rely on the centralised log to see the Lattice latency.

How does it scale?

Lattice works across multiple accounts but only within one region. This is generally acceptable for this kind of internal, east-west communication. Most of the limits are soft quotas that can be increased. By default, you can have, per account and within each region:

- 10 service networks

- 500 services

- 500 target groups

- 500 VPC associations per service network

- 5 target groups per service

- 1,000 targets per target group

Per Availability Zone (AZ), you get:

- 10 Gbps bandwidth

- 10,000 requests per second

AWS Lambda and Lattice

We mentioned that services do not have to be in a VPC to provide a Lattice Service. Specifically, if you are using Lambda targets, and those functions are not consuming any other Lattice Services, they do not have to be configured with VPC subnets or security groups. This is because Lattice provides a new Lambda trigger type, an additional synchronous HTTP event added to the list that includes API Gateway, AppSync, Application Load Balancers, and Function URLs.

Lattice event configuration for Lambda is pretty straightforward. You just specify Lambda as the Target Group type and provide a Function to invoke. Add this capability to the simple Custom DNS setup, and you end up with a much simpler way to implement private APIs with custom DNS compared to API Gateway. Of course, you are missing the other advanced features of API Gateway. For an overview of the advantages of API Gateway compared to the other options, check out our podcast episode on that topic.
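To give a feel for the programming model, here is a minimal sketch of a Python handler for a Lattice Lambda target. It assumes the snake_case event fields that appear in the sample event from our demo application (method, raw_path, body, is_base64_encoded) and returns an ALB-style response with statusCode, headers, body and isBase64Encoded; that response shape is our assumption, so check the Lattice documentation before relying on it. The /items route and the response bodies are hypothetical, not the demo application’s actual logic.

```python
import base64
import json


def handler(event, context):
    """Handle a VPC Lattice invocation (sketch, assuming the demo event shape)."""
    body = event.get("body") or ""
    if event.get("is_base64_encoded"):
        body = base64.b64decode(body).decode("utf-8")

    method = event.get("method")
    path = event.get("raw_path")

    # Hypothetical routing: only POST /items is handled.
    if method == "POST" and path == "/items":
        item = json.loads(body) if body else {}
        status, payload = 201, {"added": item}
    else:
        status, payload = 404, {"message": f"No route for {method} {path}"}

    # Assumed ALB-style response shape.
    return {
        "isBase64Encoded": False,
        "statusCode": status,
        "headers": {"content-type": "application/json"},
        "body": json.dumps(payload),
    }
```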

The event payload for Lattice is different from the types used for ALB and API Gateway, but not drastically so. If you are using one of those triggers and want to switch over, you have a small amount of work to do. Here’s an example of a Lambda event from our demo application:

```json
{
  "raw_path": "/items",
  "method": "POST",
  "headers": {
    "content-type": "application/json",
    "content-length": "0",
    "x-forwarded-for": "10.0.0.10",
    "accept-encoding": "identity",
    "user-agent": "python-urllib3/1.26.16",
    "host": "service.cart.awsbites.click",
    "x-amz-content-sha256": "UNSIGNED-PAYLOAD"
  },
  "query_string_parameters": {},
  "body": "",
  "is_base64_encoded": false
}
```

💰 What’s the damage?

It all boils down to a trade-off between the engineering time and communication-overhead/strife saved from not having to work with existing IP routing mechanisms. If you have 1000s of microservices, it might not be worth the cost overhead, but for a smaller number of services and VPCs, it seems well worth it.

VPC Lattice pricing has three dimensions: per service-hour, per GB processed, and per request. At the time of writing, this equated to:

- 2.5¢ per service per hour

- 2.5¢ per gigabyte processed

- 10¢ per million requests

In terms of traffic pricing, this is somewhat comparable to Transit Gateway pricing, but significantly more than PrivateLink. Of course, both of those have a higher cost per attachment/endpoint, so the comparison will come down to the number of services and usage. VPC Peering has no associated cost, and the cost of an existing service mesh implementation may also be significantly lower.
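To get a rough feel for how these dimensions add up, here is a back-of-the-envelope estimate in Python using the prices quoted above. It treats the data charge as per gigabyte processed, assumes an average of 730 hours per month, and ignores any free tier, regional price differences and later price changes, so treat the result as indicative only.

```python
HOURS_PER_MONTH = 730  # assumption: average number of hours in a month

# Prices quoted in this article at the time of writing (USD).
PRICE_PER_SERVICE_HOUR = 0.025
PRICE_PER_GB_PROCESSED = 0.025
PRICE_PER_MILLION_REQUESTS = 0.10


def monthly_lattice_cost(services: int, gb_processed: float, requests: int) -> float:
    """Rough monthly cost estimate; ignores free tiers and regional differences."""
    service_cost = services * PRICE_PER_SERVICE_HOUR * HOURS_PER_MONTH
    data_cost = gb_processed * PRICE_PER_GB_PROCESSED
    request_cost = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return service_cost + data_cost + request_cost
```

For example, two services processing 100 GB and 10 million requests a month come to roughly $40, most of which is the per-service hourly charge. That is why the number of services tends to dominate the bill for low-traffic internal APIs.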

Hands on with Lattice - Let’s Build!

Now that we know what it is, let’s think about a real-world use case for Lattice. Suppose you have an eCommerce platform called *Nile* that aims to be the number one global outlet for non-essential products by being completely consumerism-obsessed.

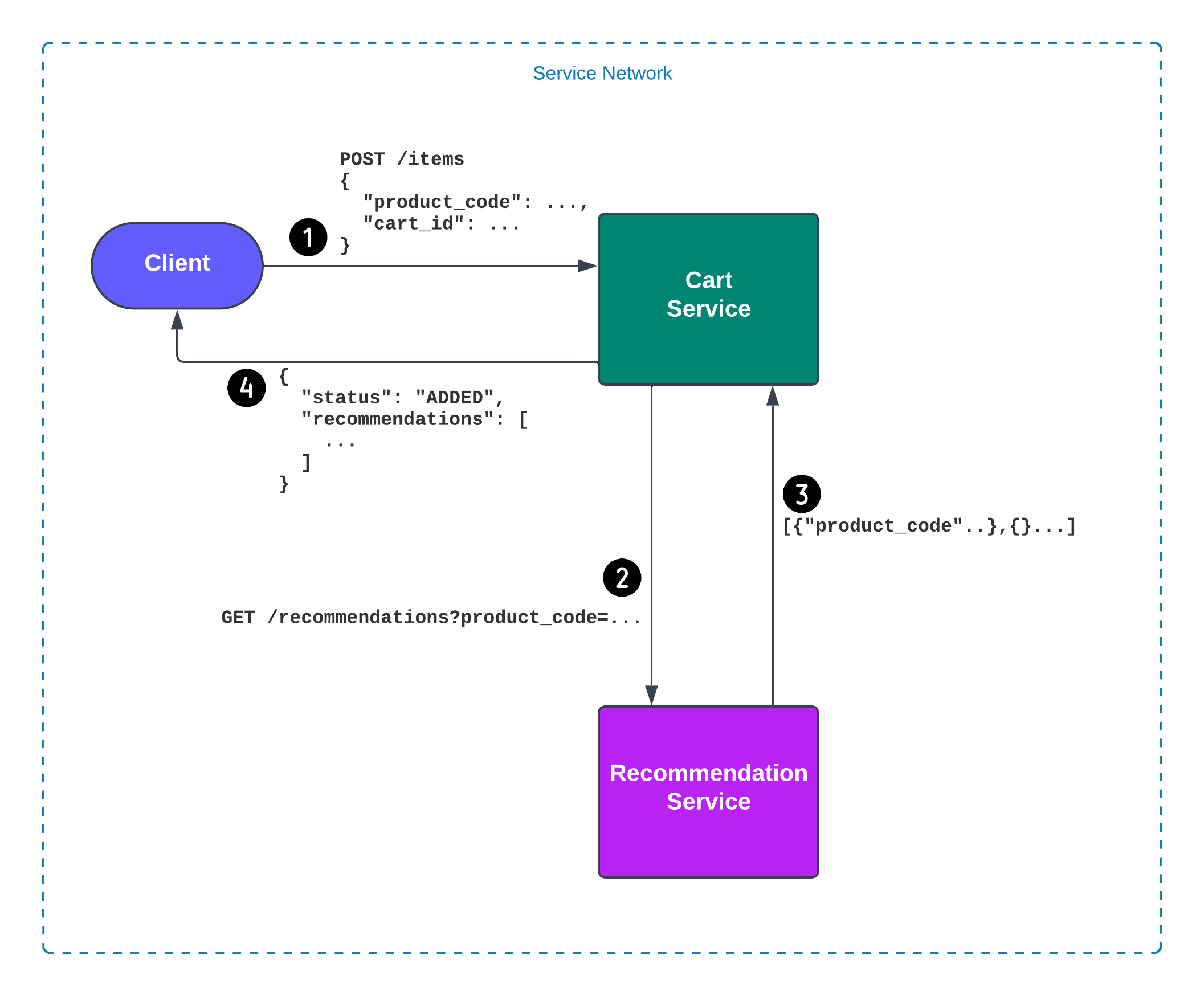

Within your platform, you have a Shopping Cart service and a Recommendation Service.

Every time an unsuspecting customer adds a product to their shopping cart, you want to hit them instantly with a set of related product recommendations to encourage them to buy more stuff they don’t need.

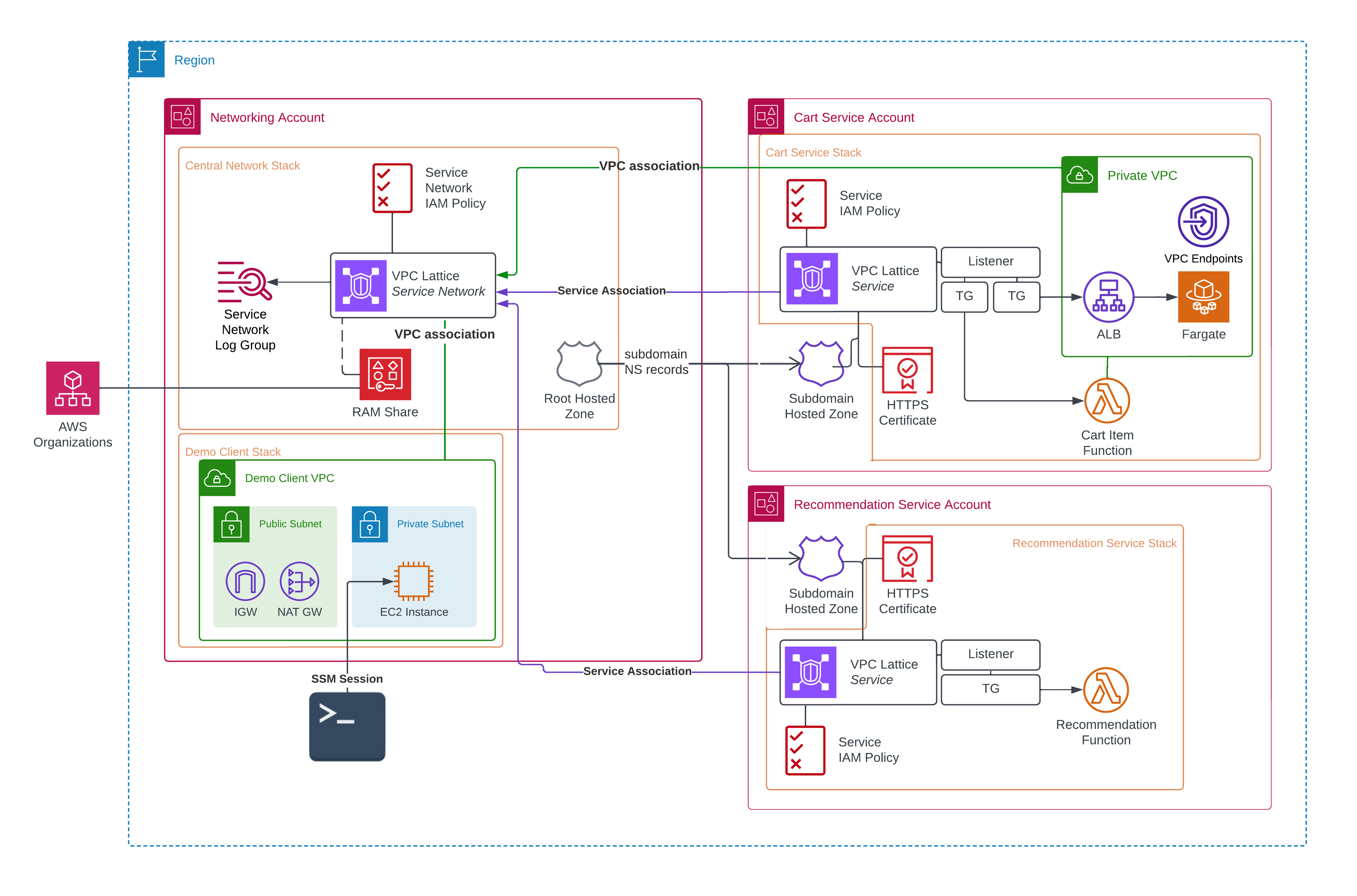

Our Cart and Recommendation services are run by separate teams and deployed to separate AWS accounts. Meanwhile, the austere and fun-destroying network admin team wants to enforce boring concepts like security and auditing. To make things smooth, we want to use a custom DNS naming convention for all services.

The public-facing API that would expose the cart service to a public network is out of scope for this application. This could be a hosted API Gateway, a reverse proxy or a GraphQL server running on a separate container or instance.

All AWS resources are defined in CDK Stacks using TypeScript. Application logic is written in Python. In a real environment, we would probably have separate CDK applications and maybe separate code repositories too. For simplicity, we will keep all stacks in the one CDK application but define separate stacks for different accounts.

Our sample application is available with complete source code on GitHub.

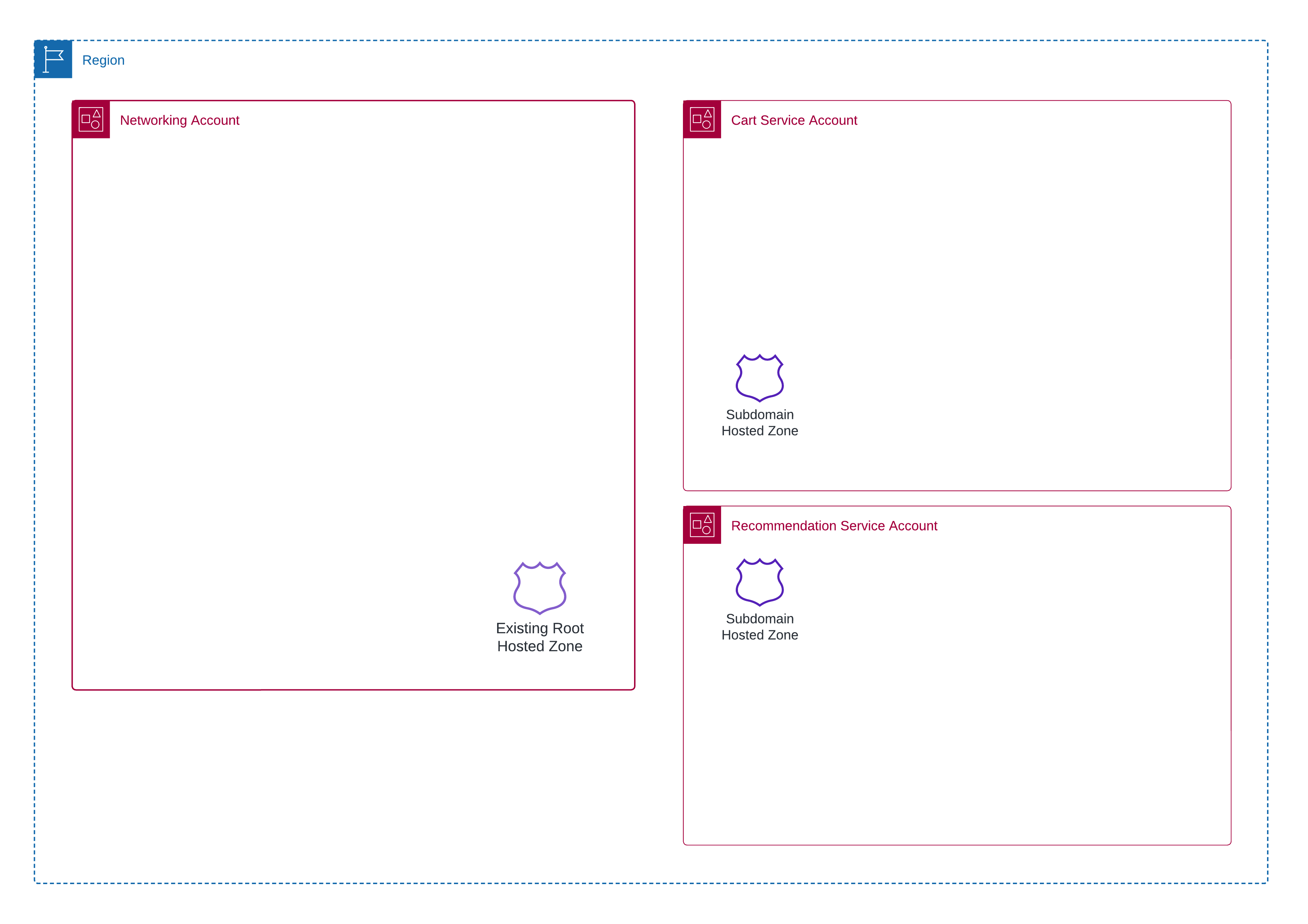

DNS Setup

For simplicity, we start with a pre-registered domain in the networking account. You have the option to use private hosted zones, but since that requires extra steps, let’s stick to public hosted zones for this demonstration. We will set up some delegate name servers in each service owner’s account. If your root domain is example.com, we would set up:

- recommendation.example.com

- cart.example.com

The first step in the deployment is to create the Route53 HostedZones in each service account. In our code example, this is done through the BaseNet stacks. This will allow us to later add nameserver (NS) delegation from the root DNS in the networking account. By taking this approach, development teams can add multiple DNS records for their services, while the admin account remains responsible for the root domain.

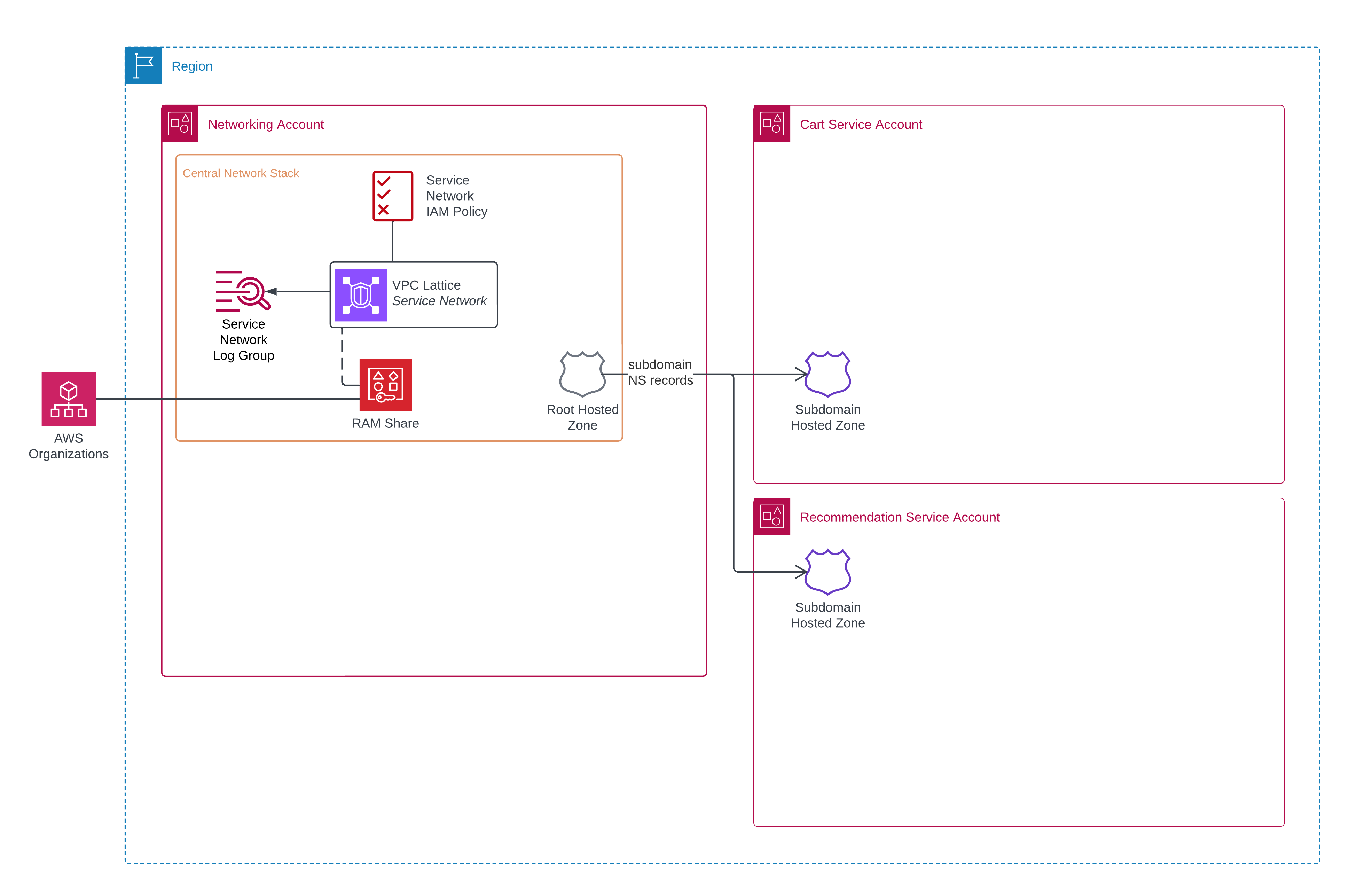

Central Network Resources

Now, we can create the network admin’s core resources to support VPC Lattice. This includes:

- The Service Network

- Service Network Logging

- Authorisation Policy

- RAM Share for the Service Network so any account in the AWS Organisation can use it. We could make this less open and opt for specific accounts, users or roles if we choose.

- DNS RecordSets to delegate subdomains to the name servers created in the previous step.

There is luckily very little complex configuration here. All resources are defined in the CentralNetwork stack.
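The subdomain delegation in the last bullet boils down to an NS record set in the root hosted zone that lists the name servers of each service account’s hosted zone. The demo creates it with CDK in the CentralNetwork stack. As an illustration, here is a Python sketch that builds the equivalent Route 53 ChangeBatch payload, in the shape accepted by boto3’s route53 change_resource_record_sets call. The helper name, the name servers and the 300-second TTL are hypothetical placeholders.

```python
from typing import List


def ns_delegation_change_batch(subdomain: str, name_servers: List[str],
                               ttl: int = 300) -> dict:
    """Build a Route 53 ChangeBatch delegating `subdomain` to a child hosted zone."""
    name = subdomain if subdomain.endswith(".") else subdomain + "."
    return {
        "Comment": f"Delegate {name} to the service account's hosted zone",
        "Changes": [
            {
                "Action": "UPSERT",  # create the record set, or update it if present
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": "NS",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": ns} for ns in name_servers],
                },
            }
        ],
    }
```

In the networking account you would then call change_resource_record_sets(HostedZoneId=..., ChangeBatch=batch) on a boto3 route53 client, passing the root zone’s ID, once per service subdomain.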

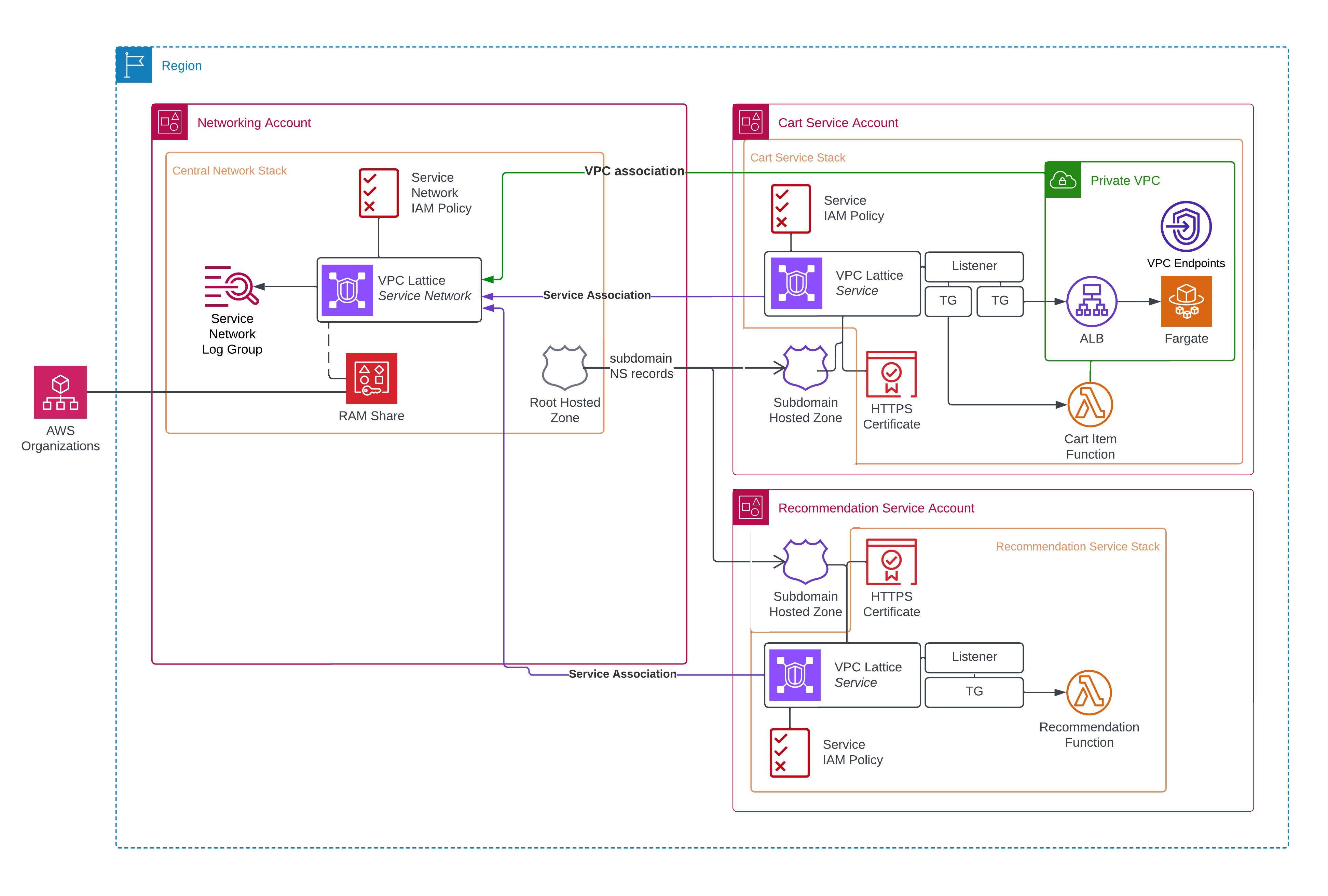

Deploying the Cart and Recommendation Services

Now that we have the central network administration resources set up, we can create the two services in their respective accounts and associate their services with the Service Network that has been shared using RAM. The service network identifier is retrieved from the Central Network Stack’s CloudFormation outputs.

The recommendation service is the simpler of the two, having a single target group backed by Lambda. The cart service has two target groups, backed by Lambda and Fargate. This illustrates how you might shift traffic from one technology to the other as part of a re-architecture, without having to disrupt API consumers.
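With two target groups behind one listener, shifting traffic comes down to adjusting the weights on the listener’s forward action. The Python sketch below builds that action as a dictionary in the shape used by the vpc-lattice API (a forward action with weighted targetGroups entries). The helper name, the target group identifiers and the direction of the migration are hypothetical; the demo itself defines its resources with CDK.

```python
def weighted_forward_action(old_tg: str, new_tg: str, percent_to_new: int) -> dict:
    """Build a listener action splitting traffic between two target groups."""
    if not 0 <= percent_to_new <= 100:
        raise ValueError("percent_to_new must be between 0 and 100")
    weights = [(old_tg, 100 - percent_to_new), (new_tg, percent_to_new)]
    return {
        "forward": {
            "targetGroups": [
                {"targetGroupIdentifier": tg, "weight": weight}
                for tg, weight in weights
                if weight > 0  # leave out a target group that should get no traffic
            ]
        }
    }


# Hypothetical identifiers: send a quarter of requests to the new backend.
action = weighted_forward_action("tg-lambda-example", "tg-fargate-example", 25)
```

You could then apply the action with the vpc-lattice client’s update_listener call (serviceIdentifier, listenerIdentifier, defaultAction), stepping percent_to_new through values such as 10, 50 and 100 while watching the Service Network logs. API consumers keep calling the same DNS name throughout.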

Putting it all Together

To test our application end-to-end, we’ll need a client in a VPC associated with the Service Network. We use a separate DemoClient stack for this, where we create a VPC with public and private subnets. The reason for having any public subnets at all is just so we can install packages from the client EC2 instance. We will make requests from the EC2 instance using an SSM session.

You can try this for yourself by following the instructions in the repository’s README.

Once you have set up the full stack, you can follow the README instructions to make some requests that add products to the cart. Once you have done that, you can see the requests being logged in the Service Network Log Group in the networking account.

You can also see that we still have VPC Flow logs!

Once you have finished, don’t forget to destroy all demo resources using the cleanup instructions in the README. These stacks include Lattice Services, VPC Endpoints and even a 🙈 NAT Gateway, so you will incur a cost per hour.

Wrapping Up

Lattice is quite polished for a new service. There are, however, some additions we would like to see, such as:

- Direct integration with ECS tasks rather than having to use an Application Load Balancer

- Integration with public-facing APIs, such as an API Gateway, AppSync, or Load Balancers, so you can use Lattice internally with a proxy/federation layer for consumers

- Full support for tracing with X-Ray

- Tools for troubleshooting IAM authorisation failures. It does not seem to be supported in the IAM Policy Simulator.

Overall, VPC Lattice offers a really neat way for AWS networking administration and development teams to simplify API communication between services or microservices. It provides strong, fine-grained authorisation, minimises the amount of network configuration and enables migration between compute backends. With VPC Lattice, teams can create and manage services without the usual IP network setup and use IAM resource-based policies for finer-grained security. Load balancing, custom DNS, and centralised logging and auditing of traffic are really nice features to have out of the box. While it does have associated costs, VPC Lattice can be a valuable tool that reduces the total engineering cost and saves a lot of time in the long run.

Thanks to Conor Maher and Luciano Mammino for their contributions and feedback to this article.

If you have a question or need help with your AWS projects, don’t hesitate to reach out to us, we’d love to help!