Intro

It is an emerging best practice to create a discrete AWS account per workload and per environment. Two main factors have enabled this strategy:

- More and more AWS services are gaining deep integration with the AWS Organizations service, and individual services are implementing more cross-account functionality.

- More mature Infrastructure as Code tooling and patterns have made managing dozens or hundreds of accounts possible.

There are many good reasons to do this. While many other clouds provide mechanisms for isolation and namespacing, in AWS the account itself is the ultimate logical isolation boundary between resources. This creates the potential for an improved security posture, a reduced blast radius and simpler authorization, and makes it easier to reason about resource limits. There is a good AWS Bites episode about common patterns for organising AWS accounts.

It has become common practice for teams to use more managed services and serverless technologies, and it is not out of the ordinary for teams, or even individual engineers, to have their own AWS account in the organisation. What is meant by “local development” is shifting. Instead of building and testing an entire application locally, teams are often building services that leverage over a dozen managed services: business logic can be encoded in Step Functions, permitted access patterns can be encoded in IAM, and the interaction between these services and their orchestration is defined in Terraform, CDK, SAM, Serverless Framework, Chalice, etc. One of the most reliable ways to develop and test these applications is against real AWS accounts. Giving engineers access to their own AWS account lets them develop against the cloud with a tight feedback loop; in my opinion, it’s essential for supporting modern cloud application development.

There is still significant overhead in maintaining multi-account strategies and providing a good developer experience. In this blog post, we will explore some simple patterns for sharing centrally managed development resources with an arbitrary number of other development AWS accounts. We are going to make an Aurora PostgreSQL cluster available by sharing a VPC subnet using AWS Resource Access Manager (RAM) and its integration with AWS Organizations. The pattern is very cost-effective; without an approach like this, having a development account per engineer could be cost-prohibitive.

Overview

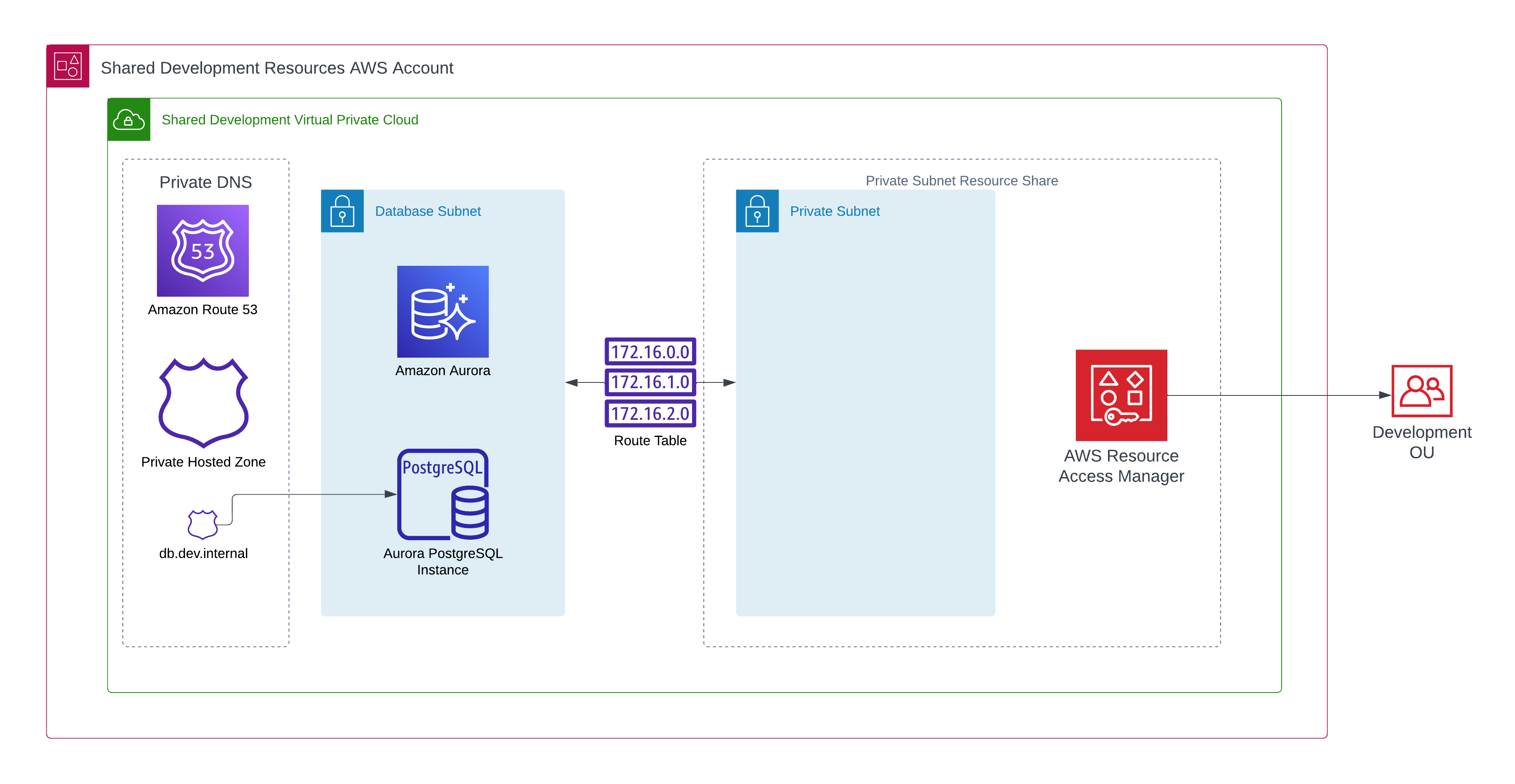

Here’s what our centrally managed development resources look like. They live in a single AWS account called the Shared Development Resources AWS Account.

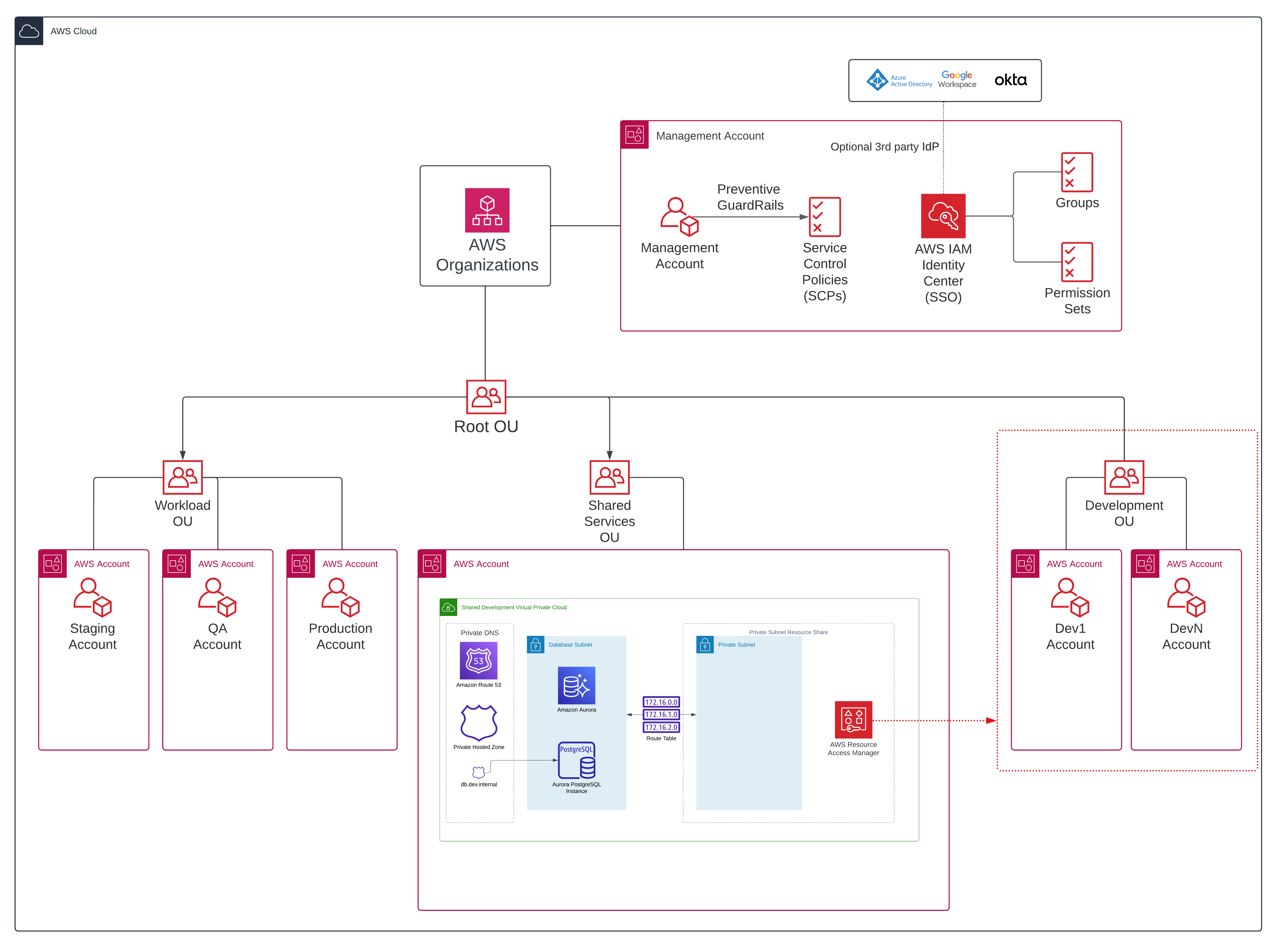

Let’s take a top-down look at how this fits into the wider AWS Organization. Our Shared Development Resources AWS Account resides in a Shared Services OU and shares resources with a Development OU.

What about VPC Peering?

That is a good question. VPC Peering is a robust, tried and tested pattern, but it is unnecessarily complex for this use case. It would require each development account to manage a VPC, we would need to ensure there are no overlapping CIDRs, and it would need much more complex automation: the shared services account would need to be made aware of new development accounts and create a VPC peering request, and each development account would then need to accept that request. By using RAM to share the subnet with a development Organizational Unit, the subnet is available in all current and future accounts in that OU with no additional automation or overhead.

Complexity

As mentioned above, managing a VPC per account can be complex. Using this pattern, a central cloud team can manage the VPC and datastores. Our engineers just want to consume a service that lives in a VPC; we want the VPC to be opaque. Many modern serverless architectures are also “networkless” (don’t @ me), composed of Lambda functions, EventBridge, Step Functions, DynamoDB, API Gateway, etc. VPCs may only enter the picture when access to more traditional or serverful components (like an EC2 instance) is required, or when there are requirements around where network traffic flows. In this hypothetical example, only a handful of Lambda functions may require access to the Aurora cluster; those functions can be deployed into a subnet in the VPC while the rest of the architecture remains outside of a VPC. Similarly, we want to avoid the complexity of each engineer needing to deploy and maintain their own Aurora cluster.
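A minimal sketch of what this could look like in a development account, assuming the function's IAM role already exists (with the AWSLambdaVPCAccessExecutionRole managed policy or equivalent network-interface permissions) and that the shared subnet IDs and a security group are passed in as variables. The function name, runtime, handler, zip path and variable names are all hypothetical:

```hcl
# Hypothetical inputs for a development account
variable "shared_subnet_ids" {
  type        = list(string)
  description = "IDs of the private subnets shared into this account via RAM"
}

variable "db_client_security_group_id" {
  type        = string
  description = "Security group created in this account, inside the shared VPC"
}

variable "lambda_role_arn" {
  type        = string
  description = "Execution role with permissions to manage VPC network interfaces"
}

# Only the function that needs the database is attached to the shared subnets;
# the rest of the stack (EventBridge, Step Functions, DynamoDB...) stays outside the VPC.
resource "aws_lambda_function" "orders_db_writer" {
  function_name = "orders-db-writer"
  role          = var.lambda_role_arn
  runtime       = "python3.12"
  handler       = "app.handler"
  filename      = "build/orders_db_writer.zip"

  vpc_config {
    subnet_ids         = var.shared_subnet_ids
    security_group_ids = [var.db_client_security_group_id]
  }
}
```

Only this function gets a vpc_config block; everything else in the application is deployed as usual, with no VPC configuration at all.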

Cost

There are significant cost benefits to this approach. Private subnets need a NAT Gateway for outbound internet connectivity, and you may also want to deploy VPC Endpoints to reach AWS services without traversing the public internet; VPC Endpoints have their own cost considerations. This pattern allows us to share a single NAT Gateway and a single Aurora cluster with dozens of development AWS accounts. I have successfully used it with 20+ accounts.

In the eu-west-1 region, a db.t3.medium Aurora PostgreSQL instance costs $0.088 per hour and a NAT Gateway costs $0.048 per hour. An AWS month ™️ is 730 hours, which comes to $99.28 for the pair. So for just a hair under $100 a month we can run our shared VPC, NAT Gateway, Aurora cluster, etc. If we were to provision this per development account, then with 20 accounts we would be paying roughly $2,000 per month just for the baseline development infrastructure, on top of the additional management overhead!
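The arithmetic behind these numbers, using the eu-west-1 on-demand prices quoted above (prices change over time, and this ignores data transfer, NAT Gateway data processing and Aurora storage charges):

```latex
\begin{aligned}
\text{hourly (shared)} &= 0.088 + 0.048 = 0.136\ \text{USD/h} \\
\text{monthly (shared)} &= 0.136 \times 730 = 99.28\ \text{USD} \\
\text{monthly (one stack per account, 20 accounts)} &= 20 \times 99.28 = 1985.60\ \text{USD}
\end{aligned}
```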

Terraform Repo

Terraform is an excellent way to manage core infrastructure patterns like this. There is an accompanying GitHub repo for this blog post with three Terraform examples.

The organization example creates an AWS Organization with trusted access for Resource Access Manager enabled. It also creates Organizational Units for development and shared services, and adds sensible guardrails to the accounts in the development OU using a Service Control Policy (SCP).

The shared example creates the resources for the shared development resources account (the VPC, the Aurora cluster and the private Route53 hosted zone) and configures Resource Access Manager to share the private subnets into the Development Organizational Unit.

The developer_account example deploys a simple EC2 instance into a private subnet that is shared into a developer account. We will use SSM Session Manager to connect to this EC2 instance and demonstrate private DNS resolution and connectivity to the shared cluster.

We are using Terraform community modules, which are battle-tested and abstract away a lot of the heavy lifting.
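As an illustration, the shared VPC could be built with the community terraform-aws-modules/vpc/aws module. This is a sketch, not the repo's exact configuration: the version constraint, name, CIDR ranges and availability zones are assumptions.

```hcl
# Sketch of the shared development VPC using the community VPC module
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0"

  name = "shared-development"
  cidr = "10.0.0.0/16"

  azs              = ["eu-west-1a", "eu-west-1b", "eu-west-1c"]
  private_subnets  = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]       # shared via RAM
  public_subnets   = ["10.0.101.0/24", "10.0.102.0/24", "10.0.103.0/24"] # hosts the NAT Gateway
  database_subnets = ["10.0.201.0/24", "10.0.202.0/24", "10.0.203.0/24"] # Aurora lives here

  # One NAT Gateway for the whole VPC instead of one per AZ
  enable_nat_gateway = true
  single_nat_gateway = true

  # Private hosted zones require both DNS settings to be enabled on the VPC
  enable_dns_hostnames = true
  enable_dns_support   = true

  create_database_subnet_group = true
}
```

single_nat_gateway keeps the NAT cost to a single hourly charge at the expense of AZ-level redundancy, which is usually an acceptable trade-off for development.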

Building the Solution

Step 0 - AWS Organization Configuration

Trusted access for RAM must be enabled in AWS Organizations. If you are managing your AWS Organization using Terraform, you can add the ram.amazonaws.com service principal to aws_service_access_principals:

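```hcl
resource "aws_organizations_organization" "management" {
  aws_service_access_principals = [
    "cloudtrail.amazonaws.com",
    "config.amazonaws.com",
    "sso.amazonaws.com",
    "ram.amazonaws.com", # enables trusted access for Resource Access Manager
  ]

  # Required so we can attach Service Control Policies to OUs
  enabled_policy_types = [
    "SERVICE_CONTROL_POLICY",
  ]

  # "ALL" (rather than consolidated billing only) is required for SCPs and trusted access
  feature_set = "ALL"
}
```

Alternatively, you can run the following AWS CLI command from the management account: aws ram enable-sharing-with-aws-organization.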

We then need a shared development resources account, which is where the VPC and Aurora cluster will be deployed. We are placing it in a shared_services Organizational Unit; you could have other shared services accounts in this OU that provide networking or other core infrastructure services.

```hcl
resource "aws_organizations_organizational_unit" "shared_services" {
  name      = "shared_services"
  parent_id = aws_organizations_organization.management.roots[0].id
}

resource "aws_organizations_account" "shared_development" {
  email     = "cloud-team+shared-development@acme.com"
  name      = "acme-shared-development"
  parent_id = aws_organizations_organizational_unit.shared_services.id

  tags = {
    environment = "shared-development"
  }

  # Guard against accidentally removing the account from the Organization
  lifecycle {
    prevent_destroy = true
  }
}
```

For the purposes of the demo, we also need at least one development account. You could be creating these with Control Tower, org-formation, custom tooling, or manually; in this demo, we are going to continue with Terraform.

```hcl
resource "aws_organizations_organizational_unit" "development" {
  name      = "development"
  parent_id = aws_organizations_organization.management.roots[0].id
}

resource "aws_organizations_account" "dev1" {
  email     = "cloud-team+dev1@acme.com"
  name      = "acme-dev1"
  parent_id = aws_organizations_organizational_unit.development.id

  tags = {
    environment = "dev1"
  }

  # Guard against accidentally removing the account from the Organization
  lifecycle {
    prevent_destroy = true
  }
}
```

In the demo repo you’ll also see that we create a Service Control Policy and apply it to the Development OU. Rather than attempting to create restrictive developer roles and policies, a common pattern is to give engineers administrator-level access in their own development account and use an SCP to provide guardrails, such as denying the ability to leave the Organization, to create IAM Users and Access Keys, and to disable common detective controls. Again, this is about empowering engineers and improving the developer experience: rather than needing to contact a central cloud team for additional privileges, engineers get a high level of flexibility, which is another advantage of the multi-account strategy. It also makes sense to implement good budget alarms to keep an eye on spend.
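A sketch of such a guardrail SCP attached to the Development OU. The statements are examples of the kinds of actions described above, not the repo's exact policy, and the policy and statement names are hypothetical:

```hcl
# Hypothetical guardrail SCP; the demo repo's actual policy may differ
resource "aws_organizations_policy" "development_guardrails" {
  name = "development-guardrails"
  type = "SERVICE_CONTROL_POLICY"

  content = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid      = "DenyLeavingOrganization"
        Effect   = "Deny"
        Action   = "organizations:LeaveOrganization"
        Resource = "*"
      },
      {
        Sid      = "DenyIamUsersAndAccessKeys"
        Effect   = "Deny"
        Action   = ["iam:CreateUser", "iam:CreateAccessKey"]
        Resource = "*"
      },
      {
        Sid    = "DenyDisablingDetectiveControls"
        Effect = "Deny"
        Action = [
          "cloudtrail:StopLogging",
          "cloudtrail:DeleteTrail",
          "config:StopConfigurationRecorder",
          "config:DeleteConfigurationRecorder",
          "guardduty:DeleteDetector",
        ]
        Resource = "*"
      },
    ]
  })
}

# Applies to every account in the Development OU, including ones created later
resource "aws_organizations_policy_attachment" "development_guardrails" {
  policy_id = aws_organizations_policy.development_guardrails.id
  target_id = aws_organizations_organizational_unit.development.id
}
```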

Step 1 - Create Shared Resources

Now that our AWS Organization is configured, we can create the shared development resources: a VPC with database subnets and private subnets, and an Aurora cluster in that VPC. We also create a private Route53 hosted zone, which allows our development accounts to refer to the shared database as db.dev.internal. DNS resolution works because the development resources will be deployed in a subnet of the VPC that is associated with the private hosted zone.

```hcl
# Private hosted zone associated with the shared VPC
resource "aws_route53_zone" "private" {
  name = "dev.internal"

  vpc {
    vpc_id = module.vpc.vpc_id
  }

  tags = {
    Terraform   = "true"
    Environment = "shared-dev"
  }
}

# db.dev.internal -> the Aurora cluster (writer) endpoint
resource "aws_route53_record" "rds_endpoint" {
  name    = "db"
  type    = "CNAME"
  ttl     = 60
  zone_id = aws_route53_zone.private.id
  records = [module.db.rds_cluster_endpoint]
}
```

We’re now ready to share a subnet. We are going to share the private subnets of the VPC with the development Organizational Unit we created.
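The principal association in the next step references var.development_ou_arn. Because the organization and shared examples are separate Terraform configurations, one way to hand the OU ARN over is an output in one and a variable in the other, passed in for instance via a .tfvars file. This is a sketch; the output name is an assumption:

```hcl
# In the organization example: expose the Development OU ARN
output "development_ou_arn" {
  value = aws_organizations_organizational_unit.development.arn
}

# In the shared example: accept it as the RAM principal to share with
variable "development_ou_arn" {
  type        = string
  description = "ARN of the Development OU that receives the shared subnets"
}
```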

Step 2 - Share Resources!

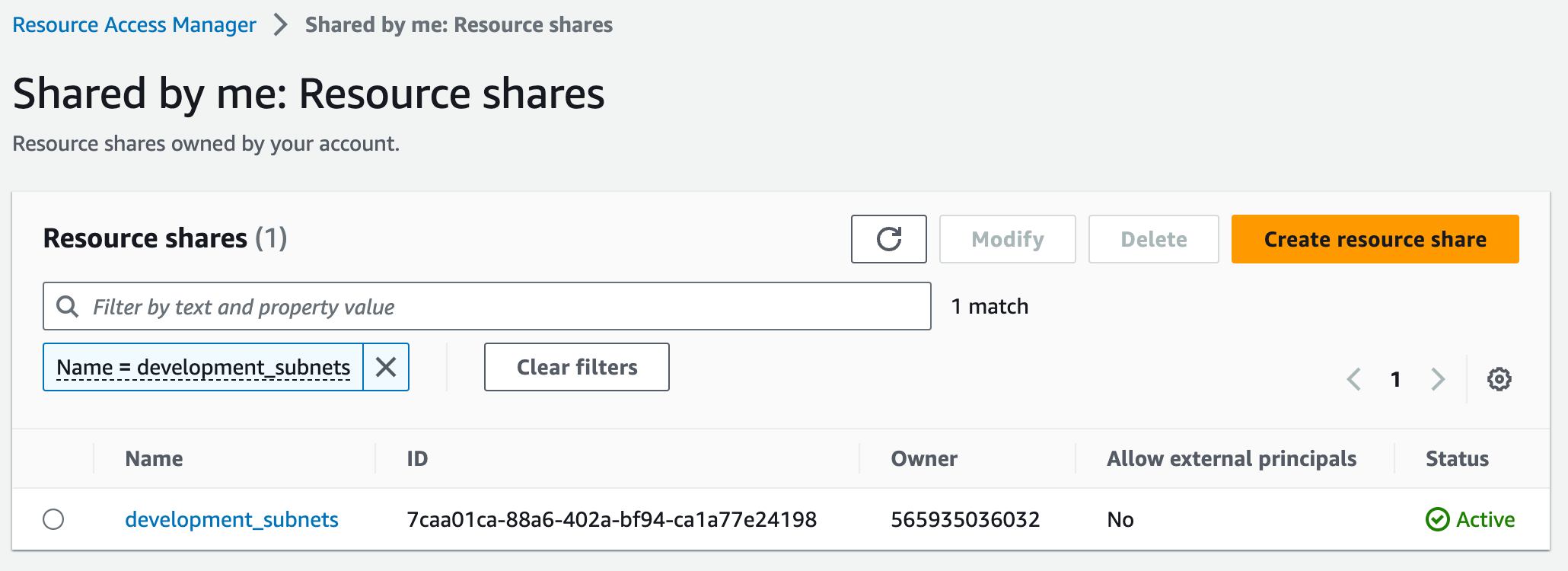

The core concept in AWS Resource Access Manager is the resource share, which represents a list of resources to share and the principals they are shared with.

This is pretty straightforward. We create the resource share, disallowing external principals because we are sharing with an OU within our own organization. We then use Terraform’s powerful for_each meta-argument to create a RAM resource association for each of our private subnets (one per availability zone), and finally we associate the principal: our development Organizational Unit.

resource "aws_ram_resource_share" "development_subnets" {

name = "development_subnets"

allow_external_principals = false

}

resource "aws_ram_resource_association" "development_subnets" {

for_each = toset(module.vpc.private_subnet_arns)

resource_arn = each.value

resource_share_arn = aws_ram_resource_share.development_subnets.arn

}

resource "aws_ram_principal_association" "development_ou" {

principal = var.development_ou_arn

resource_share_arn = aws_ram_resource_share.development_subnets.arn

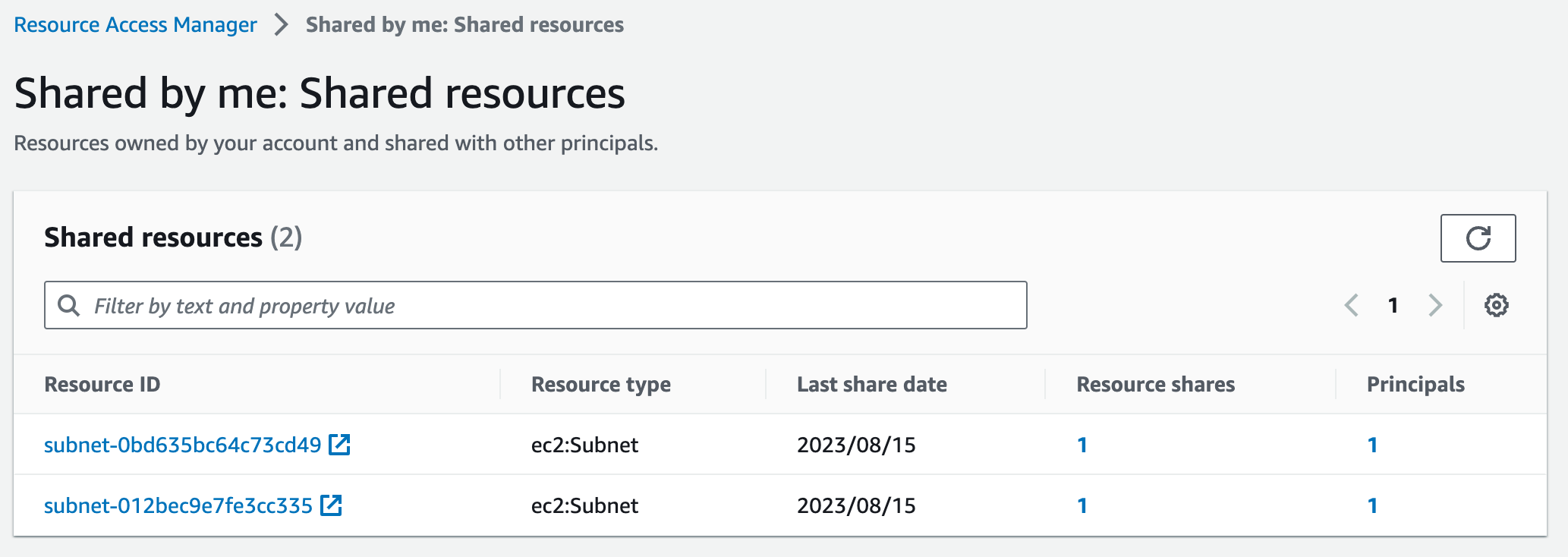

}In the shared development resources account we can now see the development_subnets resource share.

We can see the resources we have associated with that share:

And the principal we are sharing with:

Step 3 - Kick the tyres from a development account

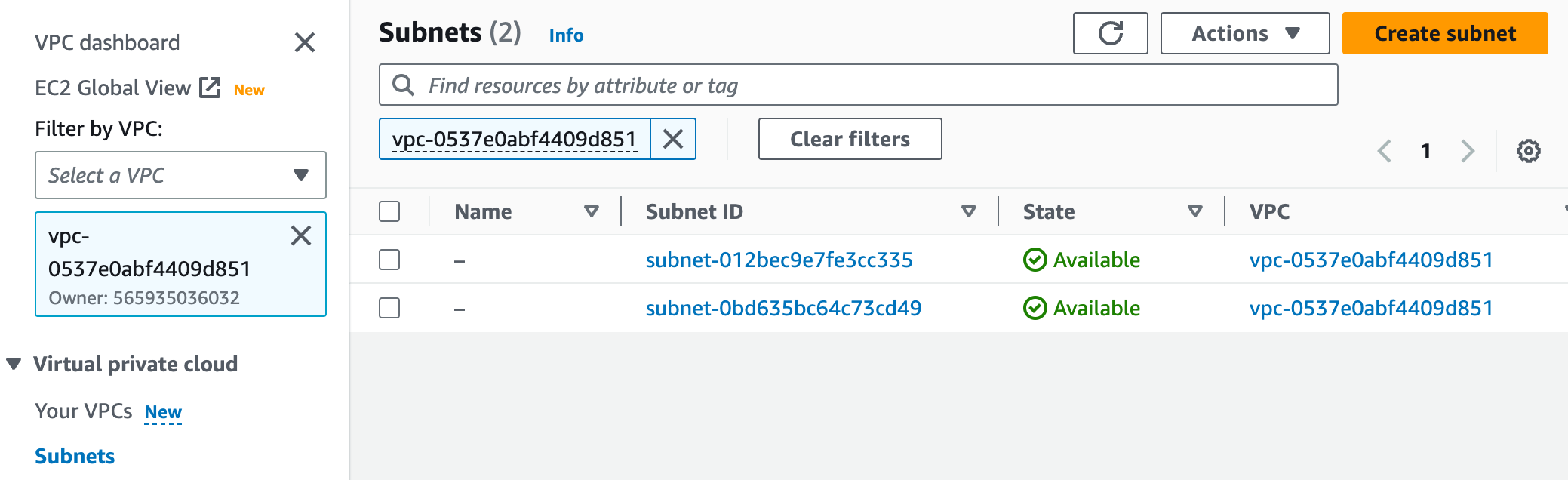

In every account in the development OU, if you head to the VPC Console you will see the shared VPC. You can identify it because its Owner is the account ID of your shared development resources account.

You have limited insight into the shared VPC: you can see its route tables, Internet Gateways, etc., but you cannot see NAT Gateways, nor any Security Groups that the owner or other accounts have created in the VPC. This is a little unusual but makes sense. RAM allows you to share a subnet but not security groups, which means you create security groups in the receiving account, in the context of the shared subnet’s VPC. You cannot see or use the security groups of the VPC owner account; however, you can reference them as a source or destination in any security group rules you create in the current account.
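A sketch of creating such a security group from a development account. It assumes the ID of one of the shared subnets is passed in via a hypothetical shared_subnet_id variable; the name prefix and the PostgreSQL default port 5432 are assumptions:

```hcl
variable "shared_subnet_id" {
  type        = string
  description = "ID of one of the private subnets shared into this account via RAM"
}

# Discover the VPC the shared subnet belongs to (owned by another account)
data "aws_subnet" "shared" {
  id = var.shared_subnet_id
}

data "aws_vpc" "shared" {
  id = data.aws_subnet.shared.vpc_id
}

# Owned by this development account, but created inside the shared VPC
resource "aws_security_group" "db_client" {
  name_prefix = "db-client-"
  description = "Workloads that connect to the shared Aurora cluster"
  vpc_id      = data.aws_subnet.shared.vpc_id

  egress {
    description = "PostgreSQL within the shared VPC"
    from_port   = 5432
    to_port     = 5432
    protocol    = "tcp"
    cidr_blocks = [data.aws_vpc.shared.cidr_block]
  }
}
```

Because Terraform removes the default allow-all egress rule from security groups it manages, this group only permits outbound PostgreSQL traffic within the VPC.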

I like to think of it as the subnet being projected into the development accounts. In this particular environment, we have configured the database security group to allow ingress from the private subnet CIDR ranges, so we don’t have to worry about creating security group rules that reference source security groups across accounts.
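On the owner side, a rule like this could be expressed as follows. This sketch assumes the module.vpc from the shared example and creates its own security group for the cluster (the name prefix is hypothetical); the Aurora community module can also manage the cluster's security group and rules for you:

```hcl
# Shared development resources account: security group for the Aurora cluster
resource "aws_security_group" "db" {
  name_prefix = "shared-aurora-"
  description = "Shared development Aurora cluster"
  vpc_id      = module.vpc.vpc_id
}

# Allow PostgreSQL from every shared private subnet CIDR, so development
# accounts never need cross-account security group references
resource "aws_security_group_rule" "db_from_private_subnets" {
  type              = "ingress"
  description       = "PostgreSQL from the shared private subnets"
  from_port         = 5432
  to_port           = 5432
  protocol          = "tcp"
  cidr_blocks       = module.vpc.private_subnets_cidr_blocks
  security_group_id = aws_security_group.db.id
}
```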

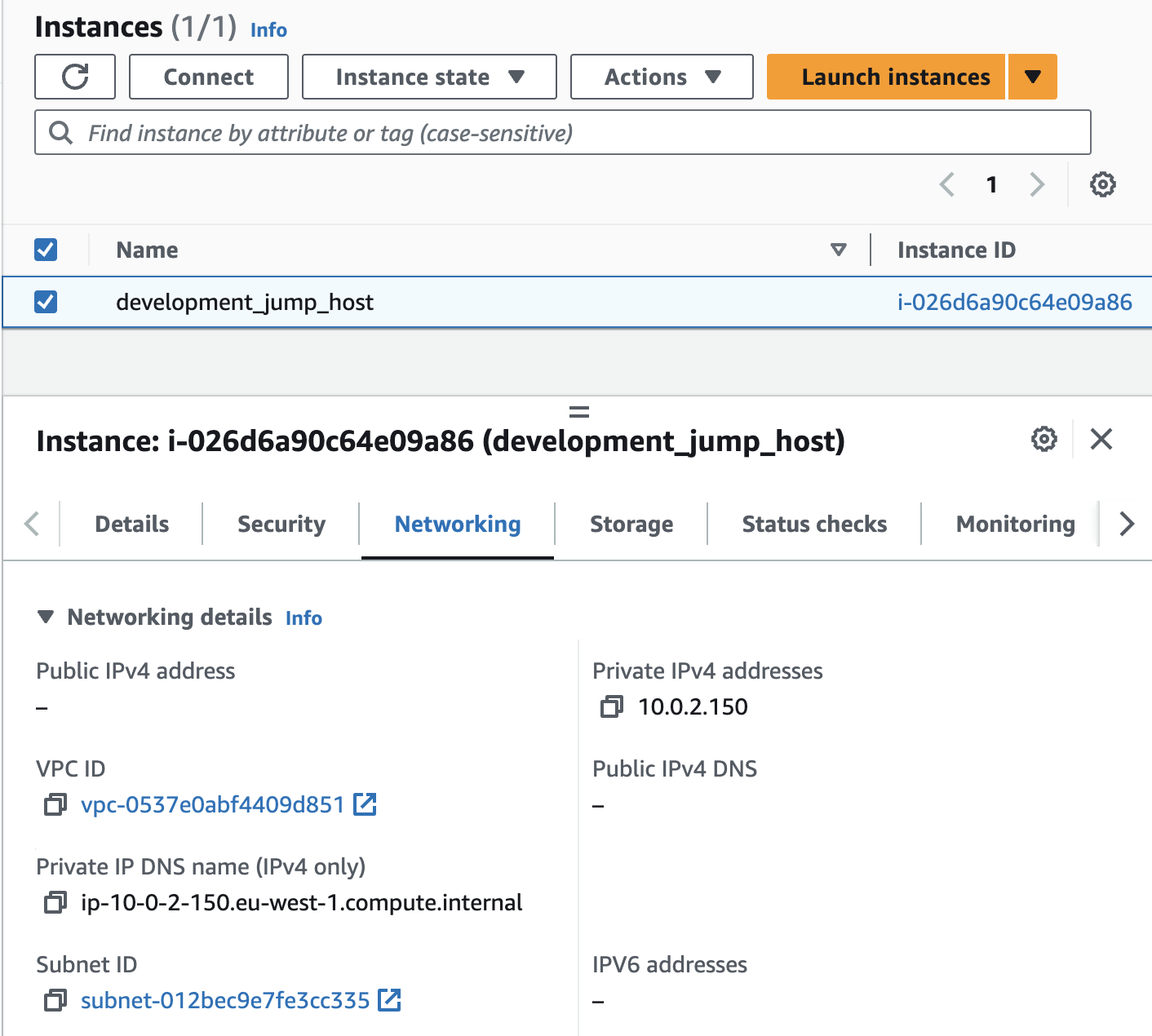

Let’s create an EC2 instance in the shared subnet so we can explore.

When you run the developer_account Terraform, it creates an EC2 jump host with an instance profile (IAM role) that has the AmazonSSMManagedInstanceCore managed policy attached. This allows us to connect easily using SSM Session Manager: navigate to the EC2 Console and find the development_jump_host instance.
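A minimal, standalone sketch of such a jump host. The AMI filter, instance type, resource names and the jump_host_subnet_id variable are assumptions rather than the repo's exact code. The instance gets no public IP, and its security group has no ingress rules, because Session Manager connections are initiated outbound by the SSM Agent:

```hcl
variable "jump_host_subnet_id" {
  type        = string
  description = "ID of a private subnet shared into this account via RAM"
}

data "aws_subnet" "jump_host" {
  id = var.jump_host_subnet_id
}

# Latest Amazon Linux 2 AMI (ships with the SSM Agent preinstalled)
data "aws_ami" "amazon_linux_2" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["amzn2-ami-hvm-*-x86_64-gp2"]
  }
}

resource "aws_iam_role" "jump_host" {
  name = "development-jump-host"
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { Service = "ec2.amazonaws.com" }
    }]
  })
}

# Lets the SSM Agent register the instance with Session Manager
resource "aws_iam_role_policy_attachment" "ssm_core" {
  role       = aws_iam_role.jump_host.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
}

resource "aws_iam_instance_profile" "jump_host" {
  name = "development-jump-host"
  role = aws_iam_role.jump_host.name
}

# No ingress rules; all outbound traffic (SSM, package installs, PostgreSQL) allowed
resource "aws_security_group" "jump_host" {
  name_prefix = "development-jump-host-"
  vpc_id      = data.aws_subnet.jump_host.vpc_id

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_instance" "jump_host" {
  ami                         = data.aws_ami.amazon_linux_2.id
  instance_type               = "t3.micro"
  subnet_id                   = var.jump_host_subnet_id
  vpc_security_group_ids      = [aws_security_group.jump_host.id]
  iam_instance_profile        = aws_iam_instance_profile.jump_host.name
  associate_public_ip_address = false

  tags = {
    Name = "development_jump_host"
  }
}
```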

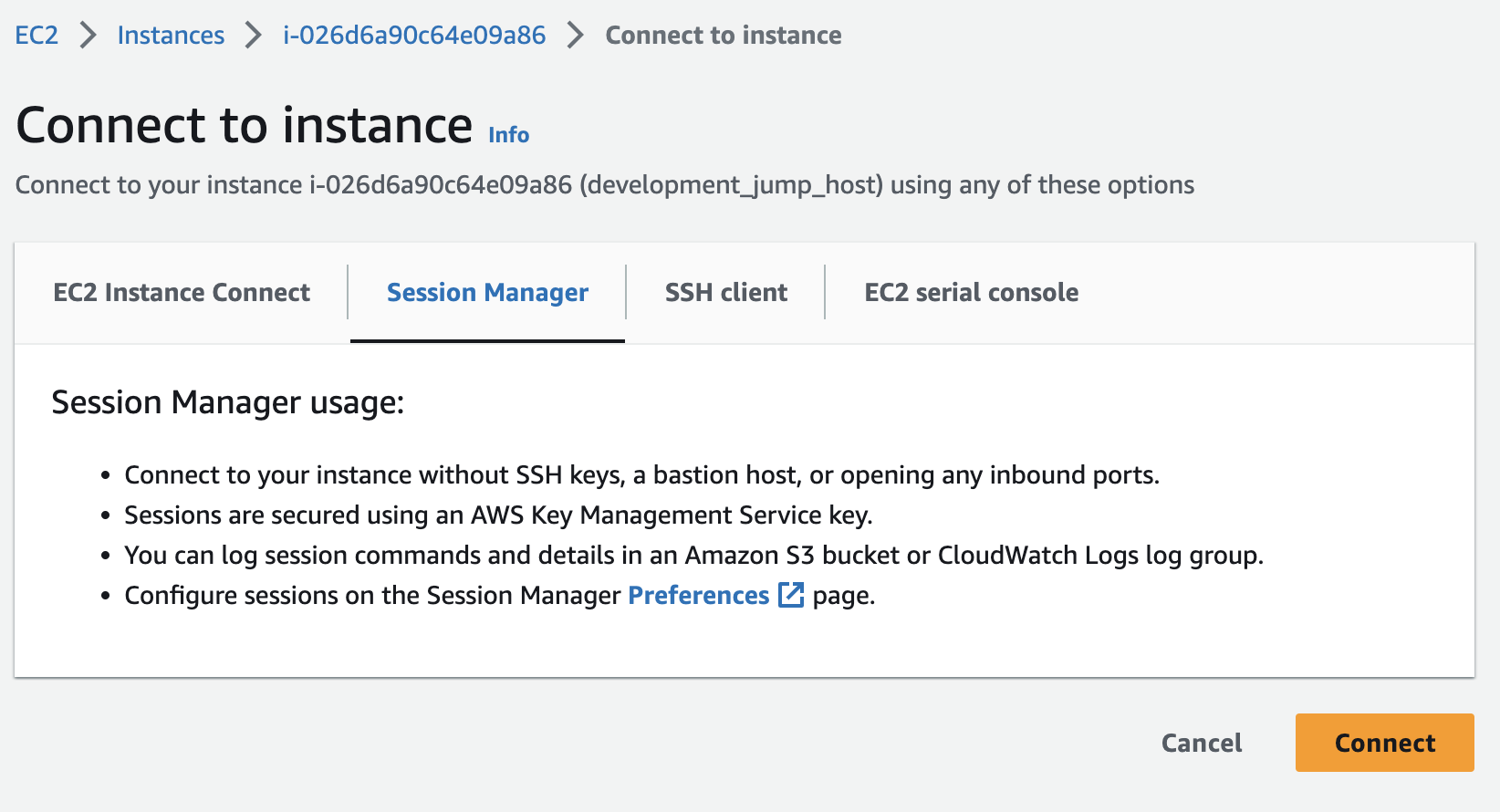

You can then choose Session Manager and Connect.

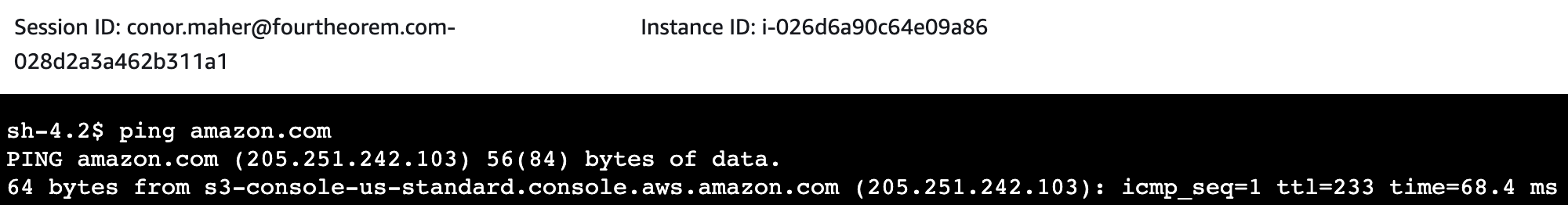

We now have a shell session on an EC2 instance that is deployed into a private subnet owned and completely managed by the shared development resources account. This instance has no public IP, but it has internet connectivity via the NAT Gateway in the shared VPC, so we can establish our session using SSM. This is a great pattern because it does not require a bastion host or a public IP listening on port 22, and we do not need to manage SSH keys.

We can confirm the outbound internet connectivity:

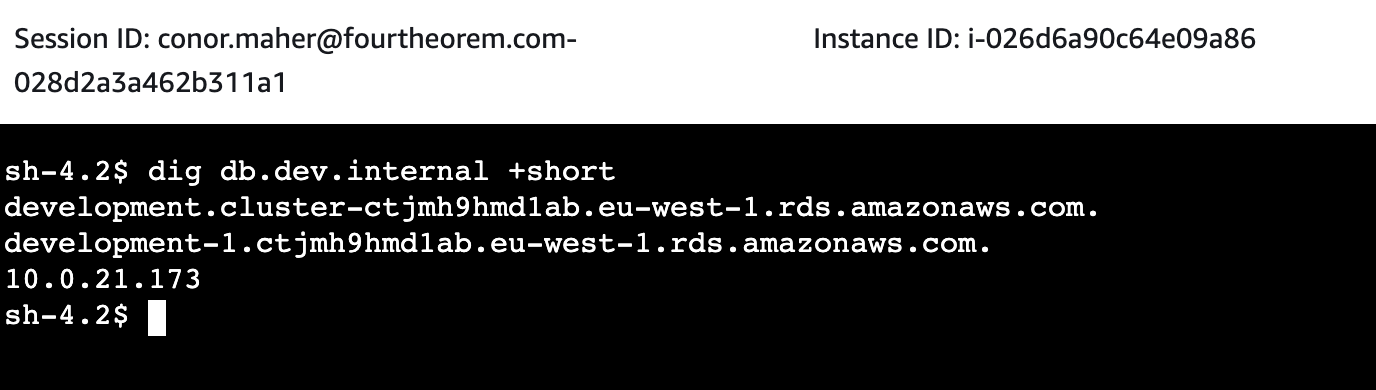

We can now test DNS resolution, i.e. that we can resolve the CNAME record created in the private Route53 zone of the VPC in the shared development resources account.

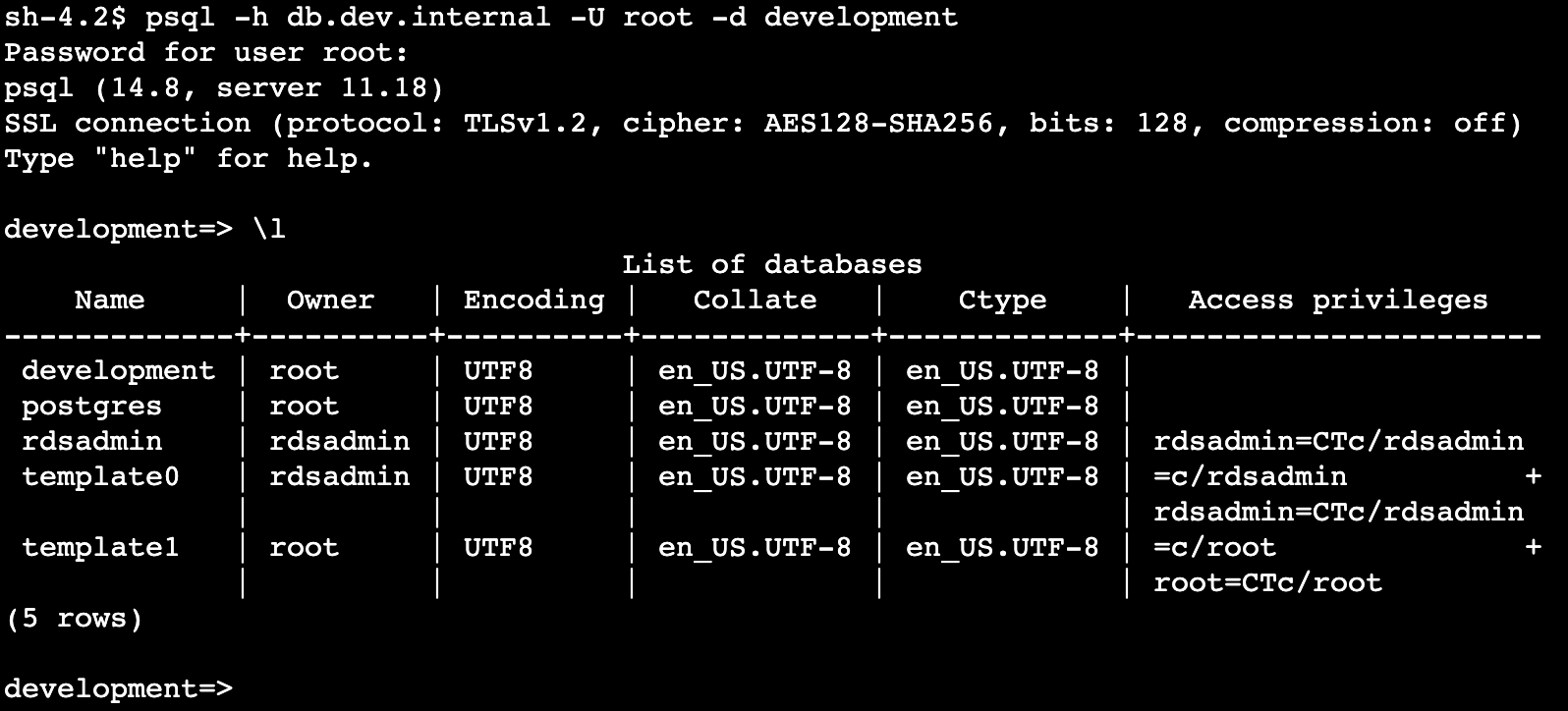

Finally, let’s ensure our connectivity to the Aurora cluster is working. We can install the psql client and connect; the demo Terraform outputs the cluster password when you create the shared resources.

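```shell
# Amazon Linux 2: install the PostgreSQL 14 client
sudo amazon-linux-extras install postgresql14

# Connect through the private DNS name; enter the password output by the shared example
psql -h db.dev.internal -U root -d development
```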

Closing

We just explored RAM subnet sharing as a development pattern, but the same approach works for sharing any resource that resides in a subnet of one VPC with other accounts or organisational units. In some environments, you could avoid VPC Peering, Transit Gateways and other complex topologies, and instead use this pattern to allow access to any centrally managed resource that is deployed in a subnet, for example:

- RDS

- Redshift

- Elasticsearch (Amazon OpenSearch Service)

- ElastiCache

- Load Balancers

If you are considering a cross-account pattern like this for HTTP or gRPC workloads, check out our recent VPC Lattice blog post.

If you have followed along, remember to destroy the resources you created 💸. You can do this by running terraform destroy in each of the examples you have deployed, destroying the development resources first and then the shared resources.

I hope you enjoyed this post, sharing is caring! Keep an eye on the fourTheorem blog as we will explore some more patterns for improving developer experience and productivity in AWS in upcoming posts.

Thanks to Luciano Mammino, Michael Twomey and David Lynam for reviewing this article. Header image credit: Alina Grubnyak.

If you have a question or need help with your AWS projects, don’t hesitate to reach out to us, we’d love to help!